Motive API: Quick Start Guide

An overview of the Motive API.

Overview

SDK/API Support Disclaimer

OptiTrack provides developer tools to enable our customers across a broad set of applications to utilize their systems in the ways that best suit them. Our Motive API through the NatNet SDK and Camera SDK is designed to enable experienced software developers to integrate data transfer and/or system operation with their preferred systems and pipelines. Sample projects are provided with each tool, and we strongly recommend users reference or use the samples as reliable starting points.

The following list specifies the range of support OptiTrack provides for the SDK and API tools:

Using the SDK/API tools requires background knowledge of software development. We do not provide support for basic project setup, compiling, and linking when using the SDK/API to create your own applications.

We ensure the SDK tools and their libraries work as intended. We do not provide support for custom developed applications that have been programmed or modified by users using the SDK tools.

Ticketed support is provided to licensed Motive users who use the Motive API and/or the NatNet SDK tools with the included libraries and sample source code only.

The Camera SDK is a free product. We do not provide free ticketed support for it.

For other questions, please check out the NaturalPoint forums. Very often, similar development issues are reported and solved there.

This guide provides detailed instructions for commonly used functions of the Motive API for developing custom applications. For a full list of functions, refer to the Motive API: Function Reference page. For a sample use case of the API functions, please check out the provided marker project.

In this guide, the following topics will be covered:

Library files and header files

Initialization and shutdown

Capture setup (Calibration)

Configuring camera settings

Updating captured frames

3D marker tracking

Rigid body tracking

Data streaming

Environment Setup

Library Files

When developing a Motive API project, the linker needs to know where to find the required library files. Do this either by specifying their location within the project or by copying the files to the project folder.

MotiveAPI.h

Motive API libraries (.lib and .dll) are in the lib folder within the Motive install directory, located by default at C:\Program Files\OptiTrack\Motive\lib. This folder contains the 64-bit library files (MotiveAPI.dll and MotiveAPI.lib).

When using the API library, all of the required DLL files must be located in the executable directory. If necessary, copy and paste the MotiveAPI.dll file into the folder with the executable file.

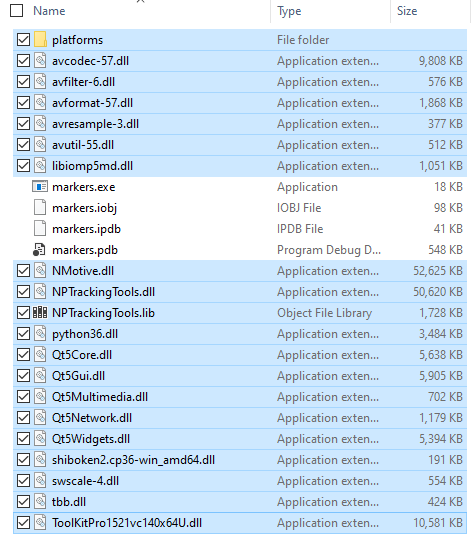

Third-party Libraries

Additional third-party libraries are required for the Motive API, and most of the DLL files for these libraries can be found in the Motive install directory, C:\Program Files\OptiTrack\Motive\. Copy all of the DLL files from the Motive installation directory into the directory of the Motive API project to use them. The highlighted items in the image below are all required DLL files.

Lastly, copy the C:\Program Files\OptiTrack\Motive\plugins\platforms folder and its contents into the EXE folder, since those libraries will also be used.

Header Files

Function declarations and classes are contained in the header file MotiveAPI.h, located in the folder C:\Program Files\OptiTrack\Motive\inc\.

Always add the include directive for the MotiveAPI.h header file in every program developed against the Motive API.

Note: You can define these directories by using the MOTIVEAPI_INC and MOTIVEAPI_LIB environment variables. Check the project properties (Visual Studio) of the provided marker project for a sample project configuration.

Motive Files

Unless otherwise specified, the Motive API loads the default calibration (MCAL) and application profile (MOTIVE) files from the program data directory. Motive also loads these files at startup. They are located in the following folders:

Default System Calibration: C:\ProgramData\OptiTrack\Motive\System Calibration.mcal

Default Application Profile: C:\ProgramData\OptiTrack\MotiveProfile.motive

Both files can be exported and imported into Motive as needed for the project:

The application profile can be imported using the LoadProfile function to obtain software settings and trackable asset definitions.

The Calibration file can be imported using the LoadCalibration function to ensure reliable 3D tracking data is obtained.

Initialization and Shutdown

When using the API, connected devices and the Motive API library need to be properly initialized at the beginning of a program and closed down at the end.

Initialization

To initialize all of the connected cameras, call the Initialize function. This function initializes the API library and gets the cameras ready to capture data, so always call this function at the beginning of a program. If you attempt to use the API functions without initializing first, you will get an error.

Motive Profile Load

Initialize loads the default Motive profiles (MOTIVE) from the ProgramData directory during the initialization process. To load a Motive profile from a different directory, use the LoadProfile function.

Update

The Update function is primarily used for updating captured frames, but it can also be called to update a list of connected devices. Call this function after initialization to make sure all of the newly connected devices are properly initialized in the beginning.

Shutdown

When exiting out of a program, call the Shutdown function to completely release and close all connected devices. Cameras may fail to shut down completely if this function is not called.
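The initialize, update, and shutdown order described above can be sketched with a small scope guard. This is a minimal sketch, not the documented API: the functions in the `stub` namespace are hypothetical stand-ins named after Initialize, Update, and Shutdown so that the example builds without the Motive libraries. Their signatures and return types are assumptions; check MotiveAPI.h or the Function Reference for the real declarations.

```cpp
#include <cassert>
#include <iostream>

// Hypothetical stand-ins so this sketch builds without the Motive libraries.
// The real functions are declared in MotiveAPI.h; these signatures are assumed.
namespace stub {
    static int initCalls = 0;
    static int updateCalls = 0;
    static int shutdownCalls = 0;

    bool Initialize() { ++initCalls; return true; }
    bool Update() { ++updateCalls; return true; }
    void Shutdown() {
        assert(shutdownCalls < initCalls && "Shutdown without a matching Initialize");
        ++shutdownCalls;
    }
}

// Scope guard: initializes on construction and shuts down on destruction,
// so the devices are released even when the program returns early.
class ApiSession {
public:
    ApiSession() : ok_(stub::Initialize()) {
        if (ok_) {
            stub::Update();  // pick up newly connected devices after initialization
        }
    }
    ~ApiSession() {
        if (ok_) {
            stub::Shutdown();
        }
    }
    ApiSession(const ApiSession&) = delete;
    ApiSession& operator=(const ApiSession&) = delete;

    bool ok() const { return ok_; }

private:
    bool ok_;
};

// Runs one session and reports whether initialization succeeded.
bool runSession() {
    ApiSession session;
    if (!session.ok()) {
        std::cerr << "Initialization failed\n";
        return false;
    }
    // ... capture work would go here ...
    return true;
}  // session leaves scope here, which triggers the shutdown call
```

Tying the shutdown call to a destructor is one way to make sure it is never skipped on an early return; calling the shutdown function explicitly at every exit path works equally well.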

Set Up the Project

Motive Application Profile

The Motive application profile (MOTIVE) stores the following critical information:

All the trackable assets involved in a capture;

Software configurations including application settings and data streaming settings.

When using the API, we recommend first configuring the settings and defining the trackable assets in Motive, then exporting the application profile (MOTIVE file) and loading it by calling the LoadProfile function. This lets you adjust the settings for your needs in advance without having to configure individual settings through the API.

Camera Calibration

Cameras must be calibrated to track in 3D space. Because camera calibration is a complex process, it's easier to calibrate the camera system from Motive, export the camera calibration file (MCAL), then load the exported file into custom applications that are developed against the API.

Once the calibration data is loaded, the 3D tracking functions can be used. For detailed instructions on camera calibration in Motive, please read through the Calibration page.

Loading Calibration

In Motive, calibrate the camera system using the Calibration pane. Follow the Calibration page for details.

After the system has been calibrated, export the calibration file (MCAL) from Motive.

Using the API, import the calibration into your custom application by calling the LoadCalibration function.

When successfully loaded, you will be able to obtain 3D tracking data using the API functions.
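A setup routine that loads the exported files in sequence and stops at the first failure might be sketched as follows. The loaders are passed in as callables so that nothing here depends on the real signatures of LoadProfile or LoadCalibration; the idea that a loader takes a file path and returns true on success is an assumption made for this sketch, and the file names in the usage note are placeholders.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <iostream>
#include <string>
#include <vector>

// One setup step: a label for messages, the file to load, and the loader to call.
// In a real project the loader would wrap LoadProfile or LoadCalibration.
struct SetupStep {
    std::string label;
    std::string path;
    std::function<bool(const std::string&)> load;  // assumed shape: path in, success out
};

// Runs the steps in order and stops at the first failure.
// Returns the number of steps that completed successfully.
std::size_t runSetup(const std::vector<SetupStep>& steps) {
    std::size_t done = 0;
    for (const SetupStep& step : steps) {
        assert(step.load && "every step needs a loader");
        if (!step.load(step.path)) {
            std::cerr << "Failed to load " << step.label << ": " << step.path << '\n';
            break;
        }
        ++done;
    }
    return done;
}
```

A caller could build two steps, for example one labeled "profile" for an exported MOTIVE file and one labeled "calibration" for an exported MCAL file, and treat a return value smaller than the number of steps as a reason not to start tracking.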

Calibration Files: When using an exported calibration file, make sure it remains a valid calibration. The file is no longer valid if any aspect of the system setup has been altered after the calibration, including any quality degradation that can occur over time due to environmental factors. For this reason, we recommend re-calibrating the system routinely to maintain the best tracking quality.

Tracking Bars: camera calibration is not required for tracking 3D points.

Camera Settings

Connected cameras are accessible by index numbers, which are assigned in the order the cameras are initialized. Most API functions for controlling cameras require the camera's index value.

When processing all of the cameras, use the CameraCount function to obtain the total camera count and process each camera within a loop. To point to a specific camera, use the CameraID function to check and use the camera with its given index value.
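Looping over the cameras by index and picking out a specific one could look like the sketch below. The `stub` functions are hypothetical stand-ins named after CameraCount and CameraID; that CameraID takes an index and returns an integer identifier is an assumption, and the ID values are made up.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins so the sketch builds without the Motive libraries.
namespace stub {
    // Pretend three cameras are connected; the ID values are invented.
    static const std::vector<int> cameraIds = {101, 102, 103};

    int CameraCount() { return static_cast<int>(cameraIds.size()); }

    int CameraID(int index) {
        assert(index >= 0 && index < CameraCount());
        return cameraIds[static_cast<std::size_t>(index)];
    }
}

// Returns the index of the camera with the given ID, or -1 if none matches.
int findCameraIndex(int wantedId) {
    const int count = stub::CameraCount();
    for (int i = 0; i < count; ++i) {
        if (stub::CameraID(i) == wantedId) {
            return i;
        }
    }
    return -1;
}
```

The returned index is what the camera control functions would then be given, since most of them address a camera by its index value.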

This section covers Motive API functions to check and configure camera frame rate, camera video type, camera exposure, pixel brightness threshold, and IR illumination intensity.

Fetching Camera Settings

Camera settings are also located in the Devices pane of Motive. For more information on each of these camera settings, refer to the Devices pane page.

Configuring Settings

Use the SetCameraProperty function to configure properties outlined below.

CameraNodeCameraEnabled

A Boolean value to indicate whether the camera is enabled (true) or disabled (false).

Corresponds to the Enabled setting in Motive's Camera Properties.

CameraNodeReconstructionEnabled

A Boolean value to indicate whether the selected camera will contribute to the real-time reconstruction of 3D data. Set the value to true to enable or false to disable.

Corresponds to the Reconstruction setting in Motive's Camera Properties.

CameraNodeImagerPixelSize

Length and width (in pixels) of the camera imager.

Corresponds to the Pixel Dimensions value in Motive's Camera Properties.

CameraNodeCameraVideoMode

An integer value that sets the video mode for the selected camera.

Segment: 0

Grayscale: 1

Object: 2

Precision: 4

MJPEG: 6

Color Video: 9

Corresponds to the Video Mode setting in Motive's Camera Properties.

Please see the Camera Video Types page for more information on video modes.
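Because the video mode codes are not consecutive, it can help to name them in client code rather than pass bare integers around. The enum below is a hypothetical helper, not a type from MotiveAPI.h; only the integer values come from the list above.

```cpp
#include <cassert>
#include <string>

// Integer codes copied from the video mode list above.
// The enum itself is an illustration and is not part of the Motive API.
enum class VideoMode : int {
    Segment = 0,
    Grayscale = 1,
    Object = 2,
    Precision = 4,
    MJPEG = 6,
    ColorVideo = 9
};

// Converts a raw integer into a known mode.
// Returns false for values that are not in the list, such as 3 or 5.
bool videoModeFromInt(int value, VideoMode& out) {
    switch (value) {
        case 0: out = VideoMode::Segment;    return true;
        case 1: out = VideoMode::Grayscale;  return true;
        case 2: out = VideoMode::Object;     return true;
        case 4: out = VideoMode::Precision;  return true;
        case 6: out = VideoMode::MJPEG;      return true;
        case 9: out = VideoMode::ColorVideo; return true;
        default: return false;
    }
}

// Human-readable name for logging.
std::string videoModeName(VideoMode mode) {
    switch (mode) {
        case VideoMode::Segment:    return "Segment";
        case VideoMode::Grayscale:  return "Grayscale";
        case VideoMode::Object:     return "Object";
        case VideoMode::Precision:  return "Precision";
        case VideoMode::MJPEG:      return "MJPEG";
        case VideoMode::ColorVideo: return "Color Video";
    }
    assert(false && "unlisted video mode");
    return "Unknown";
}
```

The integer to pass when setting the property is then `static_cast<int>(mode)`.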

CameraNodeCameraExposure

An integer value that sets the exposure for the selected camera.

Corresponds to the Exposure setting in Motive's Camera Properties.

CameraNodeCameraThreshold

An integer value that sets the minimum brightness threshold for pixel detection for the selected camera. Valid threshold range is 0 - 255.

Corresponds to the Threshold setting in Motive's Camera Properties.

CameraNodeCameraLED

A Boolean value to indicate whether the camera's LED lights are enabled (true) or disabled (false).

Corresponds to the LED setting in Motive's Camera Properties.

CameraNodeCameraIRFilterEnabled

A Boolean value to indicate whether the camera's IR filter is enabled (true) or disabled (false).

Corresponds to the IR Filter setting in Motive's Camera Properties.

CameraNodeCameraGain

An integer value that sets the imager gain for the selected camera.

Corresponds to the Gain setting in Motive's Camera Properties.

CameraNodeCameraFrameRate

An integer value that sets the frame rate for the selected camera.

Applicable values vary based on camera models. Refer to the hardware specifications for the selected camera type to determine the frame rates at which it can record.

Corresponds to the Frame Rate setting in Motive's Camera Properties.

CameraNodeCameraMJPEGQuality

An integer value that sets the video quality level of MJPEG mode for the selected camera.

Minimum Quality: 0

Low Quality: 1

Standard Quality: 2

High Quality: 3

Corresponds to the MJPEG Quality setting in Motive's Camera Properties.

CameraNodeCameraMaximizePower

A Boolean value to indicate whether High Power mode is enabled (true) or disabled (false) for the Slim 3U and Flex 3 cameras only.

Corresponds to the Maximize Power setting in Motive's Camera Properties.

CameraNodeBitrate

An integer value that sets the bitrate for the selected camera.

Corresponds to the Bitrate setting in Motive's Camera Properties.

CameraNodePartition

An integer value that sets the partition for the selected camera.

Corresponds to the Partition setting in Motive's Camera Properties.

CameraNodeFirmwareVersion

A string value that displays the Firmware version of the selected camera.

Corresponds to the Firmware Version property in Motive's Camera Properties.

CameraNodeLogicVersion

A string value that displays the Logic version of the selected camera.

Corresponds to the Logic Version property in Motive's Camera Properties.

Other Settings

There are other camera settings, such as imager gain, that can be configured using the Motive API. Please refer to the Motive API: Function Reference page for descriptions on other functions.

Updating the Frames

To process multiple consecutive frames, call the Update or UpdateSingleFrame function repeatedly within a loop, for example a while loop that increments a frameCounter variable each time a frame is processed.
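A frame loop of this kind could be sketched as follows. `stub::Update` is a hypothetical stand-in that reports success with a bool and runs out of frames after a fixed number; the real function's return type and behavior when no frame is available are assumptions here and should be checked against the Function Reference.

```cpp
#include <cassert>
#include <iostream>

// Hypothetical stand-in so the sketch builds without the Motive libraries.
namespace stub {
    static int framesAvailable = 5;  // pretend five frames arrive, then the stream ends

    // Assumed behavior: returns true when a new frame was processed.
    bool Update() {
        if (framesAvailable <= 0) {
            return false;
        }
        --framesAvailable;
        return true;
    }
}

// Processes up to maxFrames frames and returns how many were handled.
int processFrames(int maxFrames) {
    assert(maxFrames >= 0);
    int frameCounter = 0;
    while (frameCounter < maxFrames) {
        if (!stub::Update()) {
            break;  // a live application would more likely wait briefly and retry
        }
        ++frameCounter;
        std::cout << "Processed frame " << frameCounter << '\n';
    }
    return frameCounter;
}
```

Marker and Rigid Body queries would go inside the loop body, after the update call succeeds, so that they read the data of the frame that was just processed.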

Update vs. UpdateSingleFrame

At the most fundamental level, these two functions both update the incoming camera frames, but may act differently in certain situations. When a client application stalls momentarily, it can get behind on updating the frames and the unprocessed frames may accumulate. In this situation, these two functions behave differently.

The Update function disregards accumulated frames and services only the most recent frame data. The client application will not receive the previously missed frames to process.

The UpdateSingleFrame function ensures only one frame is processed each time the function is called. If there are significant stalls in the program, using this function may result in accumulated processing latency.

In general, we recommend using the Update function. Only use UpdateSingleFrame in the case when you need to ensure the client application has access to every frame of tracking data and you are not able to call Update in a timely fashion.
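The difference between the two functions can be illustrated with a toy model of a frame backlog. Nothing below calls the Motive API: frame numbers stand in for frame data, and the assumption that UpdateSingleFrame services the oldest pending frame first is part of the model, not a documented guarantee.

```cpp
#include <cassert>
#include <deque>

// Toy model of frames that accumulated while the client application was stalled.
struct FrameBacklog {
    std::deque<int> pending;  // frame numbers, oldest at the front

    // Update-style: discard the backlog and service only the newest frame.
    // Returns the frame number serviced, or -1 if nothing was pending.
    int updateLatest() {
        if (pending.empty()) {
            return -1;
        }
        const int newest = pending.back();
        pending.clear();
        return newest;
    }

    // UpdateSingleFrame-style: service exactly one frame per call, oldest first.
    // Returns the frame number serviced, or -1 if nothing was pending.
    int updateSingle() {
        if (pending.empty()) {
            return -1;
        }
        const int oldest = pending.front();
        pending.pop_front();
        return oldest;
    }
};
```

With frames 10 through 13 pending, one `updateLatest` call services frame 13 and leaves nothing behind, while `updateSingle` needs four calls to catch up, which is where the accumulated latency comes from.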

3D Marker Tracking

After loading a valid camera calibration, you can use API functions to track retroreflective markers and get their 3D coordinates. Since marker data is obtained for each frame, always call the Update or the UpdateSingleFrame function each time newly captured frames are received.

You can use the MarkerCount function to obtain the total marker count and use this value within a loop to process all of the reconstructed markers.

Marker Index

In a given frame, each reconstructed marker is assigned a marker index number, which is used to point to a particular reconstruction within a frame. Marker index values may vary between different frames, but unique identifiers always remain the same.

Marker Position

To obtain the 3D position of a reconstructed marker, use the MarkerXYZ function.
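Collecting every reconstructed marker of the current frame might look like the sketch below. The `stub` functions are hypothetical stand-ins named after MarkerCount and MarkerXYZ; the reference-based parameter list and the bool return value are assumptions, and the marker positions are invented.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 {
    float x, y, z;
};

// Hypothetical stand-ins so the sketch builds without the Motive libraries.
namespace stub {
    static const std::vector<Point3> frameMarkers = {
        {0.0f, 1.0f, 0.0f}, {0.1f, 1.0f, 0.0f}, {0.0f, 1.1f, 0.2f}};

    int MarkerCount() { return static_cast<int>(frameMarkers.size()); }

    // Assumed shape: writes the position through references and
    // returns false when the index is out of range.
    bool MarkerXYZ(int index, float& x, float& y, float& z) {
        if (index < 0 || index >= MarkerCount()) {
            return false;
        }
        const Point3& m = frameMarkers[static_cast<std::size_t>(index)];
        x = m.x;
        y = m.y;
        z = m.z;
        return true;
    }
}

// Copies every reconstructed marker of the current frame into a vector.
std::vector<Point3> collectMarkers() {
    std::vector<Point3> markers;
    const int count = stub::MarkerCount();
    for (int i = 0; i < count; ++i) {
        Point3 p{};
        if (stub::MarkerXYZ(i, p.x, p.y, p.z)) {
            markers.push_back(p);
        }
    }
    return markers;
}
```

Because marker index values may change from frame to frame, the collected vector is only meaningful for the frame it was read from.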

Rigid Body Tracking

This section covers functions for tracking Rigid Bodies using the Motive API.

To track the 6 degrees of freedom (DoF) movement of an undeformable object, attach a set of reflective markers to it and use the markers to create a trackable Rigid Body asset.

Please read the Rigid Body Tracking page for detailed instructions on creating and working with Rigid Body assets in Motive.

There are two methods for obtaining Rigid Body assets when using the Motive API:

Import existing Rigid Body data.

Define new Rigid Bodies using the CreateRigidBody function.

Once Rigid Body assets are defined, Rigid Body tracking functions can be used to obtain the 6 DoF tracking data.

Importing Rigid Body Assets

Let's go through importing RB assets into a client application using the API. In Motive, Rigid Body assets can be created from three or more reconstructed markers, and all of the created assets can be exported to an application profile (MOTIVE). Each Rigid Body asset saves the marker arrangement from when it was first created. As long as the marker locations remain the same, you can use the saved asset definitions to track the respective objects.

Exporting all RB assets from Motive:

Exporting application profile: File → Save Profile

Exporting individual RB asset:

Exporting Rigid Body file (profile): Under the Assets pane, right-click on an RB asset and click Export Rigid Body

When using the API, you can load exported assets by calling the LoadProfile function for application profiles, or the LoadRigidBodies or AddRigidBodies function for exported Rigid Body files. When importing profiles, the LoadRigidBodies function entirely replaces the existing Rigid Bodies with the list of assets from the loaded profile. AddRigidBodies, on the other hand, adds the loaded assets to the existing list while keeping the existing assets. Once Rigid Body assets are imported into the application, the API functions can be used to configure and access them.
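The replace-versus-append behavior can be pictured with plain containers. The sketch below does not call the Motive API; asset names stand in for full asset definitions, and the two helper functions are hypothetical models of what LoadRigidBodies and AddRigidBodies are described as doing.

```cpp
#include <cassert>
#include <string>
#include <vector>

using AssetList = std::vector<std::string>;  // asset names stand in for full definitions

// LoadRigidBodies-style: the loaded list replaces whatever was there before.
void loadReplacing(AssetList& current, const AssetList& loaded) {
    current = loaded;
}

// AddRigidBodies-style: loaded assets are appended and existing ones are kept.
void addAppending(AssetList& current, const AssetList& loaded) {
    assert(&current != &loaded && "source and destination must be different lists");
    current.insert(current.end(), loaded.begin(), loaded.end());
}
```

Starting from two existing assets and loading one more, the append model ends with three assets and the replace model ends with one.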

Creating New Rigid Body Assets

Rigid Body assets can be defined directly using the API. The CreateRigidBody function defines a new Rigid Body from given 3D coordinates. This function takes an array of float values that represent the x/y/z coordinates of multiple markers with respect to the Rigid Body pivot point. The float array for multiple markers should be listed as follows: {x1, y1, z1, x2, y2, z2, …, xN, yN, zN}. You can manually enter the coordinate values or use the MarkerXYZ function to input the 3D coordinates of tracked markers.

When using the MarkerXYZ function, keep in mind that the marker locations passed to CreateRigidBody are taken with respect to the RB pivot point. To set the pivot point at the center of the created Rigid Body, first compute the pivot point location, then subtract its coordinates from the 3D coordinates of the markers obtained by the MarkerXYZ function. This process is shown in the following example.

Example: Creating RB Assets
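The following is a minimal sketch of the coordinate preparation only, assuming the pivot is placed at the centroid (mean position) of the markers. It builds the {x1, y1, z1, …, xN, yN, zN} array relative to that pivot; the call to CreateRigidBody itself is left out because its exact parameter list is not given in this guide. The `Point3` type and both helper functions are hypothetical names introduced for this illustration.

```cpp
#include <cassert>
#include <vector>

struct Point3 {
    float x, y, z;
};

// Pivot placed at the centroid (mean position) of the markers.
Point3 centroid(const std::vector<Point3>& markers) {
    assert(!markers.empty());
    Point3 sum{0.0f, 0.0f, 0.0f};
    for (const Point3& m : markers) {
        sum.x += m.x;
        sum.y += m.y;
        sum.z += m.z;
    }
    const float n = static_cast<float>(markers.size());
    return Point3{sum.x / n, sum.y / n, sum.z / n};
}

// Builds {x1, y1, z1, ..., xN, yN, zN} with every marker expressed
// relative to the pivot point instead of the global origin.
std::vector<float> pivotRelativeCoords(const std::vector<Point3>& markers) {
    assert(markers.size() >= 3 && "a Rigid Body needs three or more markers");
    const Point3 pivot = centroid(markers);
    std::vector<float> coords;
    coords.reserve(markers.size() * 3);
    for (const Point3& m : markers) {
        coords.push_back(m.x - pivot.x);
        coords.push_back(m.y - pivot.y);
        coords.push_back(m.z - pivot.z);
    }
    return coords;
}
```

The marker positions would typically come from MarkerXYZ for the markers that belong to the object, and the resulting array, together with the marker count, is what would be handed to CreateRigidBody.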

Rigid Body 6 DoF Tracking Data

6 DoF Rigid Body tracking data can be obtained using the RigidBodyTransform function. Using this function, you can save the 3D position and orientation of a Rigid Body into declared variables. The saved position values indicate the location of the Rigid Body pivot point, expressed with respect to the global coordinate axes. The orientation is saved in both Euler and quaternion representations.

Example: RB Tracking Data
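Reading a pose into declared variables could be sketched as below. `stub::RigidBodyTransform` is a hypothetical stand-in that always reports one Rigid Body with an identity rotation; the parameter order, the use of references, and the bool return value are assumptions, so consult the Function Reference for the real declaration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in so the sketch builds without the Motive libraries.
namespace stub {
    bool RigidBodyTransform(int rbIndex,
                            float& x, float& y, float& z,
                            float& qx, float& qy, float& qz, float& qw,
                            float& yaw, float& pitch, float& roll) {
        if (rbIndex != 0) {
            return false;  // only one pretend Rigid Body exists
        }
        x = 0.5f;  y = 1.0f;  z = -0.25f;            // pivot position, global coordinates
        qx = 0.0f; qy = 0.0f; qz = 0.0f; qw = 1.0f;  // identity rotation as a quaternion
        yaw = 0.0f; pitch = 0.0f; roll = 0.0f;       // the same rotation as Euler angles
        return true;
    }
}

// Position plus both orientation representations for one Rigid Body.
struct RigidBodyPose {
    float x, y, z;
    float qx, qy, qz, qw;
    float yaw, pitch, roll;
};

// Fills pose for the given Rigid Body index; returns false if it is unavailable.
bool readPose(int rbIndex, RigidBodyPose& pose) {
    return stub::RigidBodyTransform(rbIndex,
                                    pose.x, pose.y, pose.z,
                                    pose.qx, pose.qy, pose.qz, pose.qw,
                                    pose.yaw, pose.pitch, pose.roll);
}

// Length of the quaternion; a valid rotation quaternion has a length close to 1.
float quaternionNorm(const RigidBodyPose& p) {
    return std::sqrt(p.qx * p.qx + p.qy * p.qy + p.qz * p.qz + p.qw * p.qw);
}
```

Grouping the ten output values in one struct keeps the call site short; checking the quaternion length is an optional sanity test before the orientation is used downstream.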

Rigid Body Properties

In Motive, Rigid Body assets have Rigid Body properties assigned to each of them. Depending on how these properties are configured, display and tracking behavior of corresponding Rigid Bodies may vary.

For detailed information on individual Rigid Body settings, read through the Properties: Rigid Body page.

Data Streaming

Once the API is successfully initialized, there are two methods of data streaming available.

Stream over NatNet

The StreamNP function enables/disables data streaming via the NatNet SDK. This client/server networking SDK is designed for sending and receiving OptiTrack data across networks, and can be used to stream tracking data from the API to client applications from various platforms.

Once the data streaming is enabled, connect the NatNet client application to the server IP address to start receiving the data.

The StreamNP function is equivalent to Broadcast Frame Data from the Data Streaming pane in Motive.

Stream over VRPN

Mocap data can be livestreamed through the Virtual Reality Peripheral Network (VRPN) using the StreamVRPN function.

Please see the VRPN Sample page for information on working with the OptiTrack VRPN sample.

Data Streaming Settings

The Motive API does not support data streaming configuration directly from the API. These properties must be set in Motive.

In Motive, configure the streaming server IP address and other data streaming settings. See the Data Streaming page for more information.

Export the Motive profile (MOTIVE file) that contains the desired configuration.

Load the exported profile through the API.
