
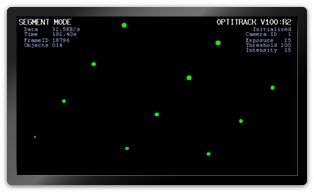

The Camera SDK provides controls for hardware (cameras and hubs) and access to the most fundamental frame data from each camera, such as grayscale images and 2D object information. Using the Camera SDK, you can develop your own image processing applications that utilize the capabilities of OptiTrack cameras. The Camera SDK is a free tool that can be downloaded from our website.

Note: 3D tracking features are not directly supported by the Camera SDK; they are available through the Motive API. For more information on the Camera SDK, visit our website.

The Camera SDK is compatible only with the same released version of Motive. For instance, if you are using Motive 3.1.1, download and use the Camera SDK version 3.1.1.

Camera hardware controls

Receiving frame data and 2D object data from each camera

Device synchronization controls

Sample applications with source code

After you install the Camera SDK, a Camera SDK folder is created in your OptiTrack installation directory. This folder can also be accessed from the Windows Start menu → OptiTrack → Camera SDK:

(\OptiTrack\CameraSDK\bin) Includes an executable sample application, visualtest.exe, which was developed using the Camera SDK. This sample application allows you to configure camera settings and monitor captured 2D frames from each camera.

(\OptiTrack\CameraSDK\lib) Includes the native C++ library for building applications.

(\OptiTrack\CameraSDK\include) Includes header files for the SDK. Usage of each class is commented within the header files.

(\OptiTrack\CameraSDK\doc) Includes topic specific instructions on how to utilize the Camera SDK.

(\OptiTrack\CameraSDK\samples) Includes sample projects that employ the Camera SDK. Source code for these applications is included for reference.

To attach cCameraModule instances to a camera object, or detach them, using the Motive API, call the following functions:

AttachTo: attaches a module.

RemoveFrom: detaches a module.

An introduction to the Motive API.

Important Note:

The Motive API documentation is being updated for 3.1 and some of the functions may not yet be in the documentation. Please refer to the MotiveAPI header file for any information that is not included in the online user guide.

The Motive API allows access to and control of the backend software platform for Motive via a C/C++ interface, performing Motive functions without the graphical user interface on top. With the API, users can employ several features of Motive in custom applications, such as accessing 2D camera images, marker centroid data, unlabeled 3D points, labeled markers, and Rigid Body tracking data.

All of the required components for utilizing the API are included in the Motive install directory when the application is installed. The key files for using the Motive API are listed in the section below.

Supported features:

Camera control

Frame control

Point Cloud reconstruction engine control

Ability to obtain and use reconstructed 3D Marker data

Rigid body tracking

Query results

Ability to stream results over the network

Not supported:

In-depth hardware control (e.g., hardware sync customization). Use the Camera SDK for this.

Direct support for data recording and playback.

Control over peripheral devices (Force plates and NI-DAQ).

Functionality for Skeleton assets.

Requirements:

The Motive API is supported on Windows only.

A valid Motive license and a corresponding hardware key are required.

When Motive is installed, all of the required components of the Motive API are placed in folders within the installation directory. By default, this directory is C:\Program Files\OptiTrack\Motive.

The following section describes the key files of the API and where each is located.

[Motive Install Directory]\inc\MotiveAPI.h

The header file MotiveAPI.h contains declarations for functions and classes of the API. Necessary functions and classes are thoroughly commented within this file. This header file must be included in the source code for utilizing the Motive API functions.

[Motive Install Directory]\lib

This folder includes C++ 64-bit library files (.lib and .dll) for employing the Motive API.

The library is compiled using Visual Studio 2019 with the dynamic run-time library (/MD), so make sure the client application uses the same settings.

[Motive Install Directory]\Samples\MotiveAPI\

A Visual Studio solution (samples.sln) with sample projects for accessing Motive functionality such as cameras, markers, and Rigid Body tracking information. Refer to this folder to see how the API can be used.

[Motive Install Directory]\plugins

The plugins\platforms folder contains qwindows.dll, which is required for running applications that use the Motive API. Copy this folder into the directory that contains your executable.

[Motive Install Directory]

Third-party DLL libraries are required for all applications built against the API. Please see Motive API: Quick Start Guide for more information.

This guide introduces some of the commonly used functions of the Motive API.

A reference guide for Motive API functions, including code samples.

Many of the Motive API functions return their results as integer values defined as an eResult. This value expresses the outcome of the call: eResult values indicate whether the function operated successfully and, if not, provide detailed information on the type of error that occurred.

When you get an eResult output from a function, you can use the MapToResultString function to get the plain-text message that corresponds to the result.

Camera video types, or image processing modes, are expressed as integer values as well. These values are listed below and are also commented within the header file.

eResult Values

Camera Video Type Definitions

This page provides function and class references of the NatNet SDK library.

The NatNetClient class (or NatNetClientML from the managed assembly) is the key object of the SDK. An instance of this client class allows an application to connect to a server application and query data. API helper functions are provided with the C++ library for more convenient use of the SDK tools. For additional information, refer to the provided header files (native) or reference the NatNetML.dll file (managed).

Note:

NatNet SDK is backwards compatible.

Deprecated methods from previous SDK versions are not documented on this page, and their use in new applications is discouraged. They are subject to removal in a future version of the SDK. Refer to the header files for complete descriptions.

The NatNetServer class has been deprecated for versions 3.0 and above.

Note that some parts of the managed .NET assembly may be slightly different from the native library reference provided here. Refer to the NatNetML.dll file using an object browser for detailed information.

Most of the NatNet SDK functions return their operation results as an integer type named ErrorType, an enumerator that describes the result as follows:

ErrorCode_OK (0): Operation successful.

ErrorCode_Internal (1): Suspected internal error. Contact support.

ErrorCode_External (2): External error. Make sure correct parameters are used for input arguments when calling the methods.

ErrorCode_Network (3): The error occurred on the network side.

ErrorCode_Other (4): An unlisted error is conflicting with the method call.

ErrorCode_InvalidArgument (5): Invalid input arguments were provided.

ErrorCode_InvalidOperation (6): Invalid operation.
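To surface these codes in logs, a small lookup can help. The sketch below is a local mirror of the values listed above, not the SDK's own declaration; `NatNetError` and `describe_error` are hypothetical names, and in a real project you would switch on the enumeration from the NatNet headers instead.

```cpp
#include <string>

// Local mirror of the NatNet result codes listed above.
// Hypothetical type: in a real project, use the enumeration from the NatNet headers.
enum class NatNetError : int {
    OK = 0,
    Internal = 1,
    External = 2,
    Network = 3,
    Other = 4,
    InvalidArgument = 5,
    InvalidOperation = 6,
};

// Returns a short human-readable description for a raw integer result code.
std::string describe_error(int code) {
    switch (static_cast<NatNetError>(code)) {
        case NatNetError::OK:               return "Operation successful";
        case NatNetError::Internal:         return "Internal error";
        case NatNetError::External:         return "External error (check input arguments)";
        case NatNetError::Network:          return "Network error";
        case NatNetError::Other:            return "Other error";
        case NatNetError::InvalidArgument:  return "Invalid argument";
        case NatNetError::InvalidOperation: return "Invalid operation";
    }
    // Any value not listed above.
    return "Unknown result code " + std::to_string(code);
}
```

Only the comparison against 0 (ErrorCode_OK) is needed to detect success; the text is purely diagnostic.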

The NatNetClient class is the main component of the NatNet SDK. Using an instance of the NatNetClient class, you can establish a network connection with a server application (e.g. Motive) and query data descriptions, tracking data, and send/receive remote commands. For detailed declarations, refer to the NatNetClient.h header file included in the SDK.

NatNetClient::NatNetClient()

Constructor: Creates a new instance of a NatNetClient class. Defaults to multicast connection if no input is given.

NatNetClient::NatNetClient(iConnectionType)

Constructor: Creates a new instance of a NatNet Client using the specified connection protocol; either unicast or multicast.

Input: iConnectionType: (0 = Multicast, 1 = Unicast).

This approach is being deprecated. The NatNetClient class now determines the connection type through sNatNetClientConnectParams input when calling the NatNetClient::Connect method.

NatNetClient::~NatNetClient()

Destructor: Destroys the NatNetClient instance.

Description

This method connects an instantiated NatNetClient object to a server application (e.g. Motive) at the inputted IP address.

Input Parameters:

Connection parameters object.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

sNatNetClientConnectParams:

Declared under the NatNetTypes.h file.

Local address. IP address of the local machine where the client application is running.

Server address. IP address of the machine where the server application is running.

(Optional) Command port. Defaults to 1510.

(Optional) Data port. Defaults to 1511.

(Optional) Multicast IP address. Defaults to 239.255.42.99:1511.

Description

Calling this method disconnects the client from the Motive server application.

Input Parameters:

None

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

This method sets a frame handler function and creates a new thread for receiving and processing each frame of capture data.

Managed Assembly: Use OnFrameReady event type to add a function delegate.

Input Parameters:

pfnDataCallback: A NatNetFrameReceivedCallback function. NatNetFrameReceivedCallback is a pointer type for a frame handler function that processes each incoming frame of tracking data. The inputted function must match the following type definition:

typedef void (NATNET_CALLCONV* NatNetFrameReceivedCallback)(sFrameOfMocapData* pFrameOfData, void* pUserData);

pUserData: User-definable data passed back to the callback with each frame (e.g., the client object).

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).
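The pUserData pointer is the usual way for a C-style callback to reach application state. The sketch below shows that pattern without the SDK: `FakeFrame`, `FrameCallback`, `FrameCollector`, `OnFrame`, and `DeliverFrame` are hypothetical stand-ins (the real callback receives an sFrameOfMocapData and uses the NATNET_CALLCONV calling convention). It is single-threaded for clarity; since the SDK runs the real handler on the thread it creates for frame processing, shared state in a real application would need synchronization.

```cpp
#include <vector>

// Hypothetical stand-in for sFrameOfMocapData; the real struct has many more fields.
struct FakeFrame {
    int frameNumber;
};

// Same shape as NatNetFrameReceivedCallback (calling-convention macro omitted).
typedef void (*FrameCallback)(FakeFrame* pFrameOfData, void* pUserData);

// Application-side state that the callback needs to reach.
struct FrameCollector {
    std::vector<int> received;
};

// Frame handler: recovers the application object from the opaque user-data pointer.
void OnFrame(FakeFrame* pFrameOfData, void* pUserData) {
    auto* collector = static_cast<FrameCollector*>(pUserData);
    collector->received.push_back(pFrameOfData->frameNumber);
}

// Hypothetical dispatcher mimicking what the client's receive thread does per frame.
void DeliverFrame(FrameCallback cb, FakeFrame& frame, void* pUserData) {
    if (cb != nullptr) {
        cb(&frame, pUserData);
    }
}
```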

Description

Sends a NatNet command to the NatNet server and waits for a response. See NatNet: Remote Requests/Commands for more details.

Input Parameters:

szRequest: NatNet command.

tries: Number of attempts to send the command. Default: 10.

timeout: Number of milliseconds to wait for a response from the server before the call times out. Default: 20.

ppServerResponse: Application defined response.

pResponseSize: Number of bytes in the response.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).
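The tries and timeout parameters bound how long the call can block. Assuming each attempt waits the full timeout before the next one is sent (an assumption about how the retries compose, not a statement about NatNet internals), an unresponsive server blocks the caller for roughly 10 × 20 ms = 200 ms with the defaults. The hypothetical helpers below model that behavior; `attempt` stands in for sending the command and waiting for a reply.

```cpp
#include <functional>

// Hypothetical model of the tries/timeout semantics: each attempt waits up to
// timeoutMs for a reply, and the call gives up after `tries` attempts.
// Returns the 1-based attempt that succeeded, or 0 if every attempt timed out.
int SendWithRetries(const std::function<bool(int timeoutMs)>& attempt,
                    int tries = 10, int timeoutMs = 20) {
    for (int i = 1; i <= tries; ++i) {
        if (attempt(timeoutMs)) {
            return i;
        }
    }
    return 0;
}

// Upper bound on blocking time when the server never answers (ignores send overhead).
int WorstCaseWaitMs(int tries = 10, int timeoutMs = 20) {
    return tries * timeoutMs;
}
```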

Description

Requests a description of the current NatNet server that a client object is connected to and saves it into an instance of sServerDescription. This call blocks until the server responds or the request times out.

Input Parameters:

Declared sServerDescription object.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

Requests a list of dataset descriptions of the capture session and provides them through an sDataDescriptions object.

Input Parameters:

Pointer to an sDataDescriptions pointer which receives the address of the client's internal sDataDescriptions object. This pointer is valid until the client is destroyed or until the next call to GetDataDescriptions.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

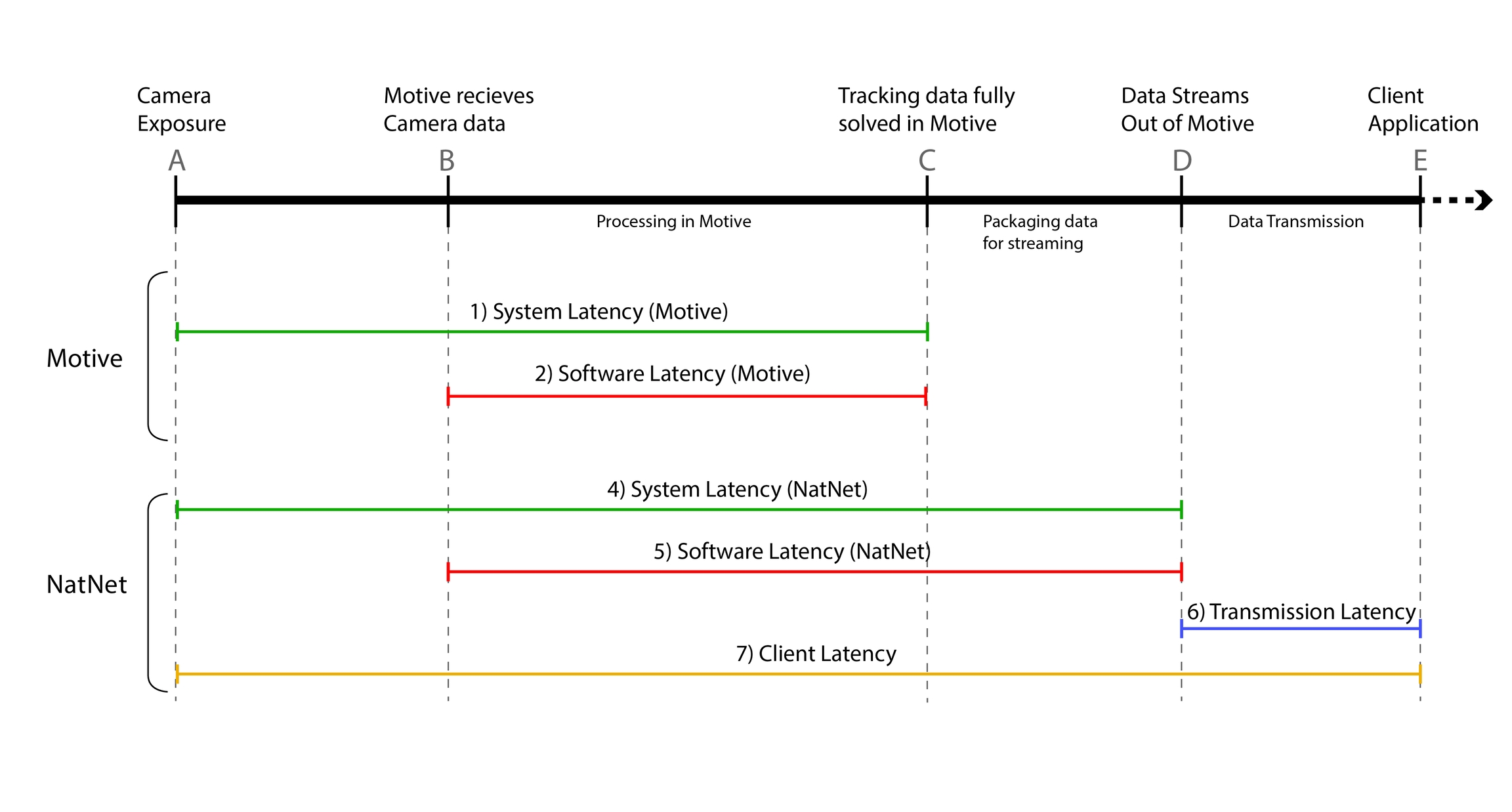

This method calculates and returns the time difference between a specific event in the processing pipeline and when the NatNet client application receives the tracking data. For example, if sFrameOfMocapData::CameraMidExposureTimestamp is passed in, it returns the latency from the camera exposure to when the tracking data is received. For more information on how it is used, read through the Latency Measurements page.

Input Parameters:

(uint64_t) A timestamp value from a sFrameOfMocapData struct.

Returns:

(double) The time, in seconds, elapsed since the provided timestamp.
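Conceptually, the returned latency is the elapsed host-clock time between the chosen pipeline timestamp and the moment of the call. The sketch below models only that arithmetic and assumes timestamps are raw tick counts from a single clock with a known tick frequency; `TicksToSeconds` is a hypothetical helper, and in real code the SDK method performs the conversion for you.

```cpp
#include <cstdint>

// Hypothetical helper: converts the difference between two tick counts from the
// same clock into seconds, given the clock frequency in ticks per second.
// Assumes `later` >= `earlier`.
double TicksToSeconds(uint64_t earlier, uint64_t later, uint64_t ticksPerSecond) {
    return static_cast<double>(later - earlier) / static_cast<double>(ticksPerSecond);
}
```

For example, a difference of 1,000,000 ticks on a 10 MHz clock corresponds to 0.1 s.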

Once the NatNet SDK library has been imported into a client application, the following helper functions can be used.

These functions are available ONLY for C++ applications.

Description

This function gets the version (#.#.#.#) of the NatNet SDK and saves it into an array.

Input Parameters:

An unsigned char array of length 4.

Returns:

Void
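For logging, the four version bytes can be joined into the #.#.#.# form. `FormatVersion` is a hypothetical helper; the array would first be filled by the SDK's version function.

```cpp
#include <string>

// Hypothetical helper: formats a 4-byte version array as "#.#.#.#".
// Each byte is cast to int so it prints as a number, not a character.
std::string FormatVersion(const unsigned char ver[4]) {
    return std::to_string(static_cast<int>(ver[0])) + "." +
           std::to_string(static_cast<int>(ver[1])) + "." +
           std::to_string(static_cast<int>(ver[2])) + "." +
           std::to_string(static_cast<int>(ver[3]));
}
```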

Description

This function assigns a callback handler function for receiving and reporting error/debug messages.

Input Parameters:

pfnLogCallback: NatNetLogCallback function. NatNetLogCallback is a pointer type for a callback function that handles the log messages sent from the server application. The linked function must match the following type definition:

typedef void (NATNET_CALLCONV* NatNetLogCallback)(Verbosity level, const char* message);

Returns:

Void

Description

Takes an ID of a data set (a marker, a Rigid Body, a Skeleton, or a force plate), and decodes its model ID and member ID into the provided integer variables. For example, ID of a Skeleton bone segment will be decoded into its model ID (Skeleton) and Rigid Body ID (bone). See NatNet: Data Types.

Input Parameters:

An ID value for a respective data set (sRigidBodyData, sSkeletonData, sMarker, or sForcePlateData) from a sFrameOfMocapData packet.

Pointers to declared integer variables for saving the entity ID and the member ID (e.g., Skeleton ID and its bone Rigid Body ID).

Returns:

Void
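A minimal sketch of what the decoding amounts to, assuming the entity (model) ID occupies the high 16 bits of the composite ID and the member ID the low 16 bits. That bit layout is an assumption for illustration; `DecodeCompositeId` and `EncodeCompositeId` are hypothetical, and real code should call the SDK helper rather than rely on the layout.

```cpp
#include <cstdint>

// Hypothetical decoder for illustration only. ASSUMES the entity (model) ID is in
// the high 16 bits of the composite ID and the member ID is in the low 16 bits.
void DecodeCompositeId(int32_t compositeId, int32_t* pOutEntityId, int32_t* pOutMemberId) {
    const uint32_t bits = static_cast<uint32_t>(compositeId);
    *pOutEntityId = static_cast<int32_t>(bits >> 16);
    *pOutMemberId = static_cast<int32_t>(bits & 0xFFFFu);
}

// Inverse of the above under the same assumption, handy for building test inputs.
int32_t EncodeCompositeId(int32_t entityId, int32_t memberId) {
    return static_cast<int32_t>((static_cast<uint32_t>(entityId) << 16) |
                                (static_cast<uint32_t>(memberId) & 0xFFFFu));
}
```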

Description

Helper function to decode OptiTrack timecode data into individual components.

Input Parameters:

Timecode integer from a packet of sFrameOfMocapData. (timecode)

TimecodeSubframe integer from a packet of sFrameOfMocapData. (timecodeSubframe)

Pointers to declared integer variables for saving the hours (pOutHour), minutes (pOutMinute), seconds (pOutSecond), frames (pOutFrame), and subframes (pOutSubframe) values.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).
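The sketch below illustrates the idea of unpacking a timecode integer, assuming hours, minutes, seconds, and frames are packed one per byte with hours in the highest byte, and that the subframe is carried in the separate timecodeSubframe value. The packing and the `Timecode`/`DecodeTimecodeSketch` names are assumptions; use the SDK helper in real code.

```cpp
#include <cstdint>

// Decoded timecode components (hypothetical type).
struct Timecode {
    int hour, minute, second, frame, subframe;
};

// Hypothetical decoder for illustration only. ASSUMES one byte per component,
// ordered hours (highest byte), minutes, seconds, frames (lowest byte).
Timecode DecodeTimecodeSketch(uint32_t timecode, uint32_t timecodeSubframe) {
    Timecode tc;
    tc.hour     = static_cast<int>((timecode >> 24) & 0xFFu);
    tc.minute   = static_cast<int>((timecode >> 16) & 0xFFu);
    tc.second   = static_cast<int>((timecode >> 8) & 0xFFu);
    tc.frame    = static_cast<int>(timecode & 0xFFu);
    tc.subframe = static_cast<int>(timecodeSubframe);
    return tc;
}
```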

Description

Helper function to parse OptiTrack timecode into a user-friendly string in the form hh:mm:ss:ff:yy (hours, minutes, seconds, frames, and subframes).

Input Parameters:

timecode: Timecode integer from a packet of sFrameOfMocapData. (timecode)

timecodeSubframe: TimecodeSubframe integer from a packet of sFrameOfMocapData. (timecodeSubframe)

outBuffer: Declared char array for saving the output.

outBufferSize: size of the character array buffer (outBuffer).

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

This helper function performs a deep copy of frame data from pSrc into pDst. Some members of pDst will be dynamically allocated; use NatNet_FreeFrame( pDst ) to clean them up.

Input Parameters:

Pointers to two sFrameOfMocapData variables: the source to copy from (pSrc) and the destination to copy to (pDst).

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

Frees the dynamically allocated members of a frame copy created using NatNet_CopyFrame function. Note that the object pointed to by pFrame itself is NOT de-allocated, but only its nested members which were dynamically allocated are freed.

Input Parameters:

sFrameOfMocapData that has been copied using the NatNet_CopyFrame function.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Do not call this on any pFrame data that was not the destination of a call to NatNet_CopyFrame.
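One reason to copy a frame might be to keep it after the callback that delivered it has returned, for example to hand it to another thread. The ownership rules above can be modeled with a simplified struct: `SimpleFrame`, `CopySimpleFrame`, and `FreeSimpleFrame` are hypothetical and only mirror the described behavior (a deep copy of nested members, and a free step that releases the nested allocations but not the object itself).

```cpp
#include <cstring>

// Hypothetical, simplified frame: one plain member plus one dynamically sized array.
// It models only the ownership rules described above; it is not sFrameOfMocapData.
struct SimpleFrame {
    int frameNumber = 0;
    int markerCount = 0;
    float* markerXYZ = nullptr;  // markerCount * 3 floats
};

// Deep copy: nested arrays get their own allocation in dst.
// dst must not already own a copy (free it first).
void CopySimpleFrame(const SimpleFrame& src, SimpleFrame& dst) {
    dst.frameNumber = src.frameNumber;
    dst.markerCount = src.markerCount;
    dst.markerXYZ = nullptr;
    if (src.markerCount > 0 && src.markerXYZ != nullptr) {
        dst.markerXYZ = new float[src.markerCount * 3];
        std::memcpy(dst.markerXYZ, src.markerXYZ, sizeof(float) * src.markerCount * 3);
    }
}

// Frees only the nested allocation made by CopySimpleFrame; the SimpleFrame
// object itself is owned by the caller and is not deleted here.
void FreeSimpleFrame(SimpleFrame& frame) {
    delete[] frame.markerXYZ;
    frame.markerXYZ = nullptr;
    frame.markerCount = 0;
}
```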

Description

Deallocates data descriptions pDesc and all of its members; after this call, this object is no longer valid.

Input Parameters:

Data descriptions (sDataDescriptions).

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

Sends broadcast messages to discover active NatNet servers and blocks for a specified time to gather responses.

Input Parameters:

outServers: An array of length equal to the input value of pInOutNumServers. This array will receive the details of all servers discovered by the broadcast.

pInOutNumServers: A pointer to an integer containing the length of the array. After this function returns, the integer is modified to contain the total number of servers that responded to the broadcast inquiry. If the modified number is larger than the original number passed to the function, there was insufficient space for those additional servers.

timeoutMillisec: Amount of time, in milliseconds, to wait for server responses to the broadcast before returning.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).
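The in/out count parameter can be modeled as follows. `ServerInfo` and `FillDiscoveredServers` are hypothetical, and the addresses used to exercise it are made up; the point is how a caller detects that its array was too small.

```cpp
#include <string>
#include <vector>

// Hypothetical server record; the SDK uses its own description struct.
struct ServerInfo {
    std::string address;
};

// Models the pInOutNumServers convention: on entry *pInOutNum is the capacity of
// outServers; on exit it holds the total number of servers found, which may
// exceed the capacity (only the first `capacity` entries are written).
void FillDiscoveredServers(const std::vector<ServerInfo>& found,
                           ServerInfo* outServers, int* pInOutNum) {
    const int capacity = *pInOutNum;
    const int total = static_cast<int>(found.size());
    for (int i = 0; i < total && i < capacity; ++i) {
        outServers[i] = found[i];
    }
    *pInOutNum = total;
}
```

If the returned count is larger than the capacity that was passed in, the caller can allocate a larger array and run discovery again.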

Description

Begin sending periodic broadcast messages to discover active NatNet servers in the background.

Input Parameters:

pOutDiscovery: Out pointer that receives a handle representing the asynchronous discovery process. Pass the returned handle to the NatNet_FreeAsyncServerDiscovery method later for cleanup.

pfnCallback: A NatNetServerDiscoveryCallback function pointer that is invoked once for every new server discovered by the asynchronous search. The callback also receives the provided pUserContext argument.

pUserContext: User-specified context data to be passed to the provided pfnCallback when invoked.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).

Description

Begin sending periodic broadcast messages to continuously search and discover active NatNet servers in the background.

Input Parameters:

pOutDiscovery: Out pointer that receives a handle representing the asynchronous discovery process. Pass the returned handle to the NatNet_FreeAsyncServerDiscovery method later for cleanup.

pfnCallback: A NatNetServerDiscoveryCallback function pointer that is invoked once for every new server discovered by the asynchronous search. The callback also receives the provided pUserContext argument.

pUserContext: User-specified context data to be passed to the provided pfnCallback when invoked.

Returns:

ErrorCode. On success, it returns ErrorCode_OK (0).
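The "once for every new server" rule means repeated broadcast replies from an already-known server should not trigger the callback again. The toy model below shows that deduplication together with the pUserContext pattern. `DiscoveryCallback`, `DiscoveryModel`, and `CollectServer` are hypothetical; the SDK's NatNetServerDiscoveryCallback has its own signature.

```cpp
#include <set>
#include <string>
#include <vector>

// Hypothetical callback type; the SDK's NatNetServerDiscoveryCallback differs.
typedef void (*DiscoveryCallback)(const std::string& serverAddress, void* pUserContext);

// Models the "invoke once per new server" rule: repeated replies from an
// already-known server do not trigger the callback again.
class DiscoveryModel {
public:
    DiscoveryModel(DiscoveryCallback cb, void* userContext) : cb_(cb), ctx_(userContext) {}

    // Called for every broadcast reply received in the background.
    void OnReply(const std::string& serverAddress) {
        if (seen_.insert(serverAddress).second && cb_ != nullptr) {
            cb_(serverAddress, ctx_);
        }
    }

private:
    DiscoveryCallback cb_;
    void* ctx_;
    std::set<std::string> seen_;
};

// Example callback: appends each newly discovered address to the vector passed as context.
void CollectServer(const std::string& serverAddress, void* pUserContext) {
    static_cast<std::vector<std::string>*>(pUserContext)->push_back(serverAddress);
}
```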

This page guides users migrating their NatNet projects to the NatNet 3.0 libraries.

NatNet 3.0 no longer allows static linking of the libraries. If a NatNet project was utilizing NatNetLibStatic.lib to accomplish static linking, you will need to make changes to the project configurations, so that it links dynamically instead.

This is only an example. Required configuration changes may differ depending on how the projects were set up.

Visual Studio Example

Project Settings → Configuration Properties → C/C++ → Preprocessor Definitions: Add "NATNETLIB_IMPORTS"

Project Settings → Configuration Properties → Linker → Input → Additional Dependencies: Change "NatNetLibStatic.lib" to "NatNetLib.lib"

Project Settings → Configuration Properties → Linker → General: Make sure the additional library directories include the directory where the library files are located.

In NatNet 3.0, the structure of Rigid Body descriptions and Rigid Body frame data has been slightly modified. The sRigidBodyData::Markers member has been removed; instead, the Rigid Body description (sRigidBodyDescription) now includes the expected Rigid Body marker positions with respect to the corresponding Rigid Body's orientation axes.

Per-frame positions of Rigid Body markers have to be derived using the Rigid Body tracking data and the expected Rigid Body markers positions included in the description packet.
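A minimal sketch of that derivation, assuming the description's marker offsets are expressed in the Rigid Body's local frame and the frame data provides a position plus a unit orientation quaternion (qx, qy, qz, qw). Each marker's world position is then the body position plus the offset rotated by the quaternion. `Vec3`, `Quat`, `Rotate`, and `MarkerWorldPosition` are hypothetical types and helpers, not SDK structs.

```cpp
// Minimal 3D vector and unit quaternion types (not the SDK's structs).
struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };  // (x, y, z) vector part, w scalar part

static Vec3 Cross(const Vec3& a, const Vec3& b) {
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

// Rotates v by unit quaternion q:
// v' = v + w*t + u x t, where u = (q.x, q.y, q.z) and t = 2 (u x v),
// which expands to v + 2w(u x v) + 2 u x (u x v).
Vec3 Rotate(const Quat& q, const Vec3& v) {
    const Vec3 u{ q.x, q.y, q.z };
    const Vec3 c = Cross(u, v);
    const Vec3 t{ 2.0f * c.x, 2.0f * c.y, 2.0f * c.z };
    const Vec3 ut = Cross(u, t);
    return { v.x + q.w * t.x + ut.x,
             v.y + q.w * t.y + ut.y,
             v.z + q.w * t.z + ut.z };
}

// World-space marker position = body position + body orientation applied to the local offset.
Vec3 MarkerWorldPosition(const Vec3& bodyPos, const Quat& bodyRot, const Vec3& localOffset) {
    const Vec3 r = Rotate(bodyRot, localOffset);
    return { bodyPos.x + r.x, bodyPos.y + r.y, bodyPos.z + r.z };
}
```

With a 90° rotation about Z, a local offset of (1, 0, 0) maps to (0, 1, 0) before the body position is added.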

SDK/API Support Disclaimer

We provide developer tools to enable OptiTrack customers across a broad set of applications to use their systems in the ways that best suit them. Our Motive API, NatNet SDK, and Camera SDK are designed to enable experienced software developers to integrate data transfer and/or system operation with their preferred systems and pipelines. Sample projects are provided alongside each tool, and we strongly recommend that users reference or use the samples as reliable starting points. The following list specifies the range of support provided for the SDK tools:

Using the SDK tools requires background knowledge on software development; therefore, we do not provide support for basic project setup, compiling, and linking when using the SDK/API to create your own applications.

Although we ensure the SDK tools and their libraries work as intended, we do not provide support for custom developed applications that have been programmed or modified by users using the SDK tools.

Ticketed support will be provided for licensed Motive users using the Motive API and/or the NatNet SDK tools from the included libraries and sample source code only.

The Camera SDK is a free product, and therefore we do not provide free ticketed support for it.

For other questions, please check out the NaturalPoint forums. Very often, similar development issues get reported and solved there.

This guide provides detailed instructions on commonly used functions of the Motive API for developing custom applications. For a full list of the functions, refer to the Motive API: Function Reference page. Also, for a sample use case of the API functions, please check out the provided marker project. In this guide, the following topics will be covered:

Library files and header files

Initialization and shutdown

Capture setup (Calibration)

Configuring camera settings

Updating captured frames

3D marker tracking

Rigid body tracking

Data streaming

When developing a Motive API project, make sure its linker knows where to find the required library files. This can be done either by specifying its location within the project or by copying these files onto the project folder.

MotiveAPI.h

Motive API libraries (.lib and .dll) are located in the lib folder within the Motive install directory, which is C:\Program Files\OptiTrack\Motive\lib by default. This folder contains the 64-bit library files (MotiveAPI.dll and MotiveAPI.lib). When using the API library, all of the required DLL files must be located in the executable directory, so copy the MotiveAPI.dll file into the folder that contains the executable file.

Third-party Libraries

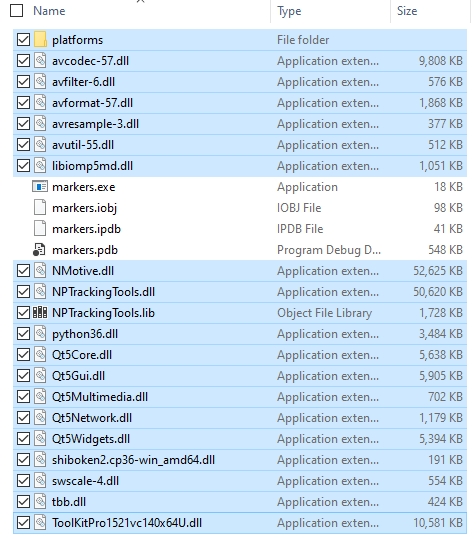

Additional third-party libraries are required for the Motive API, and most of their DLL files can be found in the Motive install directory, C:\Program Files\OptiTrack\Motive\. You can copy all of the DLL files from the Motive installation directory into the directory of the Motive API project to use them. The highlighted items in the image above are the required DLL files.

Lastly, copy the C:\Program Files\OptiTrack\Motive\plugins\platforms folder and its contents into the executable's directory as well, since the libraries in this folder are also used.

For function declarations, there are two required header files, MotiveAPI.h and RigidBodySettings.h, both located in the C:\Program Files\OptiTrack\Motive\inc\ folder. Always include the MotiveAPI.h header file in programs developed against the Motive API. MotiveAPI.h already includes RigidBodySettings.h, so there is no need to include it separately.

The MotiveAPI.h file contains the declaration for most of the functions and classes in the API.

The RigidBodySettings.h file contains the declaration of the cRigidBodySettings class, which is used for configuring Rigid Body asset properties.

Note: You could define these directories by using the MOTIVEAPI_INC, MOTIVEAPI_LIB environment variables. Check the project properties (Visual Studio) of the provided marker project for a sample project configuration.

Motive API, by default, loads the default calibration (CAL) and Application profile (MOTIVE) files from the program data directory unless separately specified. These are the files that Motive also loads at the application startup, and they are located in the following folder:

Default System Calibration: C:\ProgramData\OptiTrack\Motive\System Calibration.cal

Default Application Profile: C:\ProgramData\OptiTrack\MotiveProfile.motive

If specific files need to be loaded into the project, you can export two files from Motive and import them: the Motive application profile (MOTIVE) and the camera calibration (CAL). The application profile is imported to obtain software settings and trackable asset definitions. Reliable 3D tracking data can be obtained only after the camera calibration is imported. Application profiles can be loaded using the TT_LoadProfile function, and calibration files can be loaded using the TT_LoadCalibration function.

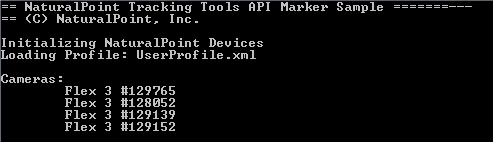

When using the API, connected devices and the Motive API library need to be properly initialized at the beginning of a program and closed down at the end. The following section covers Motive API functions for initializing and closing down devices.

To initialize all of the connected cameras, call the TT_Initialize function. This function initializes the API library and gets the cameras ready for capturing data, so always call this function at the beginning of a program. If you attempt to use the API functions without the initialization, you will get an error.

Motive Profile Load

Please note that TT_Initialize loads the default Motive profile (MOTIVE) from the ProgramData directory during initialization. To load a Motive profile, or settings, from a specific directory, use TT_LoadProfile.

The TT_Update function is primarily used for updating captured frames, which is covered later, but it has another use: it also updates the list of connected devices. You can call it right after initialization to make sure all newly connected devices are properly initialized.

When exiting out of a program, make sure to call the TT_Shutdown function to completely release and close down all of the connected devices. Cameras may fail to shut down completely when this function is not called.
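Because shutdown has to happen on every exit path, a scope guard is one way to enforce it in C++. `ApiSession` is a hypothetical class; in a Motive API program, the two callables would wrap TT_Initialize (returning true on success) and TT_Shutdown, whose exact signatures are in MotiveAPI.h. This sketch calls shutdown only after a successful initialization, which is a design choice worth checking against the header documentation.

```cpp
#include <functional>
#include <utility>

// Hypothetical scope guard: runs an initialization step on construction and the
// matching shutdown step on destruction, so shutdown also happens on early
// returns or exceptions.
class ApiSession {
public:
    ApiSession(std::function<bool()> init, std::function<void()> shutdown)
        : shutdown_(std::move(shutdown)), ok_(init()) {}

    ~ApiSession() {
        if (ok_ && shutdown_) {
            shutdown_();
        }
    }

    // Non-copyable: exactly one object is responsible for shutting down.
    ApiSession(const ApiSession&) = delete;
    ApiSession& operator=(const ApiSession&) = delete;

    bool ok() const { return ok_; }

private:
    std::function<void()> shutdown_;
    bool ok_;
};
```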

The Motive application profile (MOTIVE) stores all the trackable assets involved in a capture and software configurations, including application settings and data streaming settings. When using the API, it is strongly recommended to first configure all of the settings and define trackable assets in Motive, export a profile MOTIVE file, and then load the file by calling the TT_LoadProfile function. This way, you can adjust the settings for your needs in advance and apply them to your program without configuring individual settings.

Cameras must be calibrated in order to track in 3D space. Because camera calibration is a complex process, it is easier to calibrate the camera system in Motive, export the camera calibration file (CAL), and load the exported file into custom applications developed against the API. Once the calibration data is loaded, the 3D tracking functions can be used. For detailed instructions on camera calibration in Motive, please read through the Calibration page.

Loading Calibration

Open Motive.

[Motive] Calibrate: Calibrate camera system using the Calibration panel. Read through the Calibration page for details.

[Motive] Export: After the system has been calibrated, export the calibration file (CAL) from Motive.

Close out of Motive.

[API] Load: Import the calibration into your custom application by calling the TT_LoadCalibration function.

When successfully loaded, you will be able to obtain 3D tracking data using the API functions.

Note:

Calibration Files: When using exported calibration files, make sure to use only a valid calibration. An exported calibration file can be reused as long as the system setup remains unchanged; the file is no longer valid if any part of the system setup has been altered after the calibration. Calibration quality may also degrade over time due to environmental factors. For these reasons, we recommend re-calibrating the system routinely to ensure the best tracking quality.

Tracking Bars: If you are using a tracking bar, camera calibration is not required for tracking 3D points.

Connected cameras are accessible by index numbers, which are assigned in the order the cameras are initialized. Most of the API functions for controlling cameras require an index value. When processing all of the cameras, use the TT_CameraCount function to obtain the total camera count and process each camera within a loop. To point to a specific camera, you can use the TT_CameraID or TT_CameraName functions to identify the camera at a given index. This section covers Motive API functions for checking and configuring camera frame rate, camera video type, camera exposure, pixel brightness threshold, and IR illumination intensity.

The following functions return integer values for the configured settings of a camera specified by its index number. Camera video type is returned as an integer value that represents an image processing mode, as listed in NPVIDEOTYPE.

These camera settings are equivalent to the settings that are listed in the Devices pane of Motive. For more information on each of the camera settings, refer to the Devices pane page.

Now that we have covered functions for obtaining configured settings, let's modify some of them. There are two main functions for adjusting camera settings: TT_SetCameraSettings and TT_SetCameraFrameRate. The TT_SetCameraSettings function configures the video type, exposure, threshold, and intensity settings of a camera specified by its index number. TT_SetCameraFrameRate configures the frame rate of a camera. The supported frame rate range may vary between camera models, so check the device specifications and apply frame rates only within the supported range.

If you wish to keep part of the current camera settings, you can use the above functions to obtain the configured settings (e.g., TT_CameraVideoType, TT_CameraFrameRate, TT_CameraExposure) and pass them as input arguments to the TT_SetCameraSettings function. For example, you can modify the frame rate and IR illumination intensity of all cameras while keeping the other settings constant.

Camera Settings

Valid frame rate values: Varies depending on camera models, refer to the respective hardware specifications.

Valid exposure values: Depends on camera model and frame rate settings.

Valid threshold values: 0 - 255

Valid intensity values: 0 - 15
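The keep-what-you-don't-change pattern can be sketched without the SDK. In a real program, each `CameraSettingsSnapshot` (a hypothetical struct) would be filled from getters such as TT_CameraVideoType, TT_CameraExposure, and TT_CameraFrameRate inside a loop over TT_CameraCount(), then written back with TT_SetCameraSettings and TT_SetCameraFrameRate; those calls and their exact signatures are in MotiveAPI.h and are deliberately not reproduced here. The range checks use the limits listed above.

```cpp
#include <vector>

// Hypothetical snapshot of one camera's current settings.
struct CameraSettingsSnapshot {
    int videoType;
    int exposure;   // valid range depends on camera model and frame rate
    int threshold;  // valid range 0-255
    int intensity;  // valid range 0-15
    int frameRate;  // valid range depends on camera model
};

// Checks the limits listed above. Exposure and frame rate are model-dependent,
// so they are not validated here.
bool IsValid(const CameraSettingsSnapshot& s) {
    return s.threshold >= 0 && s.threshold <= 255 &&
           s.intensity >= 0 && s.intensity <= 15;
}

// Keeps every current setting except frame rate and IR intensity, for all cameras
// (vector position i stands for camera index i). Changes nothing and returns
// false if any resulting setting would be out of range.
bool ApplyFrameRateAndIntensity(std::vector<CameraSettingsSnapshot>& cameras,
                                int newFrameRate, int newIntensity) {
    std::vector<CameraSettingsSnapshot> updated = cameras;
    for (auto& cam : updated) {
        cam.frameRate = newFrameRate;
        cam.intensity = newIntensity;
        if (!IsValid(cam)) {
            return false;
        }
    }
    cameras = updated;
    return true;
}
```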

Video Types

Video Type: see the Data Recording page for more information on image processing modes.

Segment Mode: 0

Grayscale Mode: 1

Object Mode: 2

Precision Mode: 4

MJPEG Mode: 6
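When logging or displaying a camera's mode, it helps to map the integer back to a name. The enum below is a local mirror of the values listed above under a hypothetical name (`VideoType`, with helper `VideoTypeName`); the SDK's own constants are the NPVIDEOTYPE values in the header.

```cpp
#include <string>

// Local mirror of the video type values listed above (hypothetical name).
enum class VideoType : int {
    Segment = 0,
    Grayscale = 1,
    Object = 2,
    Precision = 4,
    MJPEG = 6,
};

// Maps the integer returned by a camera-settings query to a readable name.
std::string VideoTypeName(int value) {
    switch (value) {
        case static_cast<int>(VideoType::Segment):   return "Segment";
        case static_cast<int>(VideoType::Grayscale): return "Grayscale";
        case static_cast<int>(VideoType::Object):    return "Object";
        case static_cast<int>(VideoType::Precision): return "Precision";
        case static_cast<int>(VideoType::MJPEG):     return "MJPEG";
        default: return "Unknown (" + std::to_string(value) + ")";
    }
}
```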

There are other camera settings, such as imager gain, that can be configured using the Motive API. Please refer to the Motive API: Function Reference page for descriptions of other functions.

In order to process multiple consecutive frames, you must update the camera frames using one of the following API functions: TT_Update or TT_UpdateSingleFrame. Call one of the two functions repeatedly within a loop to process all of the incoming frames. In the marker sample, the TT_Update function is called within a while loop as the frameCounter variable is incremented.

There are two functions for updating the camera frames: TT_Update and TT_UpdateSingleFrame. At the most fundamental level, these two functions both update the incoming camera frames. However, they may act differently in certain situations. When a client application stalls momentarily, it could get behind on updating the frames and the unprocessed frames may be accumulated. In this situation, each of these two functions will behave differently.

The TT_Update() function will disregard accumulated frames and service only the most recent frame data, but it also means that the client application will not be processing the previously missed frames.

The TT_UpdateSingleFrame() function ensures that only one frame is processed each time the function is called. However, when there are significant stalls in the program, using this function may result in accumulated processing latency.

In general, use TT_Update(). Consider TT_UpdateSingleFrame() only if your client application must process every single frame of tracking data and you are having trouble calling TT_Update() in a timely fashion.
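The difference between the two update functions can be illustrated with a toy model. This is a sketch of the documented behavior, not the Motive implementation. A queue stands in for frames that have arrived but have not been processed. `updateLatest` mimics TT_Update by skipping the backlog and keeping only the newest frame. `updateSingle` mimics TT_UpdateSingleFrame by consuming one frame per call. Processing the oldest frame first is an assumption, chosen to reflect the accumulated latency described above. All names are hypothetical.

```cpp
#include <deque>
#include <optional>

// Toy model of a backlog of captured frames, identified by frame number.
// It only illustrates the documented behavior; it is not Motive code.
struct FrameBacklog {
    std::deque<int> pending;  // oldest frame at the front

    // Like TT_Update: discard the backlog and service only the newest frame.
    std::optional<int> updateLatest() {
        if (pending.empty()) return std::nullopt;
        int newest = pending.back();
        pending.clear();
        return newest;
    }

    // Like TT_UpdateSingleFrame: service exactly one frame, oldest first,
    // so every frame is processed but a backlog adds latency.
    std::optional<int> updateSingle() {
        if (pending.empty()) return std::nullopt;
        int oldest = pending.front();
        pending.pop_front();
        return oldest;
    }
};
```

With frames 10, 11, and 12 pending, `updateLatest()` returns 12 and empties the queue. `updateSingle()` returns 10 and leaves two frames still waiting.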

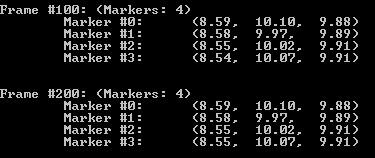

After loading a valid camera calibration, you can use the API functions to track retroreflective markers and get their 3D coordinates. The following section demonstrates how to use the API functions to obtain the 3D coordinates. Since marker data is obtained for each frame, always call the TT_Update or TT_UpdateSingleFrame function each time newly captured frames are received.

Marker Index

In a given frame, each reconstructed marker is assigned a marker index number. These marker indexes point to a particular reconstruction within a frame. You can also use the TT_FrameMarkerCount function to obtain the total marker count and use this value within a loop to process all of the reconstructed markers. Marker index values may vary between frames, but a marker's unique identifier (its label) remains the same. Use the TT_FrameMarkerLabel function to obtain the individual marker labels if you wish to access the same reconstructions across multiple frames.
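Because indexes can change between frames while labels persist, a client that follows markers over time would key its data by label. This sketch assumes each frame has already been copied into a vector of `FrameMarker` records, a hypothetical struct. In a real client, those values would come from TT_FrameMarkerCount, TT_FrameMarkerLabel, and TT_FrameMarkerX/Y/Z. The label is simplified to a plain `int` here; this is an assumption, so check the header for the actual label type.

```cpp
#include <map>
#include <vector>

// Hypothetical per-marker record copied out of one frame.
// The label type is simplified to an int for this sketch.
struct FrameMarker {
    int label;
    float x, y, z;  // global coordinates
};

struct Position { float x, y, z; };

// Appends each marker's position to a per-label trajectory, so the same
// physical marker is followed across frames regardless of its index.
void accumulateByLabel(const std::vector<FrameMarker>& frame,
                       std::map<int, std::vector<Position>>& trajectories) {
    for (const FrameMarker& m : frame) {  // index order does not matter here
        trajectories[m.label].push_back({m.x, m.y, m.z});
    }
}
```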

Marker Position

To obtain the 3D position of a reconstructed marker, use the TT_FrameMarkerX, TT_FrameMarkerY, and TT_FrameMarkerZ functions. These functions return the 3D coordinates (X/Y/Z) of a marker with respect to the global coordinate system, which was defined during the calibration process. You can analyze 3D movements directly from the reconstructed 3D marker positions. Alternatively, you can create a Rigid Body asset from a set of tracked reconstructions to obtain 6 Degrees of Freedom tracking data. Rigid Body tracking via the API is explained in a later section.

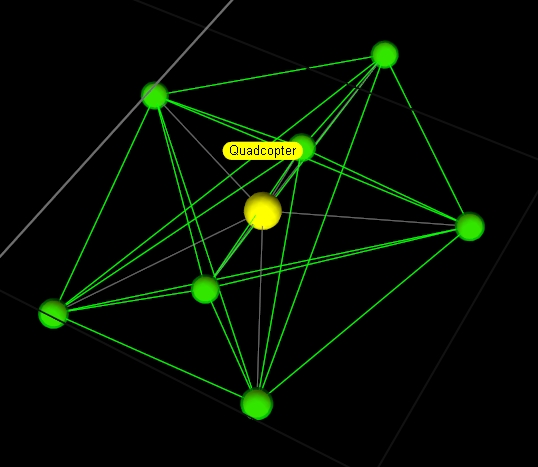

To track the 6 degrees of freedom (DoF) movement of a Rigid Body, a corresponding Rigid Body (RB) asset must be defined. An RB asset is created from a set of reflective markers attached to a rigid object, which is assumed to be undeformable. There are two main approaches for obtaining RB assets when using the Motive API: you can either import existing Rigid Body data or define new Rigid Bodies using the TT_CreateRigidBody function. Once RB assets are defined in the project, Rigid Body tracking functions can be used to obtain the 6 DoF tracking data. This section covers sample instructions for tracking Rigid Bodies using the Motive API.

We strongly recommend reading through the Rigid Body Tracking page for more information on how Rigid Body assets are defined in Motive.

Let's go through importing RB assets into a client application using the API. In Motive, Rigid Body assets can be created from three or more reconstructed markers. All of the created assets can be exported to either an application profile (MOTIVE) or a Motive Rigid Body file (TRA). Each Rigid Body asset saves the marker arrangement from when it was first created. As long as the marker locations remain the same, you can use saved asset definitions to track the respective objects.

Exporting all RB assets from Motive:

Exporting application profile: File → Save Profile

Exporting Rigid Body file (TRA): File → Export Rigid Bodies (TRA)

Exporting individual RB asset:

Exporting Rigid Body file (TRA): Under the Assets pane, right-click on a RB asset and click Export Rigid Body

When using the API, you can load exported assets by calling the TT_LoadProfile function for application profiles and the TT_LoadRigidBodies or TT_AddRigidBodies function for TRA files. When importing TRA files, the TT_LoadRigidBodies function entirely replaces the existing Rigid Bodies with the list of assets from the loaded TRA file. TT_AddRigidBodies, on the other hand, adds the loaded assets to the existing list while keeping the existing assets. Once Rigid Body assets are imported into the application, the API functions can be used to configure and access them.

Rigid Body assets can also be defined directly using the API. The TT_CreateRigidBody function defines a new Rigid Body from given 3D coordinates. This function takes an array of float values representing the x/y/z coordinates of multiple markers with respect to the Rigid Body pivot point. The float array for multiple markers should be listed as follows: {x1, y1, z1, x2, y2, z2, …, xN, yN, zN}. You can enter the coordinate values manually or use the TT_FrameMarkerX, TT_FrameMarkerY, and TT_FrameMarkerZ functions to input the 3D coordinates of tracked markers.

When using the TT_FrameMarkerX/Y/Z functions, keep in mind the following difference. TT_CreateRigidBody interprets marker locations with respect to the RB pivot point, whereas these functions return coordinates in the global coordinate system. To set the pivot point at the center of the created Rigid Body, first compute the pivot point location. Then subtract its coordinates from the 3D marker coordinates obtained by the TT_FrameMarkerX/Y/Z functions. This process is shown in the following example.

Example: Creating RB Assets
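The centering step can be sketched as follows. The sketch assumes the global marker positions have already been read with TT_FrameMarkerX/Y/Z. It takes the pivot to be the average of the marker positions, which is one reasonable definition of the "center", and produces the flat {x1, y1, z1, …} array described above. `centeredMarkerArray` is a hypothetical helper. The resulting array would then be passed to TT_CreateRigidBody; check the Motive API header for that function's exact parameter list.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Vec3 = std::array<float, 3>;

// Converts global marker positions into the flat {x1, y1, z1, ..., xN, yN, zN}
// layout, expressed relative to a pivot placed at the markers' centroid.
std::vector<float> centeredMarkerArray(const std::vector<Vec3>& globalMarkers) {
    std::vector<float> out;
    if (globalMarkers.empty()) return out;

    // Pivot point: average of all marker positions.
    Vec3 pivot{0.f, 0.f, 0.f};
    for (const Vec3& m : globalMarkers)
        for (std::size_t k = 0; k < 3; ++k) pivot[k] += m[k];
    for (std::size_t k = 0; k < 3; ++k)
        pivot[k] /= static_cast<float>(globalMarkers.size());

    // Subtract the pivot from every marker and flatten into x/y/z triples.
    out.reserve(globalMarkers.size() * 3);
    for (const Vec3& m : globalMarkers)
        for (std::size_t k = 0; k < 3; ++k) out.push_back(m[k] - pivot[k]);
    return out;
}
```

For markers at (1, 2, 3), (3, 2, 5), and (2, 5, 1), the pivot is (2, 3, 3). The resulting array is {-1, -1, 0, 1, -1, 2, 0, 2, -2}.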

6 DoF Rigid Body tracking data can be obtained using the TT_RigidBodyLocation function. Using this function, you can save the 3D position and orientation of a Rigid Body into declared variables. The saved position values indicate the location of the Rigid Body pivot point, represented with respect to the global coordinate axes. The orientation is saved in both Euler and quaternion representations.

Example: RB Tracking Data

In Motive, Rigid Body assets have Rigid Body properties assigned to each of them. Depending on how these properties are configured, display and tracking behavior of corresponding Rigid Bodies may vary. When using the API, Rigid Body properties are configured and applied using the cRigidBodySettings class which is declared within the RigidBodySetting.h header file.

Within your program, create an instance of the cRigidBodySettings class and call the API functions to obtain and adjust Rigid Body properties. Once the desired changes are made, use the TT_SetRigidBodySettings function to assign the properties back to a Rigid Body asset.

For detailed information on individual Rigid Body settings, read through the Properties: Rigid Body page.

Once the API has been successfully initialized, data streaming can be enabled or disabled by calling the TT_StreamNP, TT_StreamTrackd, or TT_StreamVRPN function. The TT_StreamNP function enables or disables data streaming via NatNet. The NatNet SDK is a client/server networking SDK designed for sending and receiving NaturalPoint data across networks. Tracking data from the API can be streamed via the NatNet protocol to client applications on various platforms. Once data streaming is enabled, connect the NatNet client application to the server IP address to start receiving the data.

The TT_StreamNP function is equivalent to Broadcast Frame Data from the Data Streaming pane in Motive.

The Motive API does not currently support configuring data streaming settings directly. To configure the streaming server IP address and the data streaming settings, use Motive to save an application profile (MOTIVE file) that contains the desired configuration. Then load the exported profile when using the API. This way, you can set the interface IP address and choose which data is streamed over the network.

For more information on data streaming settings, read through the Data Streaming page.

An overview of the NatNet SDK.

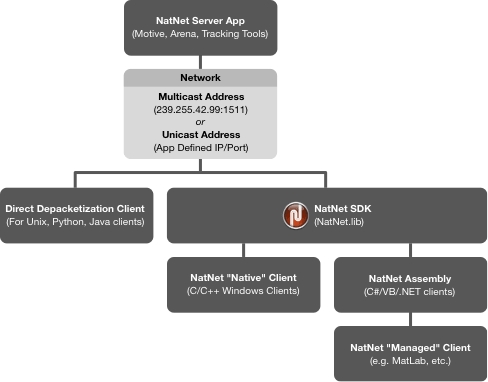

The NatNet SDK is a networking software development kit (SDK) for receiving OptiTrack data across networks. It allows streaming of live or recorded motion capture data from a tracking server (e.g. Motive) into various client applications. Using the SDK, you can develop custom client applications that receive data packets containing real-time tracking information and send remote commands to the connected server. NatNet uses the UDP protocol in conjunction with either Point-To-Point Unicast or IP Multicasting for sending and receiving data. The following diagram outlines the major components of a typical NatNet network setup and how they establish communication between NatNet server and client application.

For previous versions of NatNet, please refer to the provided PDF user guide that ships with the SDK.

Please read through the changelog for key changes in this version.

NatNet is backwards compatible with any version of Motive; however, older versions of Motive may be missing features that are present in newer versions.

The NatNet SDK consists of the following:

NatNet Library: Native C++ networking library contents, including the static library file (.lib), the dynamic library file (.dll), and the corresponding header files.

NatNet Assembly: Managed .NET assembly (NatNetML.dll) for use in .NET compatible clients.

NatNet Samples: Sample projects and compiled executables designed to be quickly integrated into your code.

A NatNet server (e.g. Motive) has 2 threads and 2 sockets: one for sending tracking data to a client and one for sending/receiving commands.

NatNet servers and clients can exist either on the same machine or on separate machines.

Multiple NatNet clients can connect to a single NatNet server.

When a NatNet server is configured to use IP Multicast, the data is sent only once, to the multicast group.

Default multicast IP address: 239.255.42.99 and Port: 1511.

The unicast IP address is defined by the server application.
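The following minimal POSIX sketch (Linux/macOS) shows how the multicast defaults above are used when a raw UDP client joins the NatNet multicast group. This is relevant when the NatNet library itself is not available. It only validates the address and fills the `ip_mreq` membership request. A real client would additionally create a UDP socket and bind it to port 1511 before calling `setsockopt(fd, IPPROTO_IP, IP_ADD_MEMBERSHIP, &mreq, sizeof mreq)`. The helper names are hypothetical. On Windows, Winsock provides equivalent calls.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <cstdint>

// Defaults quoted above for NatNet multicast streaming.
constexpr const char* kDefaultMulticastGroup = "239.255.42.99";
constexpr uint16_t kDefaultDataPort = 1511;

// True if the dotted-quad string is an IPv4 multicast address (224.0.0.0/4).
bool isMulticastAddress(const char* dotted) {
    in_addr addr{};
    if (inet_pton(AF_INET, dotted, &addr) != 1) return false;
    const uint32_t host = ntohl(addr.s_addr);
    return (host & 0xF0000000u) == 0xE0000000u;
}

// Fills the membership request used with IP_ADD_MEMBERSHIP to join the group.
// localInterface is the client's own IP address ("0.0.0.0" lets the OS choose).
bool makeMembership(const char* group, const char* localInterface, ip_mreq& out) {
    out = ip_mreq{};
    return isMulticastAddress(group) &&
           inet_pton(AF_INET, group, &out.imr_multiaddr) == 1 &&
           inet_pton(AF_INET, localInterface, &out.imr_interface) == 1;
}
```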

The NatNet SDK is shipped in a compressed ZIP file format. Within the unzipped NatNet SDK directory, the following contents are included:

Sample Projects: NatNet SDK\Samples

The Sample folder contains Visual Studio 2013 projects that use the NatNetSDK libraries for various applications. These samples are the quickest path toward getting NatNet data into your application. We strongly recommend taking a close look at these samples and adapting the applicable code into your application. More information on these samples is covered on the NatNet Samples page.

Library Header Files: NatNet SDK\include

The include folder contains header files for using the NatNet SDK library.

\include\NatNetTypes.h

The NatNetTypes.h header file contains the type declarations for all of the data formats that are communicated via the NatNet protocol.

\include\NatNetClient.h

\include\NatNetRequests.h

\include\NatNetRepeater.h

The NatNetRepeater.h header file defines how large the packets can be.

\include\NatNetCAPI.h

The NatNetCAPI.h header file contains declarations for the NatNet API helper functions. These functions are intended for use with native client applications only.

Library DLL Files: NatNet SDK\lib

NatNet library files are contained in the lib folder. When running applications that are developed against the NatNet SDK library, corresponding DLL files must be placed alongside the executables.

\lib\x64

This folder contains NatNet SDK library files for 64-bit architecture.

\lib\x64\NatNetLib.dll

Native NatNet library for 64-bit platform architecture. These libraries are used for working with NatNet native clients.

\lib\x64\NatNetML.dll

Managed NatNet assembly files for 64-bit platform architecture. These libraries are used for working with NatNet managed clients, including applications that use .NET assemblies.

Note that this assembly is derived from the native library, and to use the NatNetML.dll, NatNetLib.dll must be linked as well.

\lib\x64\NatNetML.xml

Includes XML documentation for use with the NatNetML.dll assembly. Place this file alongside the DLL file to view the assembly reference.

\lib\x86

No longer supported in 4.0.

\lib\x86\NatNetLib.dll

No longer supported in 4.0.

\lib\x86\NatNetML.dll

No longer supported in 4.0.

\lib\x86\NatNetML.xml

No longer supported in 4.0.

NatNet class and function references for the NatNetClient object.

List of tracking data types available in the NatNet SDK streaming protocol.

NatNet commands for remotely triggering the server application.

NatNet commands for subscribing to specific data types only.

Tip: Code samples are the quickest path towards getting familiar with the NatNet SDK. Please check out the NatNet samples page.

List of NatNet sample projects and the instructions.

Timecode representation in OptiTrack systems and NatNet SDK tools.

A general guideline to using the NatNet SDK for developing a native client application.

A general guideline to using the NatNet SDK for developing a managed client application.

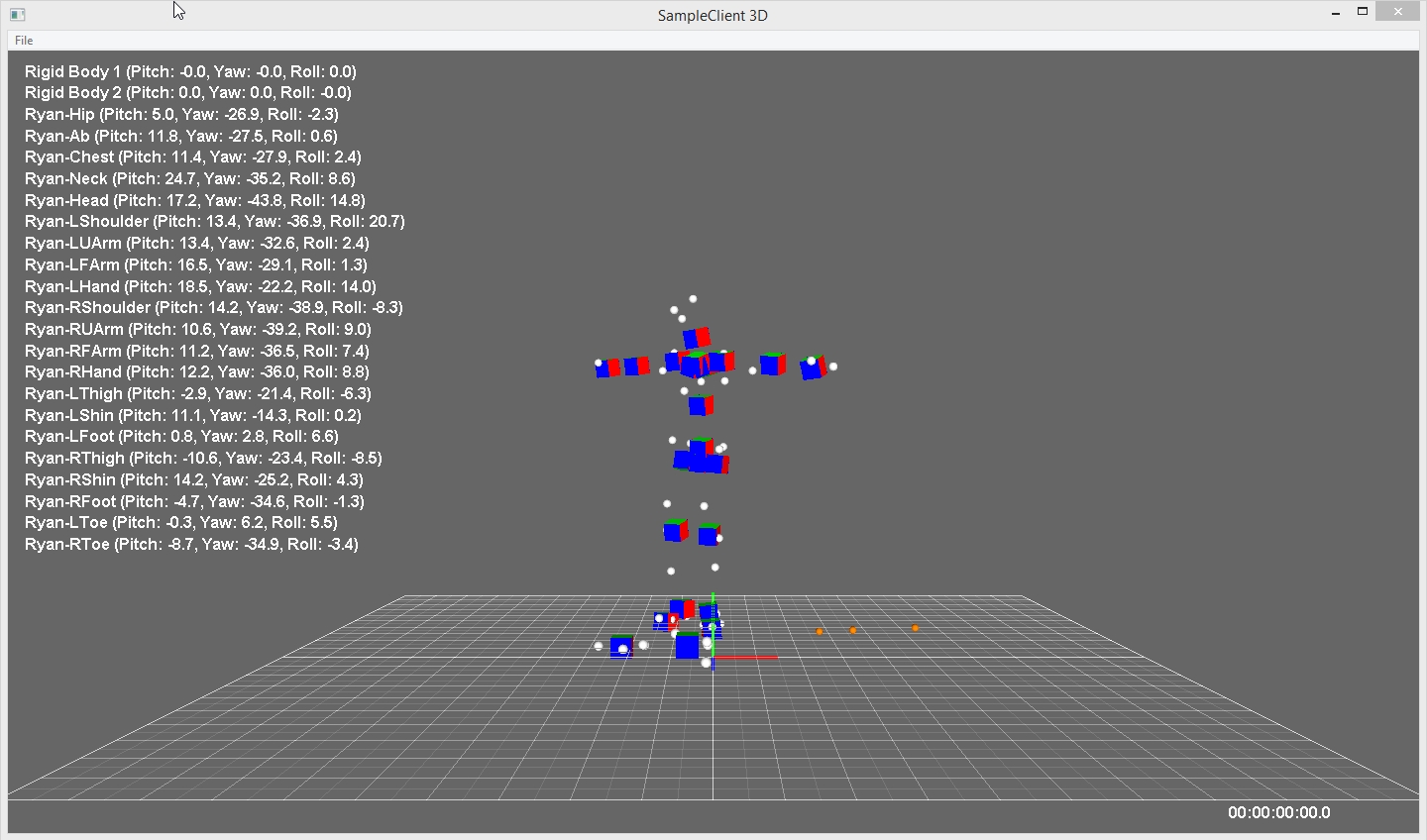

In streamed NatNet data packets, orientation data is represented in the quaternion format (qx, qy, qz, qw). Unlike Euler angles, the quaternion representation is independent of rotation order; however, it does indicate the handedness. When converting quaternion orientation into Euler angles, it is important to decide which Euler angle convention you want to convert to. Some of the provided NatNet samples demonstrate quaternion-to-Euler conversion routines. Please refer to the included WinFormSample, SampleClient3D, or Matlab samples for specific implementation details and usage examples.

To convert from the provided quaternion representation, the following aspects of the desired Euler angle convention must be accounted for:

Rotation Order

Handedness: Left handed or Right handed

Axes: Static (Global) or relative (local) axes.

For example, Motive uses the following convention to display the Euler orientation of an object:

Rotation Order: X (Pitch), Y (Yaw), Z (Roll)

Handedness: Right-handed (RHS)

Axes: Relative Axes (aka 'local')
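As a sketch of how such a conversion can look, the function below extracts X-Y-Z Euler angles from a unit quaternion. It assumes that "X, Y, Z about relative axes, right-handed" means the rotation matrix R = Rx(pitch) · Ry(yaw) · Rz(roll). In other words, the object rotates about X first, then about the new Y, then about the new Z. Verify this against the conversion routines in the NatNet samples, or against the values Motive displays, before relying on it. `EulerXYZ` and `quatToEulerXYZ` are hypothetical names. The decomposition degenerates (gimbal lock) when the yaw approaches ±90°.

```cpp
#include <algorithm>
#include <cmath>

struct EulerXYZ { double pitchX, yawY, rollZ; };  // radians

// Converts a unit quaternion (qx, qy, qz, qw) to X-Y-Z Euler angles about
// relative (local) axes in a right-handed system, assuming this means
// R = Rx(pitch) * Ry(yaw) * Rz(roll).
EulerXYZ quatToEulerXYZ(double qx, double qy, double qz, double qw) {
    // Rotation-matrix entries needed for the XYZ decomposition.
    const double r00 = 1.0 - 2.0 * (qy * qy + qz * qz);
    const double r01 = 2.0 * (qx * qy - qw * qz);
    const double r02 = 2.0 * (qx * qz + qw * qy);
    const double r12 = 2.0 * (qy * qz - qw * qx);
    const double r22 = 1.0 - 2.0 * (qx * qx + qy * qy);

    EulerXYZ e;
    e.yawY   = std::asin(std::clamp(r02, -1.0, 1.0));  // clamp guards rounding
    e.pitchX = std::atan2(-r12, r22);
    e.rollZ  = std::atan2(-r01, r00);
    return e;
}
```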

Important Note: Use of the direct depacketization is not recommended. The syntax of the bit-stream packets is subject to change, requiring an application to update its parsing routines to be compatible with the new format. The direct depacketization approach should be used only where the use of the NatNet library is not applicable.

In situations where the NatNet library is not applicable (e.g., developing on unsupported platforms such as Unix), you can depacketize the streamed data directly from the raw bit-stream without using the NatNet library. To provide the most current bitstream syntax, the NatNet SDK includes working, testable depacketization samples (PacketClient and PythonClient). These samples decode NatNet packets directly without using the NatNet client class.

For the most up-to-date syntax, please refer to either the PacketClient sample or the PythonClient sample to use them as a template for depacketizing NatNet data packets.

Adapt the PacketClient sample (PacketClient.cpp) or the PythonClient sample (PythonSample.py) to your application's code.

Regularly update your code with each revision to the NatNet bitstream syntax.
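As a starting point for direct depacketization, the sketch below reads only the fixed packet header. It relies on assumptions taken from the PacketClient and PythonClient samples as I understand them: every NatNet packet begins with a 16-bit message ID followed by a 16-bit payload size, both little-endian, and message ID 7 denotes frame-of-mocap data. These are exactly the kinds of details that can change between NatNet versions, so confirm them against the samples shipped with your SDK. All names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>

// Assumed header layout (check PacketClient for your SDK version):
// bytes 0-1: message ID, bytes 2-3: payload size, both little-endian.
struct PacketHeader {
    uint16_t messageId;
    uint16_t payloadBytes;
};

// Assumed frame-of-data message ID, as defined in the PacketClient sample.
constexpr uint16_t kAssumedFrameOfDataId = 7;

// Reads a little-endian uint16 byte by byte, independent of host endianness.
uint16_t readLE16(const uint8_t* p) {
    return static_cast<uint16_t>(p[0] | (p[1] << 8));
}

// Parses the 4-byte header and checks that the datagram actually contains
// the number of payload bytes the header claims.
std::optional<PacketHeader> parseHeader(const uint8_t* data, std::size_t len) {
    if (len < 4) return std::nullopt;
    PacketHeader h{readLE16(data), readLE16(data + 2)};
    if (len - 4 < h.payloadBytes) return std::nullopt;  // truncated packet
    return h;
}
```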

When working in Edit mode, pause playback in Motive to view the streamed data. Press the h key to display the NatNet help screen for additional commands.

The 4.0 update includes bit-stream syntax changes that allow up to 32 force plates to be streamed at once. This requires corresponding updates to every program that uses the direct depacketization approach for parsing streamed data. Even systems with fewer than 32 force plates should avoid direct depacketization. See the Important Note above in the Direct Depacketization section for more information.

Starting with Motive 3.0, you can send NatNet remote commands to Motive to select the version of the bitstream syntax that Motive outputs. This is done by sending a command through the command port. For details, please refer to the SetNatNetVersion function demonstrated in the PacketClient.

Bit-Stream NatNet Versions

NatNet 4.1 (Motive 3.1)

NatNet 4.0 (Motive 3.0)

NatNet 3.1 (Motive 2.1)

NatNet 3.0 (Motive 2.0)

NatNet 2.10 (Motive 1.10)

NatNet 2.9 (Motive 1.9)

There are three OptiTrack developer tools for developing custom applications: the Camera SDK, the NatNet SDK, and the Motive API. All of the tools support a C/C++ interface to OptiTrack cameras and provide control over OptiTrack motion capture systems.

Visit our website to compare OptiTrack developer tools and their functions.

SDK/API Support Disclaimer

We provide developer tools to enable OptiTrack customers across a broad set of applications to use their systems in the ways that best suit them. Our Motive API, NatNet SDK, and Camera SDK are designed to enable experienced software developers to integrate data transfer and/or system operation with their preferred systems and pipelines. Sample projects are provided alongside each tool, and we strongly recommend that users reference or use the samples as reliable starting points. The following list specifies the range of support that will be provided for the SDK tools:

Using the SDK tools requires background knowledge on software development; therefore, we do not provide support for basic project setup, compiling, and linking when using the SDK/API to create your own applications.

Although we ensure the SDK tools and their libraries work as intended, we do not provide support for custom developed applications that have been programmed or modified by users using the SDK tools.

Ticketed support will be provided for licensed Motive users using the Motive API and/or the NatNet SDK tools from the included libraries and sample source code only.

The Camera SDK is a free product, and therefore we do not provide free ticketed support for it.

For other questions, please check out the NaturalPoint forums. Very often, similar development issues get reported and solved there.

Go to the Camera SDK page: Camera SDK

The Camera SDK provides hardware (cameras and hubs) controls and access to the most fundamental frame data, such as grayscale images and 2D object information, from each camera. Using the Camera SDK, you can develop your own image processing applications that utilize the capabilities of the OptiTrack cameras. The Camera SDK is a free tool that can be downloaded from our website.

Note: 3D tracking features are not directly supported with Camera SDK, but they are featured via the Motive API. For more information on the Camera SDK, visit our website.

Go to the Motive API page: Motive API

The Motive API allows control of, and access to, the backend software platform of Motive. Not only does it allow access to 2D camera images and the object data, but it also gives control over the 3D data processing pipeline, including solvers for the assets. Using the Motive API, you can employ the features of Motive into your custom application.

Note: When you install Motive, all of the required components for utilizing the API will be installed within the Motive install directory.

Go to the NatNet SDK page: NatNet SDK 4.0

The NatNet SDK is a client/server networking SDK designed for sending and receiving NaturalPoint data across networks. The NatNet SDK makes the motion capture data available to other applications in real-time. It utilizes UDP along with either Unicast or Multicast communication for integrating and streaming 3D reconstructed data, Rigid Body data, and Skeleton data from OptiTrack systems. Using the NatNet SDK, you can develop custom client/server applications that utilize motion capture data. The NatNet SDK is a free tool that can be downloaded from our website.

Visit our website or Data Streaming page for more information on NatNet SDK.

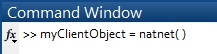

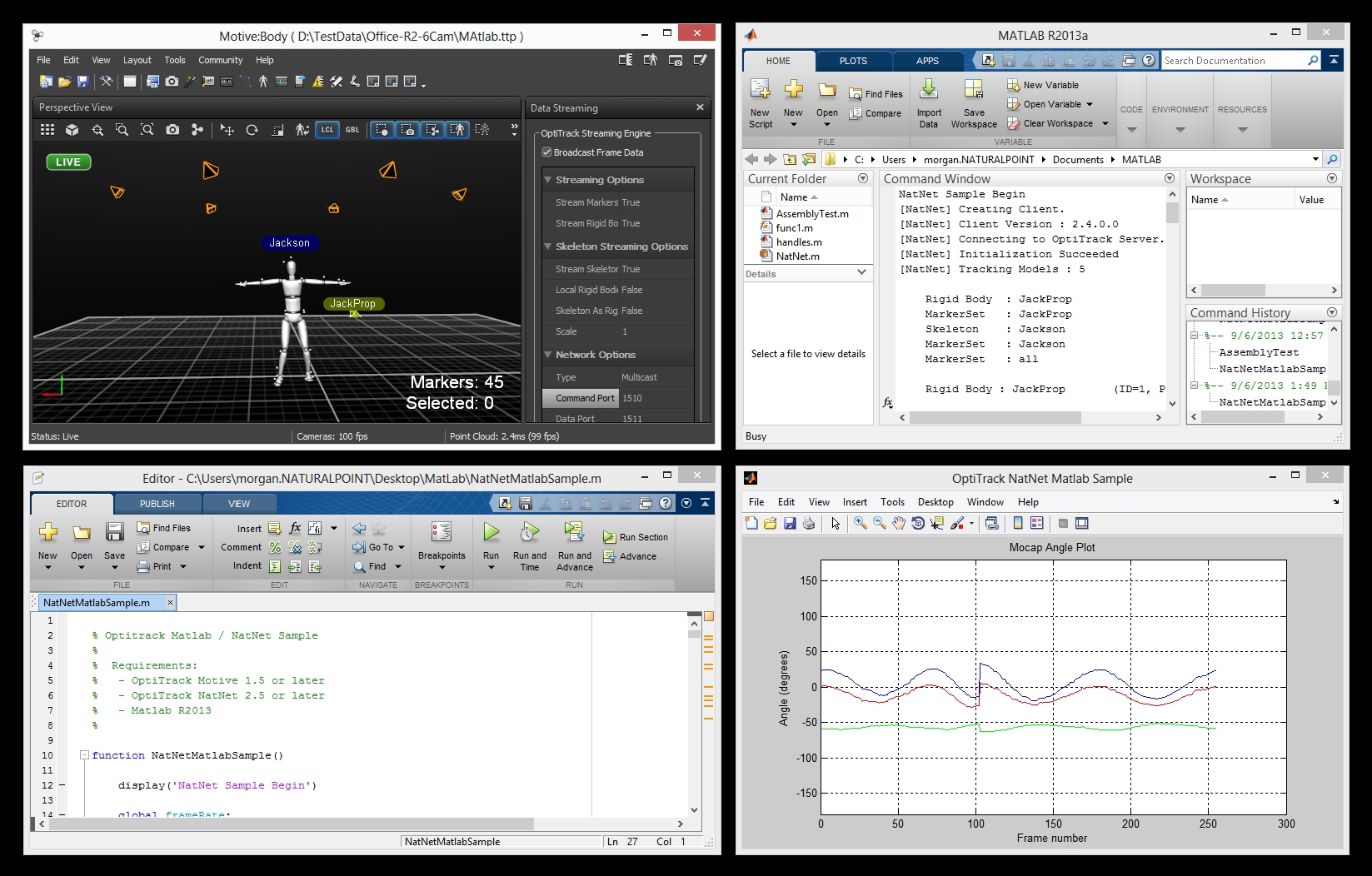

To ease your use of NatNet data in MATLAB applications, we provide a wrapper class (natnet.p) for using real-time streamed NatNet data. Using this class, you can easily connect to and disconnect from the server, receive the tracking data, and parse each component.

The Matlab-NatNet wrapper class is a wrapper for the NatNet assembly and provides a simplified interface for managing the native members in MATLAB. The class definition and supporting code should be placed within the MATLAB PATH. Along with basic object management, the implementation automatically disposes of running connections when a streaming session ends. To use the Matlab wrapper class, the NatNetML assembly must be loaded into the MATLAB session. This is handled automatically: the first time the class is used, the user is prompted to locate the NatNetML.dll file in the Windows file browser. This location is remembered for future MATLAB sessions.

To create an instance of the natnet wrapper class, simply call the class with no input arguments and store it in a variable.

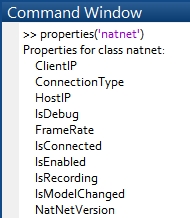

Class Properties: The properties available in the class can be listed with the command properties('natnet').

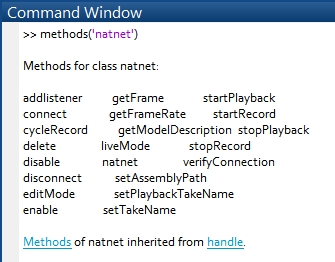

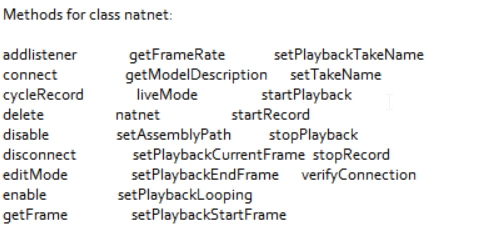

Class Methods: Available methods

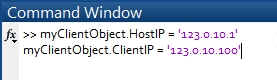

Creating an instance of the class does not automatically connect the object to a host application. First, enable Broadcast Frame Data under the Data Streaming pane in Motive, or the equivalent setting in any other server. Then configure the connection type and the IP addresses for the client and host to reflect your network setup.

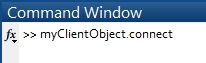

Then call the connect method to connect the natnet object to the host.

When a natnet class instance is created, the default host and client IP addresses are set to '127.0.0.1', the local loopback address of the computer. The natnet object will fail to connect if the network address of the host or client is incorrect.

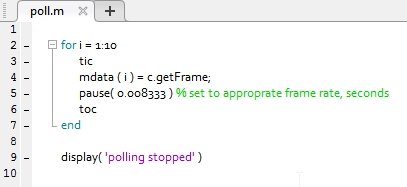

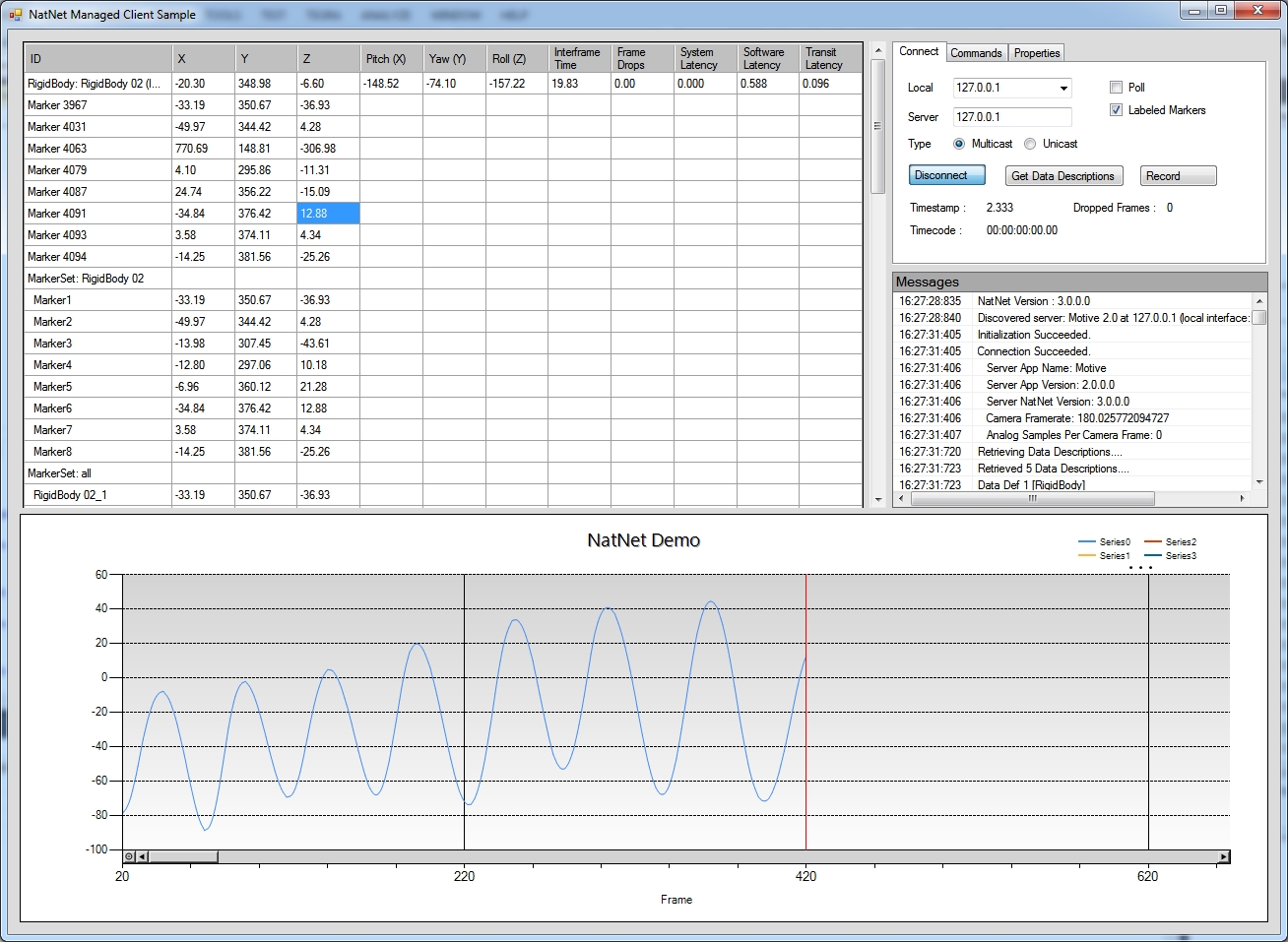

The natnet wrapper class interface provides a method called getFrame for polling mocap data. The getFrame method returns the data structure of the streamed data packet. Polling is supported but not recommended because of access errors. The function poll.m provides a simple example showing how to poll frames of mocap data. After connecting the natnet object to the host server, run the polling script to acquire the data packets in the main workspace.

The natnet class implements a simple interface for using event callbacks. The natnet method addlistener requires two input arguments. The first input is the listener slot to use, and the second is the name of the m-function file to be attached to the listener. Once a function is attached using the addlistener method, it is called each time a frame is received. When the callback function is first created, the listener is turned off by default. This ensures that the user has control over the execution of the event callback function.

Enabling Listener: Start receiving streamed data by enabling the callback function with the enable method. The input of the enable method is the index value of the listener to enable. Multiple functions can be attached to the listener, and you can enable a specific listener by entering its index value. Entering 0 enables all listeners.

Disabling Listener: Three types of callback functions ship with the natnet class. If they are added to the natnet listener list and enabled, they execute each time the host sends a frame of data. The setup.m file contains an example of how to operate the class. To stop streaming, use the disable method and enter a value of 0 to disable all listeners.

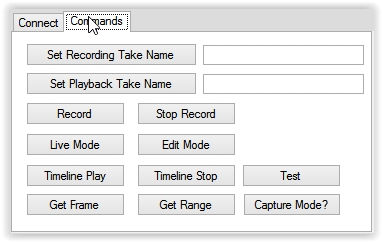

The natnet class can also control the Motive application. For recording, use the startRecord and stopRecord methods; for playback, use the startPlayback and stopPlayback methods. A number of additional commands are also available.

To display the actions of the class, set the IsReporting property to true. This displays operations of the class to the Command Window.

An overview of the general data structure used in the NatNet software development kit (SDK) and how the library is used to parse received tracking information.

For specific details on each of the data types, please refer to the header file.

When receiving streamed data using the NatNet SDK library, its data descriptions should be received before receiving the tracking data.

NatNet data is packaged mainly into two different formats: data descriptions and frame-specific tracking data. Utilizing this format, the client application can discover which data are streamed out from the server application prior to accessing the actual tracking data.

For every asset (e.g., reconstructed markers, Rigid Bodies, Skeletons, force plates) included within streamed capture sessions, the description and tracking data are stored separately. This format allows frame-independent parameters (e.g., name, size, and number) to be stored within instances of the description structs, and frame-dependent values (e.g. position and orientation) to be stored within instances of the frame data structs. When needed, two different packets of an asset can be correlated by referencing its unique identifier values.

Dataset Descriptions contains descriptions of the motion capture data sets for which a frame of motion capture data will be generated. (e.g. sSkeletonDescription, sRigidBodyDescription)

Frame of Mocap Data contains a single frame of motion capture data for all the datasets described from the Dataset Descriptions. (e.g. sSkeletonData, sRigidBodyData)
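A minimal sketch of correlating the two formats by ID follows. Frame-independent values (the name) are indexed once from the descriptions, and each frame-dependent record is matched through its ID. `RigidBodyDescription` and `RigidBodyFrameData` are simplified, hypothetical stand-ins; the real sRigidBodyDescription and sRigidBodyData structs carry more fields (see NatNetTypes.h).

```cpp
#include <string>
#include <unordered_map>
#include <vector>

// Simplified stand-ins for the description and frame structs; the real
// NatNet structs contain more members (see NatNetTypes.h).
struct RigidBodyDescription { int id; std::string name; };
struct RigidBodyFrameData  { int id; float x, y, z; };

// Built once from the data descriptions: frame-independent name per ID.
std::unordered_map<int, std::string> indexNames(
        const std::vector<RigidBodyDescription>& descriptions) {
    std::unordered_map<int, std::string> names;
    for (const auto& d : descriptions) names[d.id] = d.name;
    return names;
}

// Per frame: look up the name of a frame-dependent record via its ID.
// Returns "<unknown>" when no description matches, which may mean the
// asset list changed and the descriptions should be requested again.
std::string nameFor(const RigidBodyFrameData& rb,
                    const std::unordered_map<int, std::string>& names) {
    auto it = names.find(rb.id);
    return it != names.end() ? it->second : "<unknown>";
}
```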

When streaming from Motive, received NatNet data will contain only the assets that are enabled in the and the asset types that are set to true under Streaming Settings in the tab in Motive Settings.

To receive data descriptions from a connected server, use the method. Calling this function saves a list of available descriptions in an instance of sDataDescriptions.

The sDataDescriptions structure stores an array of descriptions, one for each asset (Marker Sets, Rigid Bodies, Skeletons, and Force Plates) involved in a capture, and the necessary information can be parsed from it.

Refer to the header file for more information on each data type and members of each description struct.

The following section lists the main data description structs that are available through the SDK.

Saved struct Type: Native Library: sServerDescription

Saved struct Type: Managed Assembly: ServerDescription

Contains basic network information of the connected server application and the host computer that it is running on. Server descriptions are obtained by calling the GetServerDescription method from the NatNetClient class.

Host connection status

Host information (computer name, IP, server app name)

NatNet version

Host's high resolution clock frequency. Used for calculating the latency

Connection status
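The host clock frequency listed above converts raw host timestamps (clock ticks) into time. A minimal sketch, assuming you already have two tick values from the same host clock. Which frame fields carry such timestamps depends on the NatNet version, so check NatNetTypes.h. `ticksToMilliseconds` is a hypothetical helper. Returning 0.0 for invalid input is a design choice for this sketch only.

```cpp
#include <cstdint>

// Converts the difference between two host-clock tick counts into
// milliseconds using the host's high-resolution clock frequency (ticks/s).
double ticksToMilliseconds(uint64_t startTicks, uint64_t endTicks,
                           uint64_t ticksPerSecond) {
    if (ticksPerSecond == 0 || endTicks < startTicks) return 0.0;  // invalid input
    const uint64_t elapsed = endTicks - startTicks;
    return 1000.0 * static_cast<double>(elapsed) /
           static_cast<double>(ticksPerSecond);
}
```

For example, 50,000 ticks on a 10 MHz host clock correspond to 5 ms.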

Saved struct Type: Native Library: sDataDescriptions

Saved struct Type: Managed Assembly: List<DataDescriptor>

Contains an array of data descriptions, one for each active asset in a capture; each description packet stores basic information about the corresponding asset. Data descriptions are obtained by calling the GetDataDescriptions method from the NatNetClient class. The description for each asset type is explained below.

Saved struct Type: Native Library: sMarkerSetDescription

Saved struct Type: Managed Assembly: MarkerSet

Marker Set description contains a total number of markers in a Marker Set and each of their labels. Note that Rigid Body and Skeleton assets are included in the Marker Set as well. Also, for every mocap session, there is a special MarkerSet named all, which contains a list of all of the labeled markers from the capture.

Name of the Marker Set

Number of markers in the set

Marker names

Saved struct Type: Native Library: sRigidBodyDescription

Saved struct Type: Managed Assembly: RigidBody

Rigid Body description contains corresponding Rigid Body names. Skeleton bones are also considered as Rigid Bodies, and in this case, the description also contains hierarchical relationship for parent/child Rigid Bodies.

Rigid Body name

Rigid Body streaming ID

Rigid Body parent ID (when streaming Skeleton as Rigid Bodies)

Offset displacement from the parent Rigid Body

Array of marker locations that represent the expected marker locations of the Rigid Body asset.

Saved struct Type: Native Library: sSkeletonDescription

Saved struct Type: Managed Assembly: Skeleton

Skeleton description contains corresponding Skeleton asset name, Skeleton ID, and total number of Rigid Bodies (bones) involved in the asset. The Skeleton description also contains an array of Rigid Body descriptions which relates to individual bones of the corresponding Skeleton.

Name of the Skeleton

Skeleton ID: Unique identifier

Number of Rigid Bodies (bones)

Array of bone descriptions

Note: Beginning with NatNet 3.0, Skeleton bone data description packet changed from left-handed convention to right-handed convention to be consistent with the convention used in all other data packets. For older versions of NatNet clients, the server, Motive, will detect the client version and stream out Skeleton data in the matching convention. This change will only affect direct depacketization clients as well as clients that have the NatNet library upgraded to 3.0 from previous versions; for those clients, corresponding changes must be made to work with Motive 2.0.

Saved struct Type: Native Library: sAssetDescription

Saved struct Type: Managed Assembly: Asset

Asset description contains corresponding data for trained markerset assets:

Asset type - Trained Markerset

Asset name

Asset ID: Unique identifier

Number of markers

Number of Rigid Bodies (bones) in the asset

The following asset-specific arrays are also included:

Rigid Body (bone) descriptions

Marker descriptions

Saved struct Type: Native Library: sForcePlateDescription

Saved struct Type: Managed Assembly: ForcePlate

Force plate ID and serial number

Force plate dimensions

Electrical offset

Number of channels

Channel info

Additional fields: see the NatNetTypes.h file for more information.

Saved struct Type: Native Library: sCameraDescription

Saved struct Type: Managed Assembly: Camera

An instance of the sCameraDescription contains information regarding the camera name, its position, and orientation.

Camera Name (can be used with Get/Set property commands)

Camera Position (x, y, z float variables)

Camera Orientation (qx, qy, qz, qw float variables)

For more info, see the NatNetTypes.h file.

Saved struct Type: Native Library: sDeviceDescription

Saved struct Type: Managed Assembly: Device

Device ID. Used only for identification of devices in the stream.

Device Name

Device serial number

Device Type

Channel count

Channel Names

As mentioned at the beginning, frame-specific tracking data are stored separately from the DataDescription instances, because these values cannot be known ahead of time or out of band, only on a per-frame basis. These data are saved into instances of sFrameOfMocapData for the corresponding frames. Each instance contains arrays of frame-specific data structs (e.g., sRigidBodyData, sSkeletonData) for each type of asset included in the capture. The respective frame number, timecode, and streaming latency values are also saved in these packets.

FrameOfMocapData

Refer to the NatNetTypes.h header file or the NatNetML.dll assembly for the most up to date descriptions of the types.

Most of the NatNet SDK data packets contain ID values. An ID value is assigned uniquely to each individual marker as well as to each asset within a capture. These values can be used to determine which asset a given data packet is associated with. One common use is correlating the data descriptions and frame data packets of an asset.

Decoding Member IDs

For each member object included within a parent model, its unique ID value identifies both the parent model and the member itself. Thus, the ID value of a member object needs to be decoded to determine which object it refers to and which parent model it belongs to.

For example, a Skeleton asset is a hierarchical collection of bone Rigid Bodies. Each bone Rigid Body has a unique ID that references both the Skeleton model it belongs to and the Rigid Body itself. When analyzing Skeleton bones, the ID value must be decoded to extract the segment Rigid Body ID; only then can it be used to reference the bone's descriptions.
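A sketch of the decoding step is shown below. It assumes the encoding used in the SDK samples as I understand it: the upper 16 bits of the ID hold the parent model ID (e.g., the Skeleton) and the lower 16 bits hold the member ID (e.g., the bone). NatNetCAPI.h declares helper functions for native clients. If your version provides an ID-decoding helper, prefer it over hand-written bit manipulation, since the helper tracks any change to the encoding. `DecodedId`, `decodeMemberId`, and `encodeMemberId` are hypothetical names.

```cpp
#include <cstdint>

// Assumed encoding: upper 16 bits = parent model ID (e.g. Skeleton),
// lower 16 bits = member ID (e.g. bone Rigid Body).
struct DecodedId {
    int parentId;
    int memberId;
};

DecodedId decodeMemberId(int32_t compositeId) {
    const uint32_t bits = static_cast<uint32_t>(compositeId);
    return {static_cast<int>(bits >> 16), static_cast<int>(bits & 0xFFFFu)};
}

// Inverse of decodeMemberId, useful for building lookup keys or test data.
int32_t encodeMemberId(int parentId, int memberId) {
    return static_cast<int32_t>((static_cast<uint32_t>(parentId) << 16) |
                                (static_cast<uint32_t>(memberId) & 0xFFFFu));
}
```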

The following guide references the SampleClientML.cs client application provided with the SDK. This sample demonstrates the use of the .NET NatNet assembly for connecting to a NatNet server, receiving a data stream, and parsing and printing out the received data.

SDK/API Support Disclaimer

We provide developer tools to enable OptiTrack customers across a broad set of applications to utilize their systems in the ways that best suit them. Our Motive API through the NatNet SDK and Camera SDK is designed to enable experienced software developers to integrate data transfer and/or system operation with their preferred systems and pipelines. Sample projects are provided alongside each tool, and we strongly recommend that users reference or use the samples as reliable starting points. The following list specifies the scope of support provided for the SDK tools:

Using the SDK tools requires background knowledge of software development; therefore, we do not provide support for basic project setup, compiling, and linking when using the SDK/API to create your own applications.

Although we ensure the SDK tools and their libraries work as intended, we do not provide support for custom applications that have been developed or modified by users with the SDK tools.

Ticketed support will be provided for licensed Motive users using the Motive API and/or the NatNet SDK tools from the included libraries and sample source code only.

The Camera SDK is a free product, and therefore we do not provide free ticketed support for it.

For other questions, please check out the . Very often, similar development issues get reported and solved there.

When developing a managed client application, you will need to link both the native and managed DLL files (NatNetLib.dll and NatNetML.dll). The managed NatNet assembly is derived from the native library, so without NatNetLib.dll, NatNetML.dll will not be imported properly. These library files can be found in the NatNetSDK\lib folder for 32-bit platforms and in the NatNetSDK\lib\x64 folder for 64-bit platforms. Make sure these DLL files are properly linked and placed alongside the executables.

Also, when using the NatNetML assembly, place the NatNetML.xml file alongside the imported DLL file. This allows the XML documentation to be included as a reference.

The connection between the tracking server and the client is established through an instance of the NatNet client object (NatNetML.NatNetClientML). This NatNetClientML object is also used for receiving tracking data from, and sending NatNet commands to, the server application. When instantiating the NatNetClientML object, pass an integer value that determines the desired type of UDP connection: multicast (0) or unicast (1).

Server Discovery

You can also use the NatNetServerDiscover class to auto-detect available servers to connect to. This is demonstrated in the WinFromSamplesApp.

The GetDataDescriptions method in the NatNetClientML class queries a list of DataDescriptors from the connected server and saves it in a declared list of NatNetML.DataDescriptions. In the SampleClientML sample, the following lines are executed to accomplish this:

After obtaining a list of data descriptions, use the saved DataDescriptor objects to access and output data descriptions as needed. In many cases, it is better to re-organize and save the received descriptor objects into separate lists, or into hashtables, of corresponding data types, so that they can be referenced later in the program.
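One way to do that re-organization in a native client is sketched below: a single pass over a mixed descriptor list that files each entry into a per-type hash map. The three descriptor structs, the std::variant wrapper, and the choice to key marker sets by name are hypothetical simplifications; the real descriptor types are defined in NatNetTypes.h (native) and NatNetML (managed). Requires C++17.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <variant>
#include <vector>

// Hypothetical simplified descriptor types (not the SDK's own).
struct MarkerSetDesc { std::string name; };
struct RigidBodyDesc { int id; std::string name; };
struct SkeletonDesc  { int id; std::string name; };

using AnyDesc = std::variant<MarkerSetDesc, RigidBodyDesc, SkeletonDesc>;

// Per-type lookup tables built once from the mixed descriptor list.
struct DescriptionIndex {
    std::unordered_map<std::string, MarkerSetDesc> markerSets; // keyed by name
    std::unordered_map<int, RigidBodyDesc> rigidBodies;         // keyed by ID
    std::unordered_map<int, SkeletonDesc> skeletons;            // keyed by ID
};

DescriptionIndex BuildIndex(const std::vector<AnyDesc>& descs) {
    DescriptionIndex index;
    for (const auto& d : descs) {
        // std::get_if returns nullptr unless the variant currently holds that type.
        if (const auto* ms = std::get_if<MarkerSetDesc>(&d)) {
            index.markerSets[ms->name] = *ms;
        } else if (const auto* rb = std::get_if<RigidBodyDesc>(&d)) {
            index.rigidBodies[rb->id] = *rb;
        } else if (const auto* sk = std::get_if<SkeletonDesc>(&d)) {
            index.skeletons[sk->id] = *sk;
        }
    }
    return index;
}
```

Rebuilding the whole index whenever descriptions are re-requested keeps the tables consistent with the server without tracking individual additions or removals.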

The best way to receive tracking data without losing any frames is to create a callback handler function for processing the data. The OnFrameReady event of the client object can be used to register a callback, and the linked function gets called each time a frame is received from the server. Setting up a frame handler function ensures that every frame gets processed promptly. However, handler functions should return as quickly as possible to prevent frames from accumulating due to processing latency within the handler.
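To keep the handler fast, a common pattern is to copy only the needed values inside the callback and hand them to a worker thread through a queue. The sketch below shows such a queue; FrameSnapshot is a hypothetical stand-in for whatever subset of the frame data your application needs, and FrameQueue is generic C++ rather than part of the NatNet SDK.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <optional>

// Hypothetical frame payload; a real handler would copy what it needs from the frame data.
struct FrameSnapshot { int frameNumber; };

// Thread-safe queue: the frame callback pushes, a worker thread pops.
class FrameQueue {
public:
    // Called from the frame callback: copy the data in and return immediately.
    void Push(const FrameSnapshot& frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            frames_.push_back(frame);
        }
        ready_.notify_one();
    }

    // Called from the worker: blocks until a frame is available or Close() was called.
    // Returns std::nullopt only when the queue is closed and fully drained.
    std::optional<FrameSnapshot> Pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return closed_ || !frames_.empty(); });
        if (frames_.empty()) return std::nullopt;
        FrameSnapshot f = frames_.front();
        frames_.pop_front();
        return f;
    }

    // Wakes any waiting worker so it can exit once remaining frames are processed.
    void Close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::deque<FrameSnapshot> frames_;
    bool closed_ = false;
};
```

In this arrangement the frame handler calls Push and returns, while a separate std::thread loops on Pop until it receives an empty optional after Close. The queue is unbounded, so a consumer that falls permanently behind will keep growing memory; dropping older frames is an alternative when only the latest pose matters.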

OnFrameReady2: An alternate frame-ready callback handler, with a different function signature, for .NET applications/hosts that don't support the OnFrameReady event type defined above (e.g. MATLAB).

Calling the GetLastFrameOfData method returns a FrameOfMocapData of the most recent frame streamed from the connected server application. This approach should only be used for .NET applications/hosts that do not support the OnFrameReady callback handler function.

This function is supported in NatNetML only. Native implementations should always use the callback handlers.

When exiting the program, call the Uninitialize method on the connected client object and disconnect the client application from the server.

This document provides general guidelines to create a device plugin for external glove devices in Motive.

Starting with Motive 3.1, an example project, GloveDeviceExample, is included along with the peripheral API library. This guide will reference that example and provide basic information on how to use the peripheralimport library (LIB) to create glove device plugins and identify required configuration settings.

This guide assumes that you have access to Motive 3.1 or above. Additional descriptions are provided as comments throughout the source and header files.

Mocap systems often incorporate external measurement systems in order to accomplish more complex analysis. Commonly integrated devices include force plates, data acquisition boards, and EMG sensors.

There are a few different ways to combine data with external systems: recorded data sets can be analyzed after capture; data can be streamed in real time into a client application and then combined downstream; or data can be combined live in Motive using the plugin interface. With finger-tracking devices, the workflow is much simpler if the motion capture data from the two systems is combined in Motive.

In this article, we will show how to integrate glove devices using the plugin interface provided by the peripheral API library (peripheralimport.lib), which is used to create and manage plugin devices in Motive.

Glove devices update the local quaternion rotations of the finger bones. The hand skeleton in Motive is made up of 15 finger bones, three per finger. Each bone requires 4 float values to represent its quaternion rotation, so in total 60 float analog channels (15 × 4) are created for the glove device.

The hand orientation in Motive follows a right-handed coordinate system. For the left hand, the local +X axis points toward the fingertips in T-pose; for the right hand, +X points toward the wrist/body in T-pose.
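The channel arithmetic above can be made concrete with a small packing helper. The finger-major ordering (finger, then bone, then x, y, z, w) is an assumption for illustration only; the channel order Motive actually expects is configured in the example project's glove device base code, so check it there before reusing this layout.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

constexpr std::size_t kFingers = 5;
constexpr std::size_t kBonesPerFinger = 3;
constexpr std::size_t kFloatsPerQuat = 4; // x, y, z, w
constexpr std::size_t kBoneCount = kFingers * kBonesPerFinger;       // 15
constexpr std::size_t kChannelCount = kBoneCount * kFloatsPerQuat;   // 60

struct Quat { float x, y, z, w; };

// ASSUMPTION: channels are ordered finger-major, then bone, then x, y, z, w.
constexpr std::size_t ChannelIndex(std::size_t finger, std::size_t bone, std::size_t component) {
    return (finger * kBonesPerFinger + bone) * kFloatsPerQuat + component;
}

// Flattens 15 local bone rotations (index = finger * 3 + bone) into 60 analog channel values.
std::array<float, kChannelCount> PackHand(const std::array<Quat, kBoneCount>& bones) {
    std::array<float, kChannelCount> channels{};
    for (std::size_t i = 0; i < bones.size(); ++i) {
        channels[i * kFloatsPerQuat + 0] = bones[i].x;
        channels[i * kFloatsPerQuat + 1] = bones[i].y;
        channels[i * kFloatsPerQuat + 2] = bones[i].z;
        channels[i * kFloatsPerQuat + 3] = bones[i].w;
    }
    return channels;
}
```

For example, the last component (w) of the third bone of the fifth finger lands at channel (4 × 3 + 2) × 4 + 3 = 59, the final one of the 60 channels.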

The glove device example is a guide for integrating glove devices into Motive. This project is located in:

[Motive Installation Directory]\PeripheralAPI\example\GloveDeviceExample

Parts of the GloveDeviceExample code can be replaced with calls to the SDK in order to initialize, connect, receive, and map the glove data into Motive. These are annotated with placeholder comments throughout the source files. The glove device example project includes some of the base classes that can be inherited:

GloveDeviceFactoryBase class, which handles instantiation and initialization of glove devices in Motive.

GloveDeviceBase class, which extends the cPluginDevice class of the peripheral API, and abstracts out the required configurations for glove devices.

ExampleGloveDevice and ExampleGloveAdapterSingleton are sample implementations of a glove plugin by inheriting the above base classes.

HardwareSimulator class is a dummy device to simulate callbacks for the device connection and device data update.

To create a glove device plugin, this example can be used as a template, and the callbacks can be replaced with the glove SDK functions that connect to and report glove devices and their data. The following table describes the source code in more detail.

There are a few requirements from the glove SDK side:

Data callback that reports data from all connected gloves.

Reports device information, including the number of connected devices, serial numbers, battery levels, and signal strengths.

Remote host connection.

Proper error handling for the SDK calls.
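The requirements above can be summarized as an interface. The sketch below is hypothetical: IGloveSdk, GloveSample, GloveResult, and SimulatedGloveSdk are illustrative names, not part of the peripheral API or of any vendor SDK. The simulator plays a role similar to the example's HardwareSimulator class by firing the data callback on demand.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical per-glove sample: device information plus 15 local bone rotations.
struct GloveSample {
    std::string serial;
    float batteryLevel = 0.f;     // 0..1
    float signalStrength = 0.f;   // 0..1
    std::vector<float> boneQuats; // 60 floats (15 bones x 4)
};

// Hypothetical result codes returned by every SDK call (error handling requirement).
enum class GloveResult { Ok, NotConnected, HostUnreachable };

// Hypothetical interface capturing the requirements listed above.
class IGloveSdk {
public:
    using DataCallback = std::function<void(const std::vector<GloveSample>&)>;
    virtual ~IGloveSdk() = default;
    virtual GloveResult ConnectToHost(const std::string& address) = 0; // remote host connection
    virtual GloveResult SetDataCallback(DataCallback cb) = 0;          // all gloves in one call
    virtual int ConnectedDeviceCount() const = 0;                      // device information
};

// Dummy implementation: pretends two gloves are connected and reports them on Tick().
class SimulatedGloveSdk : public IGloveSdk {
public:
    GloveResult ConnectToHost(const std::string& address) override {
        connected_ = !address.empty();
        return connected_ ? GloveResult::Ok : GloveResult::HostUnreachable;
    }
    GloveResult SetDataCallback(DataCallback cb) override {
        if (!connected_) return GloveResult::NotConnected;
        callback_ = std::move(cb);
        return GloveResult::Ok;
    }
    int ConnectedDeviceCount() const override { return connected_ ? 2 : 0; }

    // Simulates one SDK update that carries every connected glove at once.
    void Tick() {
        if (!callback_) return;
        std::vector<GloveSample> samples;
        for (int i = 0; i < ConnectedDeviceCount(); ++i) {
            GloveSample s;
            s.serial = "SIM-" + std::to_string(i);
            s.batteryLevel = 1.f;
            s.signalStrength = 1.f;
            s.boneQuats.assign(60, 0.f);
            samples.push_back(s);
        }
        callback_(samples);
    }

private:
    bool connected_ = false;
    DataCallback callback_;
};
```

A single callback that delivers every connected glove in one batch matches the recommendation that the SDK report all glove data at the same time, and returning a result code from every call gives the plugin something concrete to check.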

The plugin's entry point is the DLLEnumerateDeviceFactories method in dllmain.cpp. This entry method calls the static ExampleGlove_EnumerateDeviceFactories method to instantiate the ExampleGloveAdapter class, which starts DoDetectionThread, a thread that periodically attempts to connect to the glove host. Once connected, it registers the SDK callbacks.

The detection thread in ExampleGloveAdapterSingleton.cpp runs a loop that attempts to connect to the host at the IP address defined under General Settings -> Advanced -> Glove Server Address. The SDK instance can be instantiated either in the constructor of the adapter class or before the while loop. Within the while loop, the thread attempts to connect to the host by calling the ConnectToHost function below.

When the ConnectToHost call successfully connects to the glove server, the required callbacks can be registered:
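The retry logic of such a detection thread can be sketched independently of any glove SDK. In the sketch, tryConnect is a hypothetical stand-in for the SDK's ConnectToHost call, DetectionLoop is an illustrative name, the stop flag lets the adapter end the loop on shutdown, and the retry interval is an arbitrary choice.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <functional>
#include <string>
#include <thread>

// Retries a connection attempt until it succeeds or `stop` is set.
// Returns true once connected, false if stopped first.
bool DetectionLoop(const std::function<bool(const std::string&)>& tryConnect,
                   const std::string& hostAddress,
                   const std::atomic<bool>& stop,
                   std::chrono::milliseconds retryInterval) {
    while (!stop.load()) {
        if (tryConnect(hostAddress)) {
            return true; // connected: the caller can now register the SDK callbacks
        }
        std::this_thread::sleep_for(retryInterval); // back off before the next attempt
    }
    return false;
}
```

In a plugin, this function would run on its own std::thread, with hostAddress taken from the Glove Server Address setting; checking the stop flag each iteration lets the plugin shut down cleanly even if the host never appears.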

The process of creating a device involves two steps:

Instantiate the device factory that owns the plugin device.

Transfer ownership of the device factory to Motive, which then calls the Create method.

In the GloveDeviceExample project, this occurs in dllmain.cpp and in ExampleGloveAdapterSingleton.cpp (ExampleGloveAdapterSingleton::CreateNewGloveDevice).

Once an instance of the device factory is transferred, Motive will call the Create method when it’s ready to create the device. The following code is in the file ExampleGloveDevice.cpp:

The ExampleGloveDeviceFactory class overrides the Create method, which creates an instance of the glove device class (ExampleGloveDevice) and configures it, including setting common glove properties and the data channels required for that device. Finally, it returns a pointer to the device back to Motive. These configurations are common to all glove devices, so these two methods are implemented in the glove device base (GloveDeviceBase.h).
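The factory and ownership flow described above can be modeled in plain C++. In this hypothetical sketch, DeviceSketch, DeviceFactorySketch, GloveFactorySketch, and HostCreate are illustrative stand-ins for cPluginDevice, the factory base classes, and Motive's side of the handshake. The sketch uses std::unique_ptr to make each ownership transfer explicit, whereas the real peripheral API may pass raw pointers, and the channel naming scheme is invented.

```cpp
#include <cassert>
#include <initializer_list>
#include <memory>
#include <string>
#include <vector>

// Hypothetical minimal device: the properties and channels the host reads after Create().
class DeviceSketch {
public:
    virtual ~DeviceSketch() = default;
    std::string deviceType;                // common property identifying a glove device
    std::vector<std::string> channelNames; // analog data channels
};

// Hypothetical factory base: the host calls Create() when it is ready for the device.
class DeviceFactorySketch {
public:
    virtual ~DeviceFactorySketch() = default;
    virtual std::unique_ptr<DeviceSketch> Create() const = 0;
};

class GloveFactorySketch : public DeviceFactorySketch {
public:
    std::unique_ptr<DeviceSketch> Create() const override {
        auto device = std::make_unique<DeviceSketch>();
        device->deviceType = "Glove";
        for (int bone = 0; bone < 15; ++bone) {          // 15 bones x (x, y, z, w) = 60 channels
            for (const char* c : {"x", "y", "z", "w"}) {
                device->channelNames.push_back("Bone" + std::to_string(bone) + "_q" + c);
            }
        }
        return device;                                   // device ownership passes to the host
    }
};

// Stand-in for the host: takes ownership of a factory, then calls Create when ready.
std::unique_ptr<DeviceSketch> HostCreate(std::unique_ptr<DeviceFactorySketch> factory) {
    return factory->Create();
}
```

Keeping the property and channel setup inside Create mirrors the point above that these configurations are shared by all glove devices and so belong in common base code.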

GloveDeviceBase.cpp:

Here, it’s important to define the common glove device properties at the device factory level. More specifically, the device type and device order must specify that this is a glove device because these properties will be referenced by Motive.

The provided example uses data maps in the ExampleGloveAdapter that the registered callbacks keep updated. More specifically, these maps contain device information and tracking data for all devices that the SDK reports. Ideally, the glove SDK should report all glove data at the same time in one packet. From ExampleGloveAdapterSingleton.h:
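A sketch of such a data map follows, assuming one entry per glove keyed by serial number. The SDK callback writes with Update, and each device's collection thread reads a copy with Get, so the lock is held only briefly on both sides. GloveState and GloveDataMap are hypothetical names, not the example's actual types.

```cpp
#include <cassert>
#include <map>
#include <mutex>
#include <optional>
#include <string>
#include <vector>

// Hypothetical per-glove state kept by the adapter.
struct GloveState {
    float battery = 0.f;
    float signal = 0.f;
    std::vector<float> channels; // 60 quaternion floats
};

// Shared map: written by the SDK data callback, read by each device's collection thread.
class GloveDataMap {
public:
    // Called from the SDK callback with one glove's latest data.
    void Update(const std::string& serial, const GloveState& state) {
        std::lock_guard<std::mutex> lock(mutex_);
        latest_[serial] = state;
    }

    // Called from a collection thread; returns a copy, or nullopt if the glove is unknown.
    std::optional<GloveState> Get(const std::string& serial) const {
        std::lock_guard<std::mutex> lock(mutex_);
        auto it = latest_.find(serial);
        if (it == latest_.end()) return std::nullopt;
        return it->second;
    }

private:
    mutable std::mutex mutex_; // mutable so the const Get() can lock it
    std::map<std::string, GloveState> latest_;
};
```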

Each glove device runs its own collection thread, ExampleGloveDevice::DoCollectionThread(), which updates the device information such as signal and battery levels, and also populates its analog channels for the tracking data.

The NatNet SDK features sending remote commands/requests from a client application over to a connected server application (i.e. Motive).

The SendMessageAndWait method of the NatNetClient class is the core method for sending remote commands. This function takes a command string and sends it to the connected Motive server each time it's called; once the server receives the remote command, it performs the corresponding actions. Note that the server understands only a specific set of commands, which are listed in the chart below.

NatNet commands are sent over the UDP connection, on port 1510 by default.

For a sample use of NatNet commands, refer to the provided .

Description

Sends a NatNet command to the NatNet server and waits for a response.

Input Parameters:

szRequest: NatNet command string, which is one of the commands listed in the chart below. If the command requires input parameters, append them to the command with comma delimiters (e.g. string strCommand = "SetPlaybackTakeName," + TakeName;).

tries: Number of attempts to send the command. Default: 10.

timeout: Number of milliseconds to wait for a response from the server before the call times out. Default: 20.

ppServerResponse: Server response for the remote command. The response format depends on which command is sent out.

pResponseSize: Number of bytes in the response.

Returns:

ErrorCode. On success, it returns 0 (ErrorCode_OK).
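Because parameters are passed as comma-delimited text within the command string, a small helper keeps the formatting in one place. BuildNatNetCommand is a hypothetical helper, not an SDK function, and it does not escape commas inside parameter values; the resulting string would then be passed as szRequest to SendMessageAndWait.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Builds a NatNet command string: the command name followed by comma-delimited parameters,
// e.g. "SetPlaybackTakeName,Take 01". Which commands exist, and their parameters,
// come from the supported-commands chart.
std::string BuildNatNetCommand(const std::string& command, const std::vector<std::string>& params) {
    std::string result = command;
    for (const auto& p : params) {
        result += ',';
        result += p;
    }
    return result;
}
```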

Motive Supported NatNet Commands/Requests

Supported for Motive 3.0 or above.

The following is the general format used for subscription command strings: