This page covers the general specifications of the Prime Color camera. For details on how to set up and use the Prime Color, please refer to the Prime Color Setup page in this user guide.

Captured tracking data can be exported into a Track Row Column (TRC) file, which is a format used in various mocap applications. Exported TRC files can also be opened in spreadsheet software (e.g. Excel). These files contain raw output data from the capture, which includes positional data for each labeled and unlabeled marker from a selected Take. Expected marker locations and segment orientation data are not included in the exported files. The header contains basic information such as file name, frame rate, time, number of frames, and corresponding marker labels. The corresponding XYZ data is listed in the remaining rows of the file.
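As a minimal sketch of reading such a file outside a spreadsheet, the following Python parses a small tab-delimited sample. The sample layout (three metadata lines, a marker-label row, an X/Y/Z sub-header row, then one row per frame) is an assumption modeled on the commonly seen TRC layout and should be checked against an actual exported file, since header fields may differ. `SAMPLE_TRC` and `parse_trc` are hypothetical names used only for illustration.

```python
import io

# Synthetic sample in an ASSUMED TRC-style layout (tab-delimited).
SAMPLE_TRC = (
    "PathFileType\t4\t(X/Y/Z)\tsample.trc\n"
    "DataRate\tCameraRate\tNumFrames\tNumMarkers\tUnits\n"
    "120\t120\t2\t2\tmm\n"
    "Frame#\tTime\tMarkerA\t\t\tMarkerB\t\t\n"
    "\t\tX1\tY1\tZ1\tX2\tY2\tZ2\n"
    "1\t0.000000\t1.0\t2.0\t3.0\t4.0\t5.0\t6.0\n"
    "2\t0.008333\t1.5\t2.5\t3.5\t\t\t\n"
)


def parse_trc(stream):
    """Return (metadata, labels, frames) from a TRC-style text stream."""
    lines = [line.rstrip("\r\n") for line in stream]
    # Line 2 holds the metadata keys, line 3 the matching values.
    metadata = dict(zip(lines[1].split("\t"), lines[2].split("\t")))
    # Marker labels start in the third column; blank cells pad each label.
    labels = [cell for cell in lines[3].split("\t")[2:] if cell]
    frames = []
    for line in lines[5:]:
        if not line.strip():
            continue  # tolerate blank separator lines
        cells = line.split("\t")
        frame = {"frame": int(cells[0]), "time": float(cells[1]), "markers": {}}
        for i, label in enumerate(labels):
            xyz = cells[2 + 3 * i: 5 + 3 * i]
            # Assumption: empty cells mean the marker has no data on this frame.
            if len(xyz) == 3 and all(c.strip() for c in xyz):
                frame["markers"][label] = tuple(float(c) for c in xyz)
            else:
                frame["markers"][label] = None
        frames.append(frame)
    return metadata, labels, frames


metadata, labels, frames = parse_trc(io.StringIO(SAMPLE_TRC))
print(metadata["DataRate"], labels, frames[1]["markers"])
```

To read a real export, pass an open file object instead of the `io.StringIO` wrapper, after confirming that the header rows match the assumed layout.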

The default rigid body creation properties are listed under the Rigid Bodies tab. These properties are applied only to rigid bodies that are created after the properties have been modified. For descriptions of the rigid body properties, please read through the Properties: Rigid Body page.

Note that these are the default creation properties. Asset-specific rigid body properties are modified directly from the Properties pane.

Cameras

This page includes all of the Motive tutorial videos for visual learners.

Updated videos coming soon!

The API reports "world-space" values for markers and rigid body objects at each frame. It is often desirable to convert the coordinates of points reported by the API from the world-space (or global) coordinates into the local space of the rigid body. This is useful, for example, if you have a rigid body that defines the world space that you want to track markers within.

Rotation values are reported as both quaternions, and as roll, pitch, and yaw angles (in degrees). Quaternions are a four-dimensional rotation representation that provide greater mathematical robustness by avoiding "gimbal" points that may be encountered when using roll, pitch, and yaw (also known as Euler angles). However, quaternions are also more mathematically complex and are more difficult to visualize, which is why many still prefer to use Euler angles.

There are many potential combinations of Euler angles so it is important to understand the order in which rotations are applied, the handedness of the coordinate system, and the axis (positive or negative) that each rotation is applied about.

These are the conventions used in the API for Euler angles:

Rotation order: XYZ

All coordinates are *right-handed*

Pitch is degrees about the X axis

Yaw is degrees about the Y axis

Roll is degrees about the Z axis

Position values are in millimeters

To create a transform matrix that converts from world coordinates into the local coordinate system of your chosen rigid body, you will first want to compose the local-to-world transform matrix of the rigid body, then invert it to create a world-to-local transform matrix.

To compose the rigid body local-to-world transform matrix from values reported by the API, you can first compose a rotation matrix from the quaternion rotation value or from the yaw, pitch, and roll angles, then inject the rigid body translation values. Transform matrices can be defined as either "column-major" or "row-major". In a column-major transform matrix, the translation values appear in the right-most column of the 4x4 transform matrix. For purposes of this article, column-major transform matrices will be used. It is beyond the scope of this article, but it is just as feasible to use row-major matrices by transposing matrices.
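A minimal sketch of the first step, composing the 3x3 rotation matrix from a quaternion, is shown here. It assumes a quaternion given in (x, y, z, w) order in a right-handed system; the component order used by the API is not stated on this page, so confirm it before use. `quat_to_matrix` is a hypothetical helper name.

```python
import math


def quat_to_matrix(x, y, z, w):
    """Convert a quaternion (x, y, z, w) to a 3x3 rotation matrix (list of rows)."""
    # Normalize first so small numerical drift does not scale the result.
    n = math.sqrt(x * x + y * y + z * z + w * w)
    x, y, z, w = x / n, y / n, z / n, w / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


# A 90 degree rotation about Z should map the X axis onto the Y axis.
half = math.radians(90) / 2
R = quat_to_matrix(0.0, 0.0, math.sin(half), math.cos(half))
x_axis_rotated = [row[0] for row in R]  # first column = image of (1, 0, 0)
print(x_axis_rotated)
```

The resulting rows can be placed in the upper-left 3x3 block of the column-major transform matrix, with the translation values in the right-most column.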

In general, given a world transform matrix of the form:

$$
M = \begin{bmatrix} & & & T_x \\ & R & & T_y \\ & & & T_z \\ 0 & 0 & 0 & 1 \end{bmatrix}
$$

where Tx, Ty, Tz are the world-space position of the origin (of the rigid body, as reported from the API), and R is a 3x3 rotation matrix composed as:

$$
R = R_x(\text{Pitch}) \, R_y(\text{Yaw}) \, R_z(\text{Roll})
$$

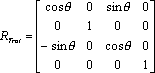

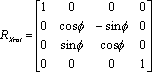

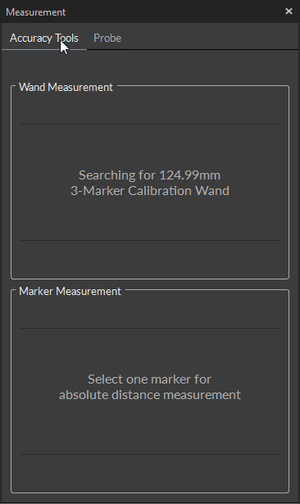

where Rx, Ry, and Rz are 3x3 rotation matrices composed according to:

A handy trick to know about local-to-world transform matrices is that once the matrix is composed, it can be validated by examining each column. The first three rows of column 1 are the (normalized) XYZ direction vector of the rigid body's X axis expressed in world space, column 2 holds the Y axis, and column 3 holds the Z axis. Column 4, as noted previously, is the world-space location of the rigid body's origin.

To convert a point from world coordinates (coordinates reported by the API for a 3D point anywhere in space) into local coordinates, you need a matrix that converts from world space to local space. We have a local-to-world matrix (where the local coordinates are defined as the coordinate system of the rigid body used to compose the transform matrix), so inverting that matrix yields a world-to-local transformation matrix.

Inverting a general 4x4 matrix can be slightly complex and may run into singularities. However, we are dealing with a special transform matrix that contains only a rotation and a translation. Because of that, we can take advantage of the method shown here to easily invert the matrix:
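For reference, the standard closed-form inverse of a transform that contains only a rotation and a translation can be written as follows. This is the textbook rigid-transform identity, offered as a sketch and not necessarily in the exact form of the original figure. It relies on $R^{-1} = R^{\top}$ for rotation matrices, and the symbol $\mathbf{t} = (T_x, T_y, T_z)^{\top}$ is introduced here for the translation column.

```latex
M = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{\top} & 1 \end{bmatrix}
\quad\Longrightarrow\quad
M^{-1} = \begin{bmatrix} R^{\top} & -R^{\top}\mathbf{t} \\ \mathbf{0}^{\top} & 1 \end{bmatrix}
```

Applied to a world-space point $\mathbf{p}$, this gives the local-space point $R^{\top}(\mathbf{p} - \mathbf{t})$.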

Once the world matrix is inverted, multiplying the inverse by the coordinates of a world-space point will yield a point in the local space of the rigid body. Any number of points can be multiplied by this inverted matrix to transform them from world (API) coordinates to local (rigid body) coordinates.

The API includes a sample (markers.sln/markers.cpp) that demonstrates this exact usage.
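The whole procedure can also be sketched in a few lines of Python. This is an illustrative sketch, not the code from the API sample: it assumes the standard right-handed rotation matrices for Rx, Ry, and Rz (the figures are not reproduced here), angles in degrees, and positions in millimeters. All function names are hypothetical.

```python
import math


def rot_x(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1, 0, 0], [0, c, -s], [0, s, c]]


def rot_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]


def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def matmul3(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def local_to_world(pitch, yaw, roll, position):
    """Compose R = Rx(pitch) * Ry(yaw) * Rz(roll); return (R, translation)."""
    r = matmul3(matmul3(rot_x(pitch), rot_y(yaw)), rot_z(roll))
    return r, list(position)


def local_to_world_point(r, t, point):
    """p_world = R * p_local + t"""
    return [sum(r[i][k] * point[k] for k in range(3)) + t[i] for i in range(3)]


def world_to_local_point(r, t, point):
    """Apply the inverted transform: p_local = R^T * (p_world - t)."""
    d = [point[i] - t[i] for i in range(3)]
    return [sum(r[k][i] * d[k] for k in range(3)) for i in range(3)]


# Rigid body at (100, 0, 0) mm, yawed 90 degrees about the Y axis.
r, t = local_to_world(0.0, 90.0, 0.0, (100.0, 0.0, 0.0))
world_point = local_to_world_point(r, t, (0.0, 0.0, 50.0))
local_point = world_to_local_point(r, t, world_point)
print(world_point, local_point)
```

Transforming a local point to world space and back should return the original coordinates, which is a quick way to check that the rotation order and sign conventions match the data you receive.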

The default Skeleton display properties for newly created skeletons are listed under the Skeletons tab. These properties are applied only to skeleton assets that are created after the properties have been modified. For descriptions of the skeleton properties, please read through the Properties: Skeleton page.

Note that these are the default creation properties. Asset-specific skeleton properties are modified directly from the Properties pane.

Skeleton Creation Pose

Selects the Skeleton calibration pose used for creation (T-pose, A-pose Palms Downward, A-pose Palms Forward, or A-pose Elbows Bent).

Head Upright

Creates the skeleton with heads upright irrespective of head marker locations.

Straight Arms

Creates the skeleton with arms straight even when arm markers are not straight.

Straight Legs

Creates the skeleton with straight knee joints even when leg markers are not straight.

Feet On Floor

Creates the skeleton with feet planted on the ground level.

Height Marker

Forces the solver to align the height of the created skeleton with the top head marker.

The Info pane in Motive displays real-time tracking information for the rigid body selected in Motive. This pane can be accessed under the View tab in Motive or by clicking the icon on the main toolbar. Reported data includes the total number of tracked rigid body markers, the mean error for each of them, and the 6 Degree of Freedom (position and orientation) tracking data for the rigid body.

Euler Angles

There are many potential combinations of Euler angles so it is important to understand the order in which rotations are applied, the handedness of the coordinate system, and the axis (positive or negative) that each rotation is applied about. The following conventions are used for representing Euler orientation in Motive:

Rotation order: XYZ

All coordinates are *right-handed*

Pitch is degrees about the X axis

Yaw is degrees about the Y axis

Roll is degrees about the Z axis

Position values are in millimeters

This wiki contains instructions on operating OptiTrack motion capture systems. If you are new to the system, start with the to begin your capture experience.

You can navigate through pages using links in the sidebar or using links included within the pages. You can also use the search bar provided on the top-right corner to search for page names and keywords that you are looking for. If you have any questions that are not documented in this wiki or from other provided documentation, please check our or contact our for further assistance.

OptiTrack website:

The Helpdesk: http://help.naturalpoint.com

NaturalPoint Forums: https://forums.naturalpoint.com

The OptiTrack Duo/Trio tracking bars are factory calibrated and there is no need to calibrate the cameras to use the system. By default, the tracking volume is set at the center origin of the cameras and the axes are oriented so that the Z-axis is forward, the Y-axis is up, and the X-axis is left.

When using the Duo/Trio tracking bars, you can set the coordinate origin at the desired location and orientation using either a Rigid Body or a calibration square as a reference point. Using a calibration square will allow you to set the origin more accurately. You can also use a custom calibration square to set this.

Adjusting the Coordinate System Steps

First, place the calibration square at the desired origin. If you are using a Rigid Body, its pivot point position and orientation will be used as the reference.

[Motive] Open the Calibration pane.

[Motive] Open the Ground Planes page.

[Motive] Select the type of calibration square that will be used as a reference to set the global origin. Set it to Auto if you are using a calibration square from us. If you are using a Rigid Body, select the Rigid Body option from the drop-down menu. If you are using a custom calibration square, you will also need to set the vertical offset.

[Motive] Select the Calibration square markers or the Rigid Body markers from the

[Motive] Click the Set Ground Plane button, and the global origin will be adjusted.

If you wish to change the location and orientation of the global axis, you can use the Coordinate Systems Tool which can be found under the Tools tab.

When using the Duo/Trio tracking bars, you can set the coordinate origin at the desired location and orientation using a calibration square. Make sure the calibration square is oriented properly.

Adjusting the Coordinate System Steps

First, place the calibration square at the desired origin.

[Motive] Open the Coordinate System Tools pane under the Tools tab.

[Motive] Select the calibration square markers from the Perspective View pane.

[Motive] Click the Set Ground Plane button from the Coordinate System Tools pane, and the global origin will be adjusted.

PrimeX 41, PrimeX 22, Prime 41*, and Prime 17W* camera models have powerful tracking capability that allows tracking outdoors. With strong infrared (IR) LED illumination and some adjustments to the camera settings, a Prime system can overcome sunlight interference and perform 3D capture. This page provides general hardware and software system setup recommendations for outdoor captures.

Please note that when capturing outdoors, the cameras will have shorter tracking ranges compared to when tracking indoors. Also, the system calibration will be more susceptible to change in outdoor applications because there are environmental variables (e.g. sunlight, wind, etc.) that could alter the system setup. To ensure tracking accuracy, routinely re-calibrate the cameras throughout the capture session.

Even though it is possible to capture under the influence of the sun, it is best to pick cloudy days for captures in order to obtain the best tracking results. The reasons include the following:

Bright illumination from daylight will introduce extraneous reconstructions, requiring additional post-processing effort to clean up the captured data.

Throughout the day, the position of the sun will continuously change as will the reflections and shadows of the nearby objects. For this reason, the camera system needs to be routinely re-masked or re-calibrated.

The surroundings can also work to your advantage or disadvantage depending on the situation. Different outdoor objects reflect 850 nm infrared (IR) light in different ways that can be unpredictable without testing. Lining your background with objects that appear black in IR will help distinguish your markers from the background, which improves tracking. Some examples of outdoor objects and their relative brightness are as follows:

Grass typically appears as bright white in IR.

Asphalt typically appears dark black in IR.

Concrete varies, but it usually appears gray in IR.

1. [Camera Setup]

In general, setting up a truss system for mounting the cameras is recommended for stability, but for outdoor captures, it could be too much effort to do so. For this reason, most outdoor capture applications use tripods for mounting the cameras.

2. [Camera Setup]

Do not aim the cameras directly towards the sun. If possible, place and aim the cameras so that they are capturing the target volume at a downward angle from above.

3. [Camera Setup]

Increase the f-stop setting on the Prime cameras to decrease the aperture size of the lenses. The f-stop setting determines the amount of light that is let through the lenses, and increasing the f-stop value will decrease the overall brightness of the captured image, allowing the system to better accommodate sunlight interference. Furthermore, changing this allows camera exposures to be set to a higher value, which is discussed in a later section. Note that f-stop can be adjusted only on PrimeX 41, PrimeX 22, Prime 41*, and Prime 17W* camera models.
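As a rough worked example of this relationship (standard lens optics, not a Motive-specific figure): the light reaching the sensor scales with the inverse square of the f-number $N$. The f-numbers 2.8 and 4 used here are illustrative values only, not the settings of a particular camera.

```latex
E \propto \frac{1}{N^{2}}
\qquad\Longrightarrow\qquad
\frac{E_{f/4}}{E_{f/2.8}} = \left(\frac{2.8}{4}\right)^{2} = 0.49
```

In other words, moving from f/2.8 to f/4 roughly halves the light that reaches the imager, from ambient sunlight and marker reflections alike.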

4. [Camera Setup] Utilize shadows

Even though it is possible to capture under sunlight, the best tracking result is achieved when the capture environment is best optimized for tracking. Whenever applicable, utilize shaded areas in order to minimize the interference by sunlight.

1. [Camera Settings]

Increase the LED setting on the camera system to its maximum so that the IR LEDs illuminate at full strength. Strong IR illumination will allow the cameras to better differentiate the emitted IR reflections from ambient sunlight.

2. [Camera Settings]

In general, increasing camera exposure makes the overall image brighter, but it also allows the IR LEDs to light up and remain at their maximum brightness for a longer period of time on each frame. This way, the IR illumination is stronger on the cameras, and the imager can more easily detect the marker reflections in the IR spectrum.

When used in combination with the increased f-stop on the lens, this adjustment will give a better distinction of IR reflections. Note that this setup applies only to outdoor applications. For indoor applications, the exposure setting is generally used to control the overall brightness of the image.

*Legacy camera models

Before setting up a motion capture system, choose a suitable setup area and prepare it in order to achieve the best tracking performance. This page highlights some of the considerations to make when preparing the setup area for general tracking applications. Note that this page provides just general recommendations and these could vary depending on the size of a system or purpose of the capture.

First of all, pick a place to set up the capture volume.

Setup Area Size

System setup area depends on the size of the mocap system and how the cameras are positioned. To get a general idea, check out the feature on our website.

Make sure there is plenty of room for setting up the cameras. It is usually beneficial to have extra space in case the system setup needs to be altered. Also, pick an area where there is enough vertical spacing as well. Setting up the cameras at a high elevation is beneficial because it gives wider lines of sight for the cameras, providing a better coverage of the capture volume.

Minimal Foot Traffic

After camera system calibration, the system should remain unaltered in order to maintain the calibration quality. Physical contact with the cameras could change the setup, requiring it to be re-calibrated. To prevent such cases, pick a space where there is only minimal foot traffic.

Flooring

Avoid reflective flooring. The IR lights from the cameras could be reflected by it and interfere with tracking. If this is inevitable, consider covering the floor with surface mats to prevent the reflections.

Avoid flexible or deformable flooring; such flooring can negatively impact your system's calibration.

For the best tracking performance, minimize ambient light interference within the setup area. The motion capture cameras track the markers by detecting reflected infrared light and any extraneous IR lights that exist within the capture volume could interfere with the tracking.

Sunlight: Block any open windows that might let sunlight in. Sunlight contains wavelengths within the IR spectrum and could interfere with the cameras.

IR Light sources: Remove any unnecessary lights in IR wavelength range from the capture volume. IR lights could be emitted from sources such as incandescent, halogen, and high-pressure sodium lights or any other IR based devices.

All cameras are equipped with IR filters, so extraneous lights outside of the infrared spectrum (e.g. fluorescent lights) will not interfere with the cameras. IR lights that cannot be removed or blocked from the setup area can be masked in Motive using the during the system calibration. However, this feature completely discards image data within the masked regions and an overuse of it could negatively impact tracking. Thus, it is best to physically remove the object whenever possible.

Dark-colored objects absorb most visible light, but that does not mean they absorb IR light as well. Therefore, the color of a material is not a good way of determining whether an object will be visible within the IR spectrum. Some materials will look dark to human eyes but appear bright white on the IR cameras. If these items are placed within the tracking volume, they could introduce extraneous reconstructions.

Since you already have the IR cameras in hand, use one of the cameras to check whether there are IR white materials within the volume. If there are, move them out of the volume or cover them up.

Remove any unnecessary obstacles out of the capture volume since they could block cameras' view and prevent them from tracking the markers. Leave only the items that are necessary for the capture.

Remove reflective objects nearby or within the setup area since IR illumination from the cameras could be reflected by them. You can also use non-reflective tapes to cover the reflective parts.

Prime 41 and Prime 17W cameras are equipped with powerful IR LED rings which enable tracking outdoors, even in the presence of some extraneous IR light. The strong illumination from the Prime 41 cameras allows a mocap system to better distinguish marker reflections from extraneous illumination. System settings and camera placements may need to be adjusted for outdoor tracking applications.

Please read through the page for more information.

During the calibration process, a calibration square is used to define the global coordinate axes as well as the ground plane for the capture volume. Each calibration square has a different vertical offset value. When defining the ground plane, Motive will recognize the square and ask the user whether to change the value to the matching offset.

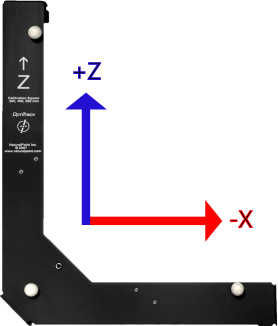

For Motive 1.7 or higher, Right-Handed Coordinate System is used as the standard, across internal and exported formats and data streams. As a result, Motive 1.7 now interprets the L-Frame differently than previous releases:

Motive can export tracking data in BioVision Hierarchy (BVH) file format. Exported BVH files do not include individual marker data. Instead, a selected skeleton is exported using hierarchical segment relationships. In a BVH file, the 3D location of a primary skeleton segment (Hips) is exported, and data on subsequent segments are recorded by using joint angles and segment parameters. Only one skeleton is exported for each BVH file, and it contains the fundamental skeleton definition that is required for characterizing the skeleton in other pipelines.
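To illustrate the hierarchical structure described above, the following sketch walks a small BVH document and reports its segments, total channel count, and motion frames. The sample text is synthetic and follows the general BVH layout (a HIERARCHY block followed by a MOTION block); the segment names, offsets, and channel order in a file exported from Motive may differ. `SAMPLE_BVH` and `summarize_bvh` are hypothetical names.

```python
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0.0 10.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 10.0 0.0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.008333
0.0 90.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0
1.0 90.0 0.0 0.0 0.0 0.0 0.0 5.0 0.0
"""


def summarize_bvh(text):
    """Return (segments, channel_count, frames) for a BVH document."""
    segments = []      # (name, parent) pairs in file order
    stack = []         # names of the currently open blocks
    pending = None     # segment named on the previous line, awaiting "{"
    channel_count = 0
    frames = []
    in_motion = False
    for raw in text.splitlines():
        tokens = raw.split()
        if not tokens:
            continue
        if in_motion:
            # Skip the "Frames:" and "Frame Time:" lines; the rest are data rows.
            if tokens[0] in ("Frames:", "Frame"):
                continue
            frames.append([float(v) for v in tokens])
        elif tokens[0] in ("ROOT", "JOINT"):
            pending = tokens[1]
            segments.append((pending, stack[-1] if stack else None))
        elif tokens[0] == "End":
            pending = None  # End Site blocks carry no name or channels
        elif tokens[0] == "{":
            stack.append(pending)
        elif tokens[0] == "}":
            stack.pop()
        elif tokens[0] == "CHANNELS":
            channel_count += int(tokens[1])
        elif tokens[0] == "MOTION":
            in_motion = True
    return segments, channel_count, frames


segments, channel_count, frames = summarize_bvh(SAMPLE_BVH)
print(segments, channel_count, len(frames))
```

Each motion row holds one value per channel, so the root segment contributes position and rotation values while every other segment contributes only joint angles.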

Notes on relative joint angles generated in Motive: Joint angles generated and exported from Motive are intended for basic visualization purposes only and should not be used for any type of biomechanical or clinical analysis.

General Export Options

BVH Specific Export Options

It is strongly recommended that you use separate audio capture software with timecode to capture and synchronize audio data. Audio capture in Motive is for reference only and is not intended to align perfectly with video or motion capture data.

Scrubbing through a Take is not supported for aligning audio recorded within Motive. If you want the audio to stay closely aligned with the video and motion capture data, you must play the Take from the beginning.

Recorded audio files can be played back from a captured Take or exported into WAV audio files. This page details how to record and play back audio in Motive. Before using an audio input device (microphone) in Motive, first make sure that the device is properly connected and configured in Windows.

In Motive, audio recording and playback settings can be accessed from the tab → Audio Settings.

In Motive, open the Audio Settings, and check the box next to Enable Capture.

Select the audio input device that you want to use.

Press the Test button to confirm that the input device is properly working.

In Motive, open a Take that includes audio recordings.

To playback recorded audio from a Take, check the box next to Enable Playback.

Select the audio output device that you will be using.

In order to play back audio recordings in Motive, the audio format of the recorded sound MUST closely match the audio format used by the output device. Specifically, the channels and frequency of the audio must match. Otherwise, the recorded sound will not be played back.

The recorded audio format is determined by the format of a recording device that was used when capturing Takes. However, audio formats in the input and output devices may not always agree. In this case, you will need to adjust the input device properties to match the take.

Audio capture within Motive does not natively synchronize to video or motion capture data and is intended for reference audio only. If you require synchronization, please use an external device and software with timecode. See below for suggestions for .

A device's audio format can be configured under the Sound settings in Windows. In Sound settings (accessed from Control Panel), select the recording device and click Properties. The default format can be changed under the Advanced tab, as shown in the image below.

Recorded audio files can be exported into WAV format. To export, right-click on a Take from the and select the Export Audio option in the context menu.
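Since playback depends on the channel count and frequency matching the output device, it can be useful to inspect an exported WAV file. The sketch below uses Python's standard `wave` module. The demo file it writes is a stand-in for a real export, and the file name and helper names (`wav_format`, `formats_match`) are hypothetical.

```python
import os
import tempfile
import wave


def wav_format(path):
    """Return (channels, sample_rate_hz, bits_per_sample) of a WAV file."""
    with wave.open(path, "rb") as wav:
        return wav.getnchannels(), wav.getframerate(), wav.getsampwidth() * 8


def formats_match(path, channels, sample_rate_hz):
    """Check a WAV file against the format expected by an output device."""
    found_channels, found_rate, _ = wav_format(path)
    return found_channels == channels and found_rate == sample_rate_hz


# Demo: write one second of silence as a stand-in for an exported file.
demo_path = os.path.join(tempfile.mkdtemp(), "take_audio.wav")
with wave.open(demo_path, "wb") as wav:
    wav.setnchannels(2)
    wav.setsampwidth(2)       # 2 bytes = 16-bit samples
    wav.setframerate(48000)
    wav.writeframes(b"\x00" * (48000 * 2 * 2))

print(wav_format(demo_path))
print(formats_match(demo_path, channels=2, sample_rate_hz=44100))
```

A mismatch reported here suggests which property to adjust in the Windows Sound settings for the device.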

A variety of programs and hardware specialize in audio capture. A non-exhaustive list of examples is given below.

Tentacle Sync TRACK E

Adobe Premiere

Avid Media Composer

In order to capture audio using a different program, you will need to connect both the motion capture system (through the eSync) and the audio capture device to timecode data (and possibly genlock data). You can then use the timecode information to synchronize the two sources of data for your end product.

For more information on synchronizing external devices, read through the page.

The following devices are internally tested and should work for most use cases for reference audio only:

AT2020 USB

MixPre-3 II Digital USB Preamp

The Properties pane can be accessed by clicking on the icon on the toolbar.

The Properties pane lists out the settings configured for selected objects. In Motive, each type of asset has a list of associated properties, and you can access and modify them using the Properties pane. These properties determine how the display and tracking of the corresponding items are done in Motive. This page will go over all of the properties, for each type of asset, that can be viewed or configured in Motive.

Properties are listed for recorded Takes, Rigid Body assets, Skeleton assets, force plate devices, and NI-DAQ devices. Detailed descriptions of the corresponding properties are documented on the following pages:

Selected Items

The Properties pane contains advanced settings that are hidden by default. To access them, open the menu on the top-right corner of the pane and click Show Advanced. All of the settings, including the advanced ones, will then be listed in the pane.

The list of advanced settings can also be customized to show only the settings that are needed for your capture application. To do so, go to the pane menu, click Edit Advanced, and uncheck the settings that you want listed in the pane by default. Once all desired settings are unchecked, click Done Editing to apply the customized configuration.

In Motive, the Application Settings can be accessed under the View tab or by clicking the icon on the main toolbar. The default Application Settings can be restored with Reset Application Settings under the Edit Tools tab on the main toolbar.

The Mouse tab under the application settings is where you can check and customize the mouse actions to navigate and control in Motive.

The following table shows the most basic mouse actions:

You can also pick a preset mouse action profile to use. The presets can be accessed from the drop-down menu below. You can choose from the provided presets, or save your current configuration into a new profile to use later.

The Keyboard tab under the application settings allows you to assign specific hotkey actions to make Motive easier to use. A list of default key actions can also be found on the following page:

Configured hotkeys can be saved into preset profiles to be used on a different computer or to be imported later when needed. Hotkey presets can be imported or loaded from the drop-down menu:

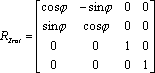

This page provides information on the Probe pane, which can be accessed under the Tools tab or by clicking on the icon from the toolbar.

This section highlights what's in the Probe pane. For detailed instructions on how to use the Probe pane to collect measurement samples, read through Measurement Probe Kit Guide.

The Probe Calibration feature under the Rigid Body edit options can be used to re-calibrate a pivot point of a measurement probe or a custom Rigid Body. This step is also completed as one of the calibration steps when first creating a measurement probe, but you can re-calibrate it under the Modify tab.

In Motive, select the Rigid Body or a measurement probe.

Bring the probe into the tracking volume where all of its markers are well-tracked.

Place and fit the tip of the probe in one of the slots on the provided calibration block.

The Digitized Points section is used for collecting sample coordinates using the probe. You can select which Rigid Body to use from the drop-down menu and set the number of frames used to collect the sample. Clicking on the Sample button will trigger Motive to collect a sample point and save it into the C:\Users\[Current User]\Documents\OptiTrack\measurements.csv file.

When needed, export the measurements of the accumulated digitized points into a separate CSV file, and/or clear the existing samples to start a new set of measurements.

Shows the live X/Y/Z position of the calibrated probe tip.

Shows the live X/Y/Z position of the last sampled point.

Shows the distance between the last point and the live position of the probe tip.

Shows the distance between the last two collected samples.

Shows the angle between the last three collected samples.
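The distance and angle readouts can be reproduced from sampled coordinates with basic vector math. The following sketch is illustrative only: the sample coordinates are made up, and taking the middle sample as the vertex of the angle is an assumption about how the three points are used. `distance` and `angle_deg` are hypothetical helper names.

```python
import math


def distance(a, b):
    """Euclidean distance between two 3D points."""
    return math.dist(a, b)


def angle_deg(a, vertex, c):
    """Angle at `vertex` between the directions toward a and c, in degrees."""
    u = [a[i] - vertex[i] for i in range(3)]
    v = [c[i] - vertex[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


# Three made-up samples (same units as the sampled coordinates).
samples = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (100.0, 100.0, 0.0)]

last_two_distance = distance(samples[-2], samples[-1])
# Assumption: the middle of the last three samples is the vertex.
last_three_angle = angle_deg(samples[-3], samples[-2], samples[-1])
print(last_two_distance, last_three_angle)
```

The same helpers can be applied to rows read from an exported measurements file once its column layout is known.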

This page goes over the features available on the Markersets pane. The Markersets pane can be accessed by clicking on the icon on the toolbar.

The Marker Set is a type of asset in Motive. It is the most fundamental method of grouping related markers, and it can be used to manually label individual markers in post-processing of captured data using the Labeling pane. Note that Marker Sets are used for manual labeling only. For automatic labeling during live mode, a Rigid Body asset or a Skeleton asset is necessary.

Since creating rigid bodies, or skeletons, groups the markers in each set and automatically labels them, Marker Sets are not commonly used in the processing workflow. However, they are still useful for marker-specific tracking applications or when the marker labeling is done in pipelines other than auto-labeling. Also, marker sets are useful when organizing and reassigning the labels.

To create a Marker Set, click the icon under the and select New Marker Set.

Once a Marker Set asset is created, its list of labels can be managed using the Marker Sets pane. First, select the Marker Set asset in Motive, and the corresponding asset will be listed on the Marker Sets pane. New marker labels can then be added by clicking the icon. To create multiple marker labels at once, type in the labels or copy and paste a carriage-return-delimited list of labels from the Windows clipboard onto the pane (press Ctrl+V in the Marker List window), as shown in the image below.

Move to Top

Move the selected label to the top of the labels list.

Move Up

Move the selected label one level higher on the list.

Move Down

Move the selected label one level lower on the list.

Move to Bottom

Move the selected label to the bottom of the labels list.

Rename

Rename the selected label.

Delete

Delete the selected label.

The Reference View pane is used to monitor captured videos from the reference cameras. Up to two reference cameras can be monitored on each pane. This pane can be accessed under the View tab → Reference Overlay or simply by clicking on one of the reference view icons on the main toolbar.

Cameras can be set to a reference view from the Devices pane or by configuring video type to grayscale modes.

In this pane, cameras, markers, and trackable assets can be overlaid on the reference view. This is a good way of monitoring events during the capture. All of the assets and trajectory histories under the Perspective View pane can be overlaid on the reference videos from this pane.

Note that the overlaid assets will not be rendered into exported reference videos.

In optical motion capture systems, proper camera placement is very important for making efficient use of the images captured by each camera. Before setting up the cameras, it is a good idea to plan ahead and create a blueprint of the camera placement layout. This page highlights the key aspects and tips for efficient camera placement.

A well-arranged camera placement can significantly improve the tracking quality. When tracking markers, 3D coordinates are reconstructed from the 2D views seen by each camera in the system. More specifically, correlated 2D marker positions are triangulated to compute the 3D position of each marker. Thus, having multiple distinct vantage points on the target volume is beneficial because it allows wider angles for the triangulation algorithm, which in turn improves the tracking quality. Accordingly, an efficient camera arrangement should have cameras distributed appropriately around the capture volume. By doing so, not only will the tracking accuracy improve, but uncorrelated rays and marker occlusions will also be prevented. Depending on the type of tracking application, the capture volume environment, and the size of the mocap system, proper camera placement layouts may vary.
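The effect of viewing angle on triangulation can be illustrated with a simple two-ray example. This is a geometric sketch only, not the reconstruction algorithm used by Motive: each camera is reduced to a ray (an origin and a direction toward the marker), and the marker position is estimated as the midpoint of the shortest segment between the two rays. The camera positions and the `triangulate` helper are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate(p1, d1, p2, d2):
    """Midpoint of the shortest segment between the rays p1 + s*d1 and p2 + t*d2."""
    w = [a - b for a, b in zip(p1, p2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    # denom shrinks toward zero as the rays become parallel, which is why
    # narrow angles between camera views make the estimate ill-conditioned.
    denom = a * c - b * b
    if denom <= 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    q1 = [p + s * k for p, k in zip(p1, d1)]
    q2 = [p + t * k for p, k in zip(p2, d2)]
    return [(u + v) / 2 for u, v in zip(q1, q2)]


# Two hypothetical cameras 2 m apart, both looking at a marker at (0, 0, 2000) mm.
cam1, ray1 = (-1000.0, 0.0, 0.0), (1000.0, 0.0, 2000.0)
cam2, ray2 = (1000.0, 0.0, 0.0), (-1000.0, 0.0, 2000.0)
marker = triangulate(cam1, ray1, cam2, ray2)
print(marker)
```

When the two rays are nearly parallel, the denominator approaches zero and small errors in the 2D views translate into large errors in the estimated depth, which is one way to see why well-separated vantage points help.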

To ensure that every camera in a mocap system takes full advantage of its capability, each camera needs to be focused and aimed at the target tracking volume. This page includes detailed instructions on how to adjust the focus and aim of each camera for optimal motion capture. OptiTrack cameras are focused at infinity by default, which is generally sufficient for common tracking applications. However, we recommend always double-checking the camera view to make sure the captured images are in focus when first setting up the system. Obtaining the best-quality image is very important because 3D data is derived from the captured images.

This page provides some information on aligning a Rigid Body pivot point with the pivot point of a 3D model that replicates a real object.

When using streamed Rigid Body data to animate a 3D model that replicates a real-life object, the pivot points must be aligned. In other words, the location of the Rigid Body pivot must coincide with the location of the pivot point in the corresponding 3D model. If they are not aligned accurately, the animated motion will not be in a 1:1 ratio compared to the actual motion. This alignment is commonly needed for real-time VR applications where real-life objects are 3D modeled and animated in the scene. The suggested approaches for aligning these pivot points are discussed on this page.

This page provides information and instructions on how to utilize the Probe Measurement Kit.

The measurement probe tool utilizes the precise tracking of OptiTrack mocap systems and allows you to measure 3D locations within a capture volume. A probe with an attached Rigid Body is included with the purchased measurement kit. By looking at the markers on the Rigid Body, Motive calculates a precise x-y-z location of the probe tip, which allows you to collect 3D samples in real-time with sub-millimeter accuracy. For the most precise calculation, a probe calibration process is required. Once the probe is calibrated, it can be used to sample single points, or multiple points to compute the distance or angle between sampled 3D coordinates.

Make sure the device format of the recording device matches the device format that will be used in the playback devices (speakers and headsets). This is very important because the recorded audio will not play back if these formats do not match. Most speakers have at least 2 channels, so an input device with 2 channels should be used for recording.

Capture the Take.

Make sure the configurations in Device Format closely match the Take Format. This is elaborated further in the section below.

Play the Take.

The default Rigid Body creation properties are listed under the Rigid Bodies tab. These properties are applied only to Rigid Bodies that are newly created after the properties have been modified. For descriptions of the Rigid Body properties, please read through the Properties: Rigid Body page.

Note that these are the default creation properties. Asset-specific Rigid Body properties are modified directly from the Properties pane.

You can change the naming convention of Rigid Bodies when they are first created. For instance, if it is set to RigidBody, the first Rigid Body will be named RigidBody when first created. Any subsequent Rigid Bodies will be named RigidBody 001, RigidBody 002, and so on.
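The naming pattern described above can be sketched as follows. The helper name is hypothetical and only mirrors the example names given in this section; it is not a Motive API.

```python
def default_rigid_body_name(base, index):
    """Return the name of the index-th Rigid Body created (0-based)."""
    # The first asset uses the bare name; later ones get a zero-padded counter.
    return base if index == 0 else f"{base} {index:03d}"


names = [default_rigid_body_name("RigidBody", i) for i in range(3)]
print(names)  # ['RigidBody', 'RigidBody 001', 'RigidBody 002']
```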

User definable ID. When streaming tracking data, this ID can be used as a reference to specific Rigid Body assets.

The minimum number of markers that must be labeled in order for the respective asset to be booted.

The minimum number of markers that must be labeled in order for the respective asset to be tracked.

Applies double exponential smoothing to translation and rotation. Disabled at 0.

Compensate for system latency by predicting movement into the future.

For this feature to work best, smoothing needs to be applied as well.
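To give a feel for how double exponential smoothing and forward prediction fit together, here is a minimal sketch of Holt's method on a single coordinate. The parameters alpha, beta, and predict_steps are assumptions for illustration; how Motive maps its smoothing and prediction settings onto such parameters is not described here, and rotation data would need separate handling.

```python
def double_exponential_smooth(samples, alpha, beta, predict_steps=0):
    """Holt's double exponential smoothing over a 1D sequence (needs >= 2 samples).

    alpha smooths the level, beta smooths the trend (both in (0, 1]).
    predict_steps extrapolates each output that many frames ahead using the trend.
    """
    level = samples[0]
    trend = samples[1] - samples[0]
    output = [level + predict_steps * trend]
    for x in samples[1:]:
        previous_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - previous_level) + (1 - beta) * trend
        output.append(level + predict_steps * trend)
    return output


# A coordinate moving at constant speed is tracked exactly, and prediction
# simply shifts it forward along the estimated trend.
smoothed = double_exponential_smooth([0.0, 1.0, 2.0, 3.0, 4.0], 0.5, 0.5)
predicted = double_exponential_smooth([0.0, 1.0, 2.0, 3.0, 4.0], 0.5, 0.5, predict_steps=2)
```

Because prediction extrapolates the trend estimate, a noisy trend produces a noisy prediction, which is consistent with the advice to apply smoothing when prediction is enabled.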

Toggle 'On' to enable. Displays asset's name over the corresponding skeleton in the 3D viewport.

Select the default color a Rigid Body will have upon creation. Select 'Rainbow' to cycle through a different color each time a new Rigid Body is created.

When enabled this shows a visual trail behind a Rigid Body's pivot point. You can change the History Length, which will determine how long the trail persists before retracting.

Shows a Rigid Body's visual overlay. This is by default Enabled. If disabled, the Rigid Body will only appear as individual markers with the Rigid Body's color and pivot marker.

When enabled for Rigid Bodies, this will display the Rigid Body's pivot point.

Shows the transparent sphere that represents where an asset first searches for markers, i.e. the asset model marker.

When enabled and a valid geometric model is loaded, the model will draw instead of the Rigid Body.

Allows the asset to deform more or less to accommodate markers that don't fit the model. High values will allow assets to fit onto markers that don't match the model as well.

The default Skeleton display properties for newly created Skeletons are listed under the Skeletons tab. These properties are applied only to Skeleton assets that are newly created after the properties have been modified. For descriptions of the Skeleton properties, please read through the Properties: Skeleton page.

Note that these are the default creation properties. Asset-specific Skeleton properties are modified directly from the Properties pane.

Creates the Skeleton with arms straight even when arm markers are not straight.

Creates the Skeleton with straight knee joints even when leg markers are not straight.

Creates the Skeleton with feet planted on the ground level.

Creates the Skeleton with heads upright irrespective of head marker locations.

Force the solver so that the height of the created Skeleton aligns with the top head marker.

Height offset applied to hands to account for markers placed above the wrist and knuckle joints.

Same as the Rigid Body visuals above:

Label

Creation Color

Bones

Asset Model Markers

Changes the color of the skeleton visual to red when there are no markers contributing to a joint.

Display Coordinate axes of each joint.

Displays the lines between labeled skeleton markers and corresponding expected marker locations.

Displays lines between skeleton markers and their joint locations.

Sets the axis convention on exported data. This can be set to a custom convention, or preset conventions for exporting to Motion Builder or Visual3D/Motion Monitor.

X Axis Y Axis Z Axis

Allows customization of the axis convention in the exported file by determining which positional data to be included in the corresponding data set.
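Conceptually, a custom axis convention assigns a signed source axis to each exported axis. The sketch below shows that idea; the function and the mapping strings are hypothetical and do not reflect Motive's actual export settings or presets.

```python
def remap_axes(position, mapping):
    """Remap an (x, y, z) position.

    mapping gives, for each output axis, the source axis as "X", "Y", or "Z",
    optionally prefixed with "-" to flip its sign.
    """
    source = {"X": position[0], "Y": position[1], "Z": position[2]}
    remapped = []
    for spec in mapping:
        sign = -1.0 if spec.startswith("-") else 1.0
        remapped.append(sign * source[spec.lstrip("+-")])
    return tuple(remapped)


# Example: convert a Y-up position to a Z-up, right-handed convention.
z_up = remap_axes((1.0, 2.0, 3.0), ("X", "-Z", "Y"))
print(z_up)  # (1.0, -3.0, 2.0)
```

When building a custom mapping, check that it is a pure rotation (an even number of swaps and sign flips combined); otherwise the exported data is mirrored.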

Frame Rate

Number of samples included per every second of exported data.

Start Frame

Start frame of the exported data. You can either set it to the recorded first frame of the exported Take or to the start of the working range, or scope range, as configured under the Control Deck or in the Graph View pane.

End Frame

End frame of the exported data. You can either set it to the recorded end frame of the exported Take or to the end of the working range, or scope range, as configured under the Control Deck or in the Graph View pane.

Scale

Apply scaling to the exported tracking data.

Units

Sets the length units to use for exported data.
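The combined effect of the Scale and Units settings on a position can be pictured as a single multiplication. This sketch assumes the source data is in meters, which is an assumption for the example; the function and table names are hypothetical.

```python
# Conversion factors from meters to each export unit.
UNITS_PER_METER = {"m": 1.0, "cm": 100.0, "mm": 1000.0}


def convert_position(position_m, units="mm", scale=1.0):
    """Apply a unit conversion and a uniform scale to an (x, y, z) position in meters."""
    factor = UNITS_PER_METER[units] * scale
    return tuple(value * factor for value in position_m)


print(convert_position((1.0, 0.5, -0.25), units="mm"))  # (1000.0, 500.0, -250.0)
```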

Single Joint Torso

When this is set to true, there will be only one skeleton segment for the torso. When set to false, there will be extra joints on the torso, above the hip segment.

Hands Downward

Sets the exported skeleton base pose to use hands facing downward.

MotionBuilder Names

Sets the name of each skeletal segment according to the bone naming convention used in MotionBuilder.

Skeleton Names

Set this to the name of the skeleton to be exported.

Axis Convention

Once it starts collecting the samples, slowly move the probe in a circular pattern while keeping the tip fitted in the slot; making a cone shape overall. Gently rotate the probe to collect additional samples.

When sufficient samples are collected, the mean error of the calibrated pivot point will be displayed.

Click Apply to use the calibrated definition or click Cancel to calibrate again.

An ideal camera placement varies depending on the capture application. In order to figure out the best placements for a specific application, a clear understanding of the fundamentals of optical motion capture is necessary.

To calculate 3D marker locations, tracked markers must be simultaneously captured by at least two synchronized cameras in the system. When not enough cameras are capturing the 2D positions, the 3D marker will not be present in the captured data. As a result, the collected marker trajectory will have gaps, and the accuracy of the capture will be reduced. Furthermore, extra effort and time will be required for post-processing the data. Thus, marker visibility throughout the capture is very important for tracking quality, and cameras need to be capturing at diverse vantages so that marker occlusions are minimized.
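The two-camera requirement translates directly into trajectory gaps. The sketch below takes a hypothetical per-frame count of cameras that see a marker and reports the frame ranges where a 3D position could not be reconstructed; the input format is made up for illustration.

```python
def find_gaps(cameras_seeing_marker, min_cameras=2):
    """Return inclusive (start, end) frame ranges where too few cameras see the marker."""
    gaps = []
    start = None
    for frame, count in enumerate(cameras_seeing_marker):
        if count < min_cameras:
            if start is None:
                start = frame  # a gap begins here
        elif start is not None:
            gaps.append((start, frame - 1))  # the gap ended on the previous frame
            start = None
    if start is not None:
        gaps.append((start, len(cameras_seeing_marker) - 1))
    return gaps


print(find_gaps([3, 2, 1, 0, 2, 4, 1]))  # [(2, 3), (6, 6)]
```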

Depending on captured motion types and volume settings, the instructions for ideal camera arrangement vary. For applications that require tracking markers at low heights, it would be beneficial to have some cameras placed and aimed at low elevations. For applications tracking markers placed strictly on the front of the subject, cameras on the rear won't see those markers and, as a result, become unnecessary. For large volume setups, installing cameras around the perimeter of the volume at the highest elevation will maximize camera coverage and the capture volume size. For captures valuing extreme accuracy, it is better to place cameras close to the object so that cameras capture more pixels per marker and more accurately track small changes in their position.

Again, the optimal camera arrangement depends on the purpose and features of the capture application. Plan the camera placement specific to the capture application so that the capability of the provided system is fully utilized. Please contact us if you need help figuring out the optimal camera arrangement.

For common applications of tracking 3D position and orientation of Skeletons and Rigid Bodies, place the cameras on the periphery of the capture volume. This setup typically maximizes the camera overlap and minimizes wasted camera coverage. General tips include the following:

Mount cameras at the desired maximum height of the capture volume.

Distribute the cameras equidistantly around the setup area.

Adjust angles of cameras and aim them towards the target volume.

For cameras with rectangular FOVs, mount the cameras in landscape orientation. In very small setup areas, cameras can be aimed in portrait orientation to increase vertical coverage, but this typically reduces camera overlap, which can reduce marker continuity and data quality.
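When drafting a layout blueprint, the tips above can be turned into a quick calculation. The sketch below spaces cameras evenly on a circle at a fixed height and estimates the downward tilt needed to aim at a point on the central axis. It assumes a Y-up coordinate system with the floor in the X-Z plane and a circular layout; both are simplifications for illustration, and all names are hypothetical.

```python
import math


def ring_camera_positions(count, radius, height):
    """Return (x, y, z) positions for cameras spaced evenly on a circle (Y is up)."""
    positions = []
    for i in range(count):
        angle = 2 * math.pi * i / count
        positions.append((radius * math.cos(angle), height, radius * math.sin(angle)))
    return positions


def downward_pitch_deg(radius, camera_height, target_height):
    """Tilt below horizontal needed to aim at a point on the central axis."""
    return math.degrees(math.atan2(camera_height - target_height, radius))


cameras = ring_camera_positions(count=8, radius=4.0, height=3.0)
pitch = downward_pitch_deg(radius=4.0, camera_height=3.0, target_height=1.0)
```

Real rooms are rarely circular, so treat the output as a starting point and adjust for walls, truss positions, and the actual shape of the capture volume.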

TIP: For capture setups involving large camera counts, it is useful to separate the capture volume into two or more sections. This reduces the computational load for the software.

Around the volume

For common applications tracking a Skeleton or a Rigid Body to obtain the 6 Degrees of Freedom (x,y,z-position and orientation) data, it is beneficial to arrange the cameras around the periphery of the capture volume for tracking markers both in front and back of the subject.

Camera Elevations

For typical motion capture setup, placing cameras at high elevations is recommended. Doing so maximizes the capture coverage in the volume, and also minimizes the chance of subjects bumping into the truss structure which can degrade calibration. Furthermore, when cameras are placed at low elevations and aimed across from one another, the synchronized IR illuminations from each camera will be detected, and will need to be masked from the 2D view.

However, it can be beneficial to place cameras at varying elevations. Doing so will provide more diverse viewing angles from both high and low elevations and can significantly increase the coverage of the volume. The frequency of marker occlusions will be reduced, and the accuracy of detecting the marker elevations will be improved.

Camera to Camera Distance

Separating every camera by a consistent distance is recommended. When cameras are placed in close vicinity, they capture similar images on the tracked subject, and the extra image will not contribute to preventing occlusions or the reconstruction calculations. This overlap detracts from the benefit of a higher camera count and also doubles the computational load for the calibration process. Moreover, this also increases the chance of marker occlusions because markers will be blocked from multiple views simultaneously whenever obstacles are introduced.

Camera to Object Distance

An ideal distance between a camera and the captured subject also depends on the purpose of the capture. A long distance between the camera and the object gives more camera coverage for larger volume setups. On the other hand, capturing at a short distance will have less camera coverage but the tracking measurements will be more accurate. The camera's lens focus ring may need to be adjusted for close-up tracking applications.
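The coverage versus accuracy trade-off can be estimated with a simple pinhole-camera approximation: the imaged size of a marker is inversely proportional to its distance. In the sketch below, the 12 mm focal length and 14 mm marker diameter are taken from values mentioned elsewhere in this guide, while the pixel pitch is a placeholder assumption; check the specifications of your camera before relying on the numbers.

```python
def marker_diameter_px(marker_mm, distance_m, focal_mm, pixel_pitch_um):
    """Approximate on-sensor marker diameter in pixels (pinhole model)."""
    image_size_mm = focal_mm * marker_mm / (distance_m * 1000.0)
    return image_size_mm / (pixel_pitch_um / 1000.0)


# Hypothetical pixel pitch of 5.5 micrometers.
far = marker_diameter_px(marker_mm=14.0, distance_m=5.0, focal_mm=12.0, pixel_pitch_um=5.5)
near = marker_diameter_px(marker_mm=14.0, distance_m=2.5, focal_mm=12.0, pixel_pitch_um=5.5)
print(round(far, 1), round(near, 1))
```

Halving the distance doubles the marker's diameter in pixels, which gives the centroid calculation more pixels to work with, at the cost of a smaller covered volume.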

Pick a camera to adjust the aim and focus.

Set the camera to the raw grayscale video mode (in Motive) and increase the camera exposure to capture the brightest image (These steps are accomplished by the Aim Assist Button on featured cameras).

Place one or more reflective markers in the tracking volume.

Carefully adjust the camera angle while monitoring the Camera Preview so that the desired capture volume is included within the camera coverage.

Within the Camera Preview in Motive, zoom in on one of the markers so that it fills the frame.

Adjust the focus (detailed instruction given below) so that the captured image is resolved as clearly as possible.

Repeat above steps for other cameras in the system.

Adjusting aim with a single person can be difficult because the user will have to run back and forth between the camera and the host PC in order to adjust the camera angle and monitor the 2D view at the same time. OptiTrack cameras featuring the Aim Assist button (Prime series and Flex 13) make this aiming process easier. With just one button-click, the user can set the camera to the grayscale mode and the exposure value to its optimal setting for adjusting both aim and focus. Fit the capture volume within the vertical and horizontal range shown by the virtual crosshairs that appear when Aim Assist mode is on. With this feature, a single user no longer needs to go back to the host PC to choose cameras and change their settings. Settings for Aim Assist buttons are available from the Application Settings pane.

After all the cameras are placed at the correct locations, they need to be properly aimed in order to fully utilize their capture coverage. In general, all cameras need to be aimed at the target capture volume where markers will be tracked. While cameras are still attached to the mounting structure, carefully adjust the camera clamp so that the camera field of view (FOV) is directed at the capture region. Refer to 2D camera views from the Camera Preview pane, and ensure that each camera view covers the desired capture region.

All OptiTrack cameras (except the Duo 3 and Trio 3 tracking bars) can be re-focused to optimize image clarity at any distance within the tracking range. Change the camera mode to raw grayscale mode and adjust the camera setting, increase exposure and LED setting, to capture the brightest image. Zoom onto one of the reflective markers in the capture volume and check clarity of the image. Then, adjust the camera focus and find the point where the marker image is best resolved. The following images show some examples.

Auto-zoom using Aim Assist button

Double-click on the aim assist button to have the software automatically zoom into a single marker near the center of the camera view. This makes the focusing process a lot easier to accomplish for a single person.

PrimeX 41 and PrimeX 22

For PrimeX 41 and 22 models, camera focus can be adjusted by rotating the focus ring on the lens body, which can be accessed at the center of the camera. The front ring on the lens changes the focus of the camera, and the rear ring adjusts the F-stop of the lens. In most cases, it is beneficial to set the f-stop low to have the aperture at its maximum size for capturing the brightest image. Carefully rotate the focus ring while monitoring the 2D grayscale camera view for image clarity. Once the focus and f-stop have been optimized on the lens, it should be locked down by tightening the set screw. In default configuration, PrimeX 41 cameras are equipped with 12mm F#1.8 lens, and the PrimeX 22 cameras are equipped with 6.8mm F#1.6 lens.

Prime 17W and 41*

For Prime 17W and 41 models, camera focus can be adjusted by rotating the focus ring on the lens body, which can be accessed at the center of the camera. The front ring on the Prime 41 lens changes the focus of the camera, while the rear ring on the Prime 17W adjusts its focus. Set the aperture at its maximum size in order to capture the brightest image. For the Prime 41, the aperture ring is located at the rear of the lens body, whereas the Prime 17W aperture ring is located at the front. Carefully rotate the focus ring while monitoring the 2D grayscale camera view for image clarity. Align the mark with the infinity symbol when setting the focus back to infinity. Once the focus has been optimized, it should be locked down by tightening the set screw.

*Legacy camera models

PrimeX 13 and 13W, and Prime 13* and 13W*

PrimeX 13 and PrimeX 13W use M12 lenses, and the cameras can be focused using custom focus tools to rotate the lens body. Focusing tools are available for purchase; they clip onto the camera lens and rotate it without opening the camera housing. It can be beneficial to lower the LED illumination to minimize reflections from the adjusting hand.

*Legacy camera models

Slim Series

SlimX 13 cameras also feature M12 lenses. The camera focus can be easily adjusted by rotating the lens without the need to remove the housing. Slim cameras support multiple lens types, including third-party lenses so focus techniques will vary. Refer to the lens type to determine how to proceed. (In general, M12 lenses will be focused by rotating the lens body, while C and CS lenses will be focused by rotating the focus ring).

There are two methods for doing this: using a measurement probe to sample 3D points to reference from, or simply using a reference grayscale view to align. The first method, creating and using a measurement probe, is the most accurate and is recommended.

Step 1. Create a Rigid Body of the target object

First, create a Rigid Body from the markers on the target object. By default, the pivot point of the Rigid Body will be positioned at the geometrical center of the marker placement. Then place the object somewhere stable where it will remain stationary.

Step 2. Create a measurement probe.

For instructions on creating a measurement probe, please refer to Measurement Probe page. You can purchase our probe or create your own. All you need is 4 markers with a static relationship to a projected tip.

Step 3. Collect data points to outline the silhouette

Use the created measurement probe to collect sample data points that outline the silhouette of your object. Mark all of the corners and other key features on the object.

Step 4. Attach 3D model

After 3D data points have been generated using the probe, attach your game geometry (obj file) to the Rigid Body by turning on the Model Replace property and importing the geometry under Attached Geometry property.

You can also export the markers created from the probe samples to Maya or other content creation packages to generate models guaranteed to scale correctly.

Step 5. Translate the pivot point

The next step is to translate the 3D model so that the attached model aligns with the silhouette samples collected in Step 3. The model can be easily translated and rotated using the GIZMO tool. Move, rotate, and scale the asset until it is aligned with the silhouette.

For accurate alignment, it will be easier to decrease the size of the marker visual. This can be changed from the Marker Diameter setting under the application settings panel.

Step 6. Copy transformation values

After you have translated, rotated, and scaled the pivot point of the Rigid Body to align the attached 3D model with the sampled data points, the transformation values will be shown under the Attached Geometry property.

Copy and paste this transformation parameter onto the Rigid Body location and orientation options under the Edit tab in the Builder pane. This will translate the pivot point of the Rigid Body in Motive, and align it with the pivot point of the 3D model.

Step 7. Zero all transformation values in the Attached Geometry section

Once the Rigid Body pivot point has been moved using the Builder pane, zero all of the transformation configurations under the Attached Geometry property for the Rigid Body.

Alternatively, if the probe method is not applicable, you can switch one of the cameras into grayscale view, right-click on the camera in the Cameras view, and select Make Reference. This will create a Rigid Body overlay in the Camera view pane, which can be used to align the Rigid Body pivot using a similar approach to the one above.

Measurement probe

Calibration block with 4 slots, with approximately 100 mm spacing between each point.

This section provides detailed steps on how to create and use the measurement probe. Please make sure the camera volume has been calibrated successfully before creating the probe. System calibration is important for the accuracy of marker tracking, and it directly affects the probe measurements.

Creating a probe using the Builder pane

Open the Builder pane under View tab and click Rigid Bodies.

Bring the probe out into the tracking volume and create a Rigid Body from the markers.

Under the Type drop-down menu, select Probe. This will bring up the options for defining a Rigid Body for the measurement probe.

Select the Rigid Body created in step 2.

Place and fit the tip of the probe in one of the slots on the provided calibration block.

Note that there will be two steps in the calibration process: refining Rigid Body definition and calibration of the pivot point. Click Create button to initiate the probe refinement process.

Slowly move the probe in a circular pattern while keeping the tip fitted in the slot; making a cone shape overall. Gently rotate the probe to collect additional samples.

After the refinement, it will automatically proceed to the next step; the pivot point calibration.

Repeat the same movement to collect additional sample data for precisely calculating the location of the pivot or the probe tip.

When sufficient samples are collected, the pivot point will be positioned at the tip of the probe and the Mean Tip Error will be displayed. If the probe calibration was unsuccessful, repeat the calibration from step 4.

Once the probe is calibrated successfully, a probe asset will be displayed over the Rigid Body in Motive, along with live x/y/z position data.

Caution

The probe tip MUST remain fitted securely in the slot on the calibration block during the calibration process.

Also, do not press in with the probe since the deformation from compressing could affect the result.
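The calibration motion keeps the tip fixed while the probe pivots, so each marker on the probe sweeps out part of a sphere centered on the tip. The sketch below fits a sphere to one marker's sampled positions by linear least squares and returns the center as the tip estimate. This is a generic approach shown for illustration only; how Motive actually solves for the pivot and computes its Mean Tip Error is not described here, and all names and sample values are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate samples; vary the probe orientation more")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_sphere(points):
    """Least-squares sphere fit; returns (center, radius, mean radial residual)."""
    # |p|^2 = 2*c.p + k with k = r^2 - |c|^2 is linear in the unknowns (c, k).
    rows = [[2 * x, 2 * y, 2 * z, 1.0] for x, y, z in points]
    rhs = [x * x + y * y + z * z for x, y, z in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(4)]
    cx, cy, cz, k = solve_linear(ata, atb)
    radius = math.sqrt(k + cx * cx + cy * cy + cz * cz)
    residuals = [abs(math.dist(p, (cx, cy, cz)) - radius) for p in points]
    return (cx, cy, cz), radius, sum(residuals) / len(residuals)


# Synthetic samples: a marker 0.15 m from a tip at (0.1, 0.0, -0.2), swept at several tilts.
tip, arm = (0.1, 0.0, -0.2), 0.15
samples = []
for tilt_deg in (20, 35, 50):
    for azimuth_deg in range(0, 360, 30):
        t, a = math.radians(tilt_deg), math.radians(azimuth_deg)
        samples.append((tip[0] + arm * math.sin(t) * math.cos(a),
                        tip[1] + arm * math.cos(t),
                        tip[2] + arm * math.sin(t) * math.sin(a)))
center, radius, residual = fit_sphere(samples)
```

In this simplified model, samples taken at a single fixed tilt lie on one circle, which does not determine a unique sphere. Collecting samples over a range of tilts gives the fit enough information to locate the center.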

Using the Probe pane for sample collection

Under the Tools tab, open the Probe pane.

Place the probe tip on the point that you wish to collect.

Click Take Sample on the Measurement pane.

A Virtual Reference point is constructed at the location, and the coordinates of the point are displayed. The point's location can be exported as a .CSV file.

Collecting additional samples will provide distance and angles between collected samples.
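If you export the sampled coordinates, the same distance and angle values can be reproduced with a few lines of vector math. This is a minimal sketch with made-up sample points; the function name is hypothetical.

```python
import math


def angle_deg(a, vertex, c):
    """Angle at `vertex` between the segments vertex->a and vertex->c, in degrees."""
    u = [p - q for p, q in zip(a, vertex)]
    v = [p - q for p, q in zip(c, vertex)]
    cos_theta = sum(x * y for x, y in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


# Three hypothetical probe samples, in meters.
p1, p2, p3 = (0.1, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.1)
print(math.dist(p1, p2))      # distance between the first two samples
print(angle_deg(p1, p2, p3))  # angle formed at the second sample
```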

You can also use the probe samples to reorient the coordinate axis of the capture volume. The set origin button will position the coordinate space origin at the tip of the probe. And the set orientation option will reorient the capture space by referencing to three sample points.

As the samples are collected, their coordinate data will be written out into the CSV files automatically into the OptiTrack documents folder which is located in the following directory: C:\Users\[Current User]\Documents\OptiTrack. 3D positions for all of the collected measurements and their respective RMSE error values along with distances between each consecutive sample point will be saved in this file.

If needed, you can also trigger Motive to export the collected sample coordinate data into a designated directory. To do this, simply click on the export option on the Probe pane.

The location of the probe tip can also be streamed into another application in real-time. You can do this by data-streaming the probe Rigid Body position via NatNet. Once calibrated, the pivot point of the Rigid Body gets positioned precisely at the tip of the probe. The location of a pivot point is represented by the corresponding Rigid Body x-y-z position, and it can be referenced to find out where the probe tip is located.

Reconstruction in motion capture is a process of deriving 3D points from 2D coordinate information obtained from captured images, and the Point Cloud is the core engine that runs the reconstruction process. The reconstruction settings in the Application Settings modify the engine's parameters for real-time reconstructions. These settings can be modified to optimize the quality of reconstructions in Live mode depending on the conditions of the capture and what you're trying to achieve. Use the 2D Mode to live-monitor the reconstruction outcomes from the configured settings.

See Application Settings: Reconstruction Settings page for more information on each setting.

For details on the reconstruction workflow, read through the Reconstruction and 2D Mode page.

Reconstruction settings for post-processing reconstruction pipelines for recorded captures can be modified under the corresponding Take properties.

Take Suffix Format String

Sets the separator (_) and string format specifiers (%03d) for the suffix added after existing file names.
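The %03d specifier follows the familiar printf convention: an integer padded with zeros to three digits. Python's % operator uses the same specifiers, so the effect can be previewed as below. The helper name is hypothetical, and how Motive chooses the number to insert is not covered here.

```python
def take_name(base, index, suffix_format="_%03d"):
    """Append a printf-style numeric suffix to a base file name."""
    return base + (suffix_format % index)


print(take_name("Take", 1))            # Take_001
print(take_name("Take", 12, "-%02d"))  # Take-12
```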

Numeric LEDs

Enable or disable the LED panel in front of cameras that displays assigned camera numbers.

Auto Archive Takes

Enables or disables auto-archiving of Takes when trimming Takes.

Save Data Folder

Motive persists all of the session folders that are imported into the Data pane so that users don't have to re-import them after closing the application. If this is set to false, the session folders will no longer be persisted, and only the default session folder will be loaded.

Restore Calibration

Automatically loads the previous, or last saved, calibration setting when starting Motive.

Camera ID

Sets how Camera IDs are assigned for each camera in a setup. Available options are By Location and By Serial Number. When assigning by location, camera IDs will be given following the positional order in clockwise direction, starting from the -X and -Z quadrant in respect to the origin.

Device Profile

Sets the default device profile, XML format, to load onto Motive. The device profile determines and configures the settings for peripheral devices such as force plates, NI-DAQ, or navigation controllers.

Switch to MJPEG

Configures the Aim Assist button. Sets whether the button will switch the camera to MJPEG mode and back to the default camera group record mode. Valid options are: True (default) and False.

Aiming Crosshairs

Sets whether the camera button will display the aiming crosshairs on the MJPEG view of the camera. Valid options are True (default), False.

Aiming Button LED

Enables or disables LED illumination on the Aim Assist button behind Prime Series cameras.

Controls the color of the RGB Status Indicator Ring (Prime Series cameras only). Options include distinct indications for Live, Recording, Playback, Selection and Scene camera statuses, and you can choose the color for the corresponding camera status.

Live Color

(Default: Blue) Sets the indicator ring color for cameras in Live mode.

Recording Color

(Default: Red) Sets the indicator ring color for cameras when recording a capture.

Playback Color

(Default: Black) Sets the indicator ring color for cameras when Motive is in playback mode.

Selection Color

(Default: Yellow) Sets the indicator ring color for cameras that are selected in Motive.

Scene Camera

(Default: Orange) Sets the indicator ring color for cameras that are set as the reference camera in Motive.

LLDP (PoE+) Detection

Enables detection of PoE+ switches by High Power cameras (Prime 17W and Prime 41). LLDP allows the cameras to communicate directly with the switch and determine power availability to increase output to the IR LED rings. When using Ethernet switches that are not PoE+ Enabled or switches that are not LLDP enabled, cameras will not go into the high power mode even with this setting enabled.

Strobe On During Playback

Keeps the camera IR strobe on at all times, even during the playback mode.

A list of all assets associated with the take is displayed in the Assets pane. Here, you can view the assets and right-click on an asset to export, remove, or rename it in the current take.

You can also enable or disable assets by checking or unchecking the box next to each asset. Only enabled assets will be visible in the 3D viewport and used by the auto-labeler to label the markers associated with the respective assets.

In the Assets pane, the context menu for involved assets can be accessed by clicking on the menu icon or by right-clicking on a selected Take(s). The context menu lists the available actions for the corresponding assets.

Export Rigid Body / Export Skeleton

Exports selected rigid body into Motive trackable files (TRA). Exports selected skeleton into either Motive skeleton file (SKL) or a FBX file.

Remove Asset

Removes the selected asset from a project.

Rename Asset

Renames the selected asset.

Export Markers

Exports skeleton marker template XML file. Exported XML files can be modified and imported again using the Rename Markers or when creating the skeleton in the Skeleton pane.

Rename Markers

Imports skeleton marker template XML file onto the selected asset. If you wish to apply the imported XML for labeling, all of the skeleton markers need to be unlabeled and auto-labeled again.

Update Markers

Imports the default skeleton marker template XML files. This feature can be used to update skeleton assets that are created before Motive 1.10 to include marker colors and sticks.

Recalibrate From Markers

Re-calibrates an existing skeleton. This feature is essentially the same as re-creating a skeleton using the same skeleton Marker Set. See the Skeleton Tracking page for more information on using the skeleton template XML files.

Generate Markers

This option colors the labeled markers and creates marker sticks that connect consecutive labels. More specifically, this will modify the marker XML file. It adds values to the color attributes and generates Marker Stick elements so that users can export the markers and easily modify the colors and sticks as needed. For more information: Marker XML Files.

CS-200:

Long arm: Positive z

Short arm: Positive x

Vertical offset: 19 mm

Marker size: 14 mm (diameter)

CS-400: Used for common mocap applications. Contains knobs for adjusting the balance as well as slots for aligning with a force plate.

Long arm: Positive z

Short arm: Positive x

Vertical offset: 45 mm

Marker size: 19 mm (diameter)

Legacy L-frame square: Legacy calibration square designed before changing to the Right-hand coordinate system.

Long arm: Positive z

Short arm: Negative x

Rotate view: Right + Drag

Pan view: Middle (wheel) click + drag

Zoom in/out: Mouse Wheel

Select in View: Left mouse click

Toggle Selection in View: CTRL + left mouse click

The new Continuous Calibration feature ensures your system always remains optimally calibrated, requiring no user intervention to maintain the tracking quality. It uses highly sophisticated algorithms to evaluate the quality of the calibration and the triangulated marker positions. Whenever the tracking accuracy degrades, Motive will automatically detect and update the calibration and provide the most globally optimized tracking system.

Ease of use. This feature provides a much easier user experience because the capture volume does not have to be re-calibrated as often, which saves a lot of time. You can simply enable this feature and have Motive maintain the calibration quality.

Optimal tracking quality. Always maintains the best tracking solution for live camera systems. This ensures that your captured sessions retain the highest quality calibration. If the system receives inadequate information from the environment, the calibration will not update, so your system never degrades based on sporadic or spurious data. A moderate increase in the number of real optical tracking markers in the volume and an increase in camera overlap improve the likelihood of a higher quality update.

For continuous calibration to work as expected, the following criteria must be met:

Live Mode Only. Continuous calibration only works in Live mode. It will not be active during Recording or while in Edit mode.

Markers Must Be Tracked. Continuous calibration looks at tracked reconstructions to assess and update the calibration. Therefore, at least some number of markers must be tracked within the volume.

Majority of Cameras Must See Markers. A majority of cameras in a volume needs to receive some tracking data within a portion of their field of view in order to initiate the calibration process. Because of this, traditional perimeter camera systems typically work the best. Each camera should additionally see at least 4 markers for optimal calibration.
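The criteria above can be summarized as a simple checklist. The sketch below is only an illustration and does not use any Motive API. The function name and the per-camera marker counts are hypothetical, and the counts would have to come from your own inspection of each camera view.

```python
def continuous_calibration_checklist(is_live_mode, markers_seen_per_camera,
                                     min_markers_per_camera=4):
    """Evaluate the listed criteria from user-supplied observations.

    markers_seen_per_camera: dict mapping a camera name to the number of
    tracked markers that camera currently sees (hypothetical input).
    """
    total = len(markers_seen_per_camera)
    seeing = [c for c, n in markers_seen_per_camera.items() if n > 0]
    well_covered = [c for c, n in markers_seen_per_camera.items()
                    if n >= min_markers_per_camera]
    return {
        # Continuous calibration only runs in Live mode.
        "live_mode": bool(is_live_mode),
        # At least some markers must be tracked.
        "markers_tracked": len(seeing) > 0,
        # More than half of the cameras must receive tracking data.
        "majority_see_markers": len(seeing) * 2 > total,
        # Optimal case: every camera sees at least four markers.
        "all_cameras_optimal": total > 0 and len(well_covered) == total,
    }

# Example: four cameras, one of which currently sees no markers.
status = continuous_calibration_checklist(
    True, {"cam1": 5, "cam2": 4, "cam3": 0, "cam4": 2}
)
```

In this example the majority requirement is met, but the optimal per-camera marker count is not.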

There are two different modes of continuous calibration: Continuous and Continuous + Bumped.

The Continuous mode is used to maintain the calibration quality, and it should be used in most cases. In this mode, Motive monitors how well the tracked rays converge onto tracked markers, and it updates the calibration so that corresponding tracked rays converge more precisely. This mode is capable of correcting minor degradations that result from ambient influences, such as thermal expansion of the camera mounting structure.

This mode requires markers to be seen by all of the cameras in the system in order for the calibration to be updated.

The Continuous + Bumped mode combines the continuous calibration refinement described above with the ability to resolve and repair cameras that have been bumped and are no longer contributing to 3D reconstruction. By utilizing this feature, the bumped camera will automatically resolve and be reintroduced into the calibration without requiring the user to perform a manual calibration. For just maintaining overall calibration quality, the Continuous mode should be used instead of the Continuous + Bumped mode.

The continuous calibration can be enabled or disabled in the Application Settings pane under the reconstruction tab. Set the Continuous Calibration setting to Continuous, or Continuous + Bumped to allow the feature to update the system calibration.

The status of continuous calibration can be monitored on the panel.

Under the Application Settings -> Reconstruction tab, set the continuous calibration to Continuous.

Once enabled, Motive continuously monitors the residual values in captured marker reconstructions. When the residual value increases, Motive will start sampling data for continuous calibration.

Make sure at least some number of markers are being tracked in the volume.
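To make the idea of a residual more concrete, the sketch below computes one common definition: the mean distance between a reconstructed 3D point and the camera rays that contributed to it. This is an assumption for illustration. The exact quantity that Motive monitors is not specified in this section, and all function names and sample values are hypothetical.

```python
import math

def point_to_ray_distance(point, origin, direction):
    """Shortest distance from a 3D point to the line through origin along direction.

    The ray is treated as an infinite line for simplicity.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    d = [c / norm for c in direction]
    v = [p - o for p, o in zip(point, origin)]
    # Project the point onto the line, then measure the offset.
    t = sum(a * b for a, b in zip(v, d))
    closest = [o + t * c for o, c in zip(origin, d)]
    return math.dist(point, closest)

def mean_residual(point, rays):
    """Average point-to-ray distance over (origin, direction) pairs."""
    return sum(point_to_ray_distance(point, o, d) for o, d in rays) / len(rays)

# Example: one ray passes exactly through the point, the other misses by 1 unit.
rays = [((0.0, 0.0, -5.0), (0.0, 0.0, 1.0)),
        ((1.0, 0.0, -5.0), (0.0, 0.0, 2.0))]
residual = mean_residual((0.0, 0.0, 0.0), rays)
```

A well-calibrated system keeps this kind of value small. A growing value suggests that the rays no longer converge as tightly as they did at calibration time.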

Duo/Trio Tracking Bars: Duo/Trio tracking bars can use this feature to update their calibration and improve tracking quality.

When a camera is bumped and its orientation has shifted greatly, the affected camera will no longer properly contribute to the tracking. As a result, this camera will generate a lot of untracked rays.

Under the Application Settings -> Reconstruction tab, set the continuous calibration to Continuous + Bumped Camera.

Make sure there are one or more 3D reconstructed markers in motion within the field of view of the bumped camera.

Do not use continuous calibration for updating calibration with cameras that have been moved significantly or repositioned entirely. While this feature may be able to handle such cases, this is not the intended use. When a camera is moved, you will need to manually calibrate the volume again for the best tracking quality.

Anchor markers can be set up in Motive to further improve continuous calibration. When properly configured, anchor markers improve continuous calibration updates, especially on systems that consist of multiple sets of cameras that are separated into different tracking areas, by obstructions or walls, without camera view overlap. It also provides extra assurance that the global origin will not shift during each update; although the continuous calibration feature itself already checks for this.

Follow the below steps for setting up the active anchor marker in Motive:

Adding Anchor Markers in Motive

First, make sure the entire camera volume is fully calibrated and prepared for marker tracking.

Place any number of markers in the volume to assign them as the anchor markers.

Make sure these markers are securely fixed in place within the volume. It's important that the distances between these markers do not change throughout the continuous calibration updates.
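One way to verify that anchor markers have stayed fixed is to compare their pairwise distances between two snapshots. The sketch below is a stand-alone illustration under stated assumptions: marker positions are supplied by the user in millimeters, both snapshots contain the same marker names, and the 1 mm tolerance is an arbitrary example value rather than a Motive setting.

```python
import math
from itertools import combinations

def pairwise_distances(markers):
    """Map each pair of marker names to the distance between them."""
    return {(a, b): math.dist(markers[a], markers[b])
            for a, b in combinations(sorted(markers), 2)}

def drifted_pairs(reference, current, tolerance_mm=1.0):
    """Return marker pairs whose separation changed by more than the tolerance.

    Both dicts map marker name -> (x, y, z) and must share the same names.
    """
    ref = pairwise_distances(reference)
    cur = pairwise_distances(current)
    return [pair for pair in ref if abs(cur[pair] - ref[pair]) > tolerance_mm]

# Example: marker B has slipped 5 mm along x since the reference snapshot.
reference = {"A": (0.0, 0.0, 0.0), "B": (1000.0, 0.0, 0.0), "C": (0.0, 0.0, 1000.0)}
current = {"A": (0.0, 0.0, 0.0), "B": (1005.0, 0.0, 0.0), "C": (0.0, 0.0, 1000.0)}
moved = drifted_pairs(reference, current)
```

Every pair that involves the displaced marker is reported, which helps identify which marker needs to be re-secured.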

OptiTrack motion capture systems can use both passive and active markers as indicators for 3D position and orientation. An appropriate marker setup is essential for both the tracking quality and the reliability of captured data. All markers must be properly placed and must remain securely attached to surfaces throughout the capture. If any markers are taken off or moved, they will become unlabeled from the Marker Set and will stop contributing to the tracking of the attached object. In addition to marker placements, marker counts and specifications (sizes, circularity, and reflectivity) also influence the tracking quality. Passive (retroreflective) markers need to have well-maintained retroreflective surfaces in order to fully reflect the IR light back to the camera. Active (LED) markers must be properly configured and synchronized with the system.

OptiTrack cameras track any surface covered with retroreflective material, which is designed to reflect incoming light back to its source. IR light emitted from the camera is reflected by passive markers and detected by the camera’s sensor. The captured reflections are then used to calculate the 2D marker position, which Motive uses to compute the 3D position through reconstruction. Depending on which markers are used (size, shape, etc.), you may want to adjust the camera filter parameters from the Live Pipeline settings.
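As a rough illustration of how a 2D marker position can be derived from a reflection, the sketch below computes an intensity-weighted centroid over pixels that meet a brightness threshold. This is a generic technique, not a description of Motive's internal processing. The function name, the threshold value, and the sample image are hypothetical, and the image is assumed to contain a single reflection.

```python
def weighted_centroid(image, threshold):
    """Return the (x, y) intensity-weighted centroid of pixels at or above threshold.

    image: list of rows of grayscale pixel values (assumed to hold one blob).
    Returns None when no pixel is bright enough to count as part of a marker.
    """
    total = 0.0
    sum_x = 0.0
    sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value >= threshold:
                total += value
                sum_x += x * value
                sum_y += y * value
    if total == 0:
        return None
    return (sum_x / total, sum_y / total)

# A bright 2x2 reflection centered between pixels 1 and 2 on both axes.
bright = [[0, 0, 0, 0],
          [0, 200, 200, 0],
          [0, 200, 200, 0],
          [0, 0, 0, 0]]
# A dim reflection, for example from a worn marker, that never reaches the threshold.
dim = [[0, 0, 0, 0],
       [0, 50, 50, 0],
       [0, 50, 50, 0],
       [0, 0, 0, 0]]
bright_center = weighted_centroid(bright, threshold=100)
dim_center = weighted_centroid(dim, threshold=100)
```

The dim example shows why reflections below the brightness threshold do not produce a 2D position at all.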

The size of markers affects visibility. Larger markers stand out in the camera view and can be tracked at longer distances, but they are less suitable for tracking fine movements or small objects. In contrast, smaller markers are beneficial for precise tracking (e.g. facial tracking and microvolume tracking), but have difficulty being tracked at long distances or in restricted settings and are more likely to be occluded during capture. Choose appropriate marker sizes to optimize the tracking for different applications.

If you wish to track non-spherical retroreflective surfaces, lower the Circularity value in the application settings. This adjusts the circle filter threshold so that non-circular reflections can also be considered as markers. However, keep in mind that this will lower the filtering threshold for extraneous reflections as well.

All markers need to have a well-maintained retroreflective surface. Every marker must satisfy the brightness Threshold to be recognized in Motive. Worn markers with damaged retroreflective surfaces will appear dimmer in the camera view, and the tracking may be limited.

Pixel Inspector: You can analyze the brightness of pixels in each camera view by using the pixel inspector, which can be enabled from the .

Please contact us to decide which markers will suit your needs.

OptiTrack cameras can track any surface covered with retro-reflective material. For best results, markers should be completely spherical with a smooth and clean surface. Hemispherical or flat markers (e.g. retro-reflective tape on a flat surface) can be tracked effectively from straight on, but when viewed from an angle, they will produce a less accurate centroid calculation. Hence, non-spherical markers will have a less trackable range of motion when compared to tracking fully spherical markers.
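One geometric reason flat markers are harder to track at an angle is foreshortening: the apparent area of a flat disc shrinks with the cosine of the viewing angle, while a sphere presents the same circular outline from every direction. The sketch below illustrates only this effect. It ignores perspective and any change in retroreflective brightness with angle, and the function names are hypothetical.

```python
import math

def apparent_area_flat(diameter_mm, view_angle_deg):
    """Projected area of a flat disc viewed at an angle from its normal."""
    radius = diameter_mm / 2.0
    cosine = max(0.0, math.cos(math.radians(view_angle_deg)))
    return math.pi * radius * radius * cosine

def apparent_area_sphere(diameter_mm):
    """Projected area of a sphere, which is the same from any direction."""
    radius = diameter_mm / 2.0
    return math.pi * radius * radius

# Compare a 14 mm flat marker with a 14 mm sphere at several viewing angles.
sphere_area = apparent_area_sphere(14.0)
ratios = {angle: apparent_area_flat(14.0, angle) / sphere_area
          for angle in (0, 30, 60, 85)}
```

At 60 degrees the flat marker already covers only half the image area of the equivalent sphere, leaving fewer pixels for the centroid calculation.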

OptiTrack's active solution provides advanced tracking of IR LED markers to accomplish the best tracking results. This allows each marker to be labeled individually. Please refer to the page for more information.

Active (LED) markers can also be tracked with OptiTrack cameras when properly configured. We recommend using OptiTrack’s Ultra Wide Angle 850nm LEDs for active LED tracking applications. If third-party LEDs are used, their illumination wavelength should be at 850nm for best results. Otherwise, light from the LED will be filtered by the band-pass filter.

If your application requires tracking LEDs outside of the 850nm wavelength, the OptiTrack camera should not be equipped with the 850nm band-pass filter, as it will cut off any illumination above or below the 850nm wavelength. An alternative solution is to use the 700nm short-pass filter (for passing illumination in the visible spectrum) and the 800nm long-pass filter (for passing illumination in the IR spectrum). If the camera is not equipped with the filter, the Filter Switcher add-on is available for purchase. There are also other important considerations when incorporating active markers in Motive:

Place a spherical diffuser around each LED marker to increase the illumination angle. This will improve the tracking since bare LED bulbs have limited illumination angles due to their narrow beamwidth. Even with wide-angle LEDs, the lighting coverage of bare LED bulbs will be insufficient for the cameras to track the markers at an angle.

If an LED-based marker system will be strobed (to increase range, offset groups of LEDs, etc.), it is important to synchronize the strobes with the camera system. If you require an LED synchronization solution, please contact us to learn more about OptiTrack’s RF-based LED synchronizer.

Proper marker placement is vital for the quality of motion capture data because each marker on a tracked subject is used as an indicator for both position and orientation. When an asset (a Rigid Body or Skeleton) is created in Motive, the unique spatial relationships of its markers are calibrated and recorded. The recorded information is then used to recognize the markers in the corresponding asset during the labeling process. For best tracking results, when multiple subjects with a similar shape are involved in the capture, it is necessary to offset their marker placements to introduce asymmetry and avoid congruency.

Read more about marker placements from the page and the page.

Asymmetry

Asymmetry is the key to avoiding congruency when tracking multiple Marker Sets. When there is more than one similar marker arrangement in the volume, marker labels may be confused. Thus, it is beneficial to place segment markers in asymmetrical positions for similar Rigid Bodies and Skeleton segments (joint markers, by contrast, must always be placed on anatomical landmarks). This provides a clear distinction between two similar arrangements. Furthermore, avoid placing markers in a symmetrical shape within a segment as well. For example, a perfect square marker arrangement will have an ambiguous orientation, and frequent mislabels may occur throughout the capture. Instead, follow the rule of thumb of placing the less critical markers in asymmetrical arrangements.
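A quick way to screen a planned marker layout for symmetry or congruency is to compare pairwise marker distances. The sketch below is a heuristic illustration, not how Motive labels markers. The function names, the 1 mm tolerance, and the sample coordinates are hypothetical. Note that matching distance lists suggest two layouts may be confusable but do not strictly prove that they are congruent.

```python
import math
from itertools import combinations

def sorted_distances(points):
    """Sorted list of all pairwise distances within a marker arrangement."""
    return sorted(math.dist(a, b) for a, b in combinations(points, 2))

def repeated_distance_count(points, tolerance=1.0):
    """Count neighbouring entries in the sorted distance list that nearly match.

    A high count hints at a symmetric layout with ambiguous orientation.
    """
    d = sorted_distances(points)
    return sum(1 for a, b in zip(d, d[1:]) if b - a <= tolerance)

def look_congruent(points_a, points_b, tolerance=1.0):
    """True when two arrangements have near-identical pairwise distances."""
    da, db = sorted_distances(points_a), sorted_distances(points_b)
    return len(da) == len(db) and all(abs(x - y) <= tolerance for x, y in zip(da, db))

# A perfect 100 mm square versus an irregular four-marker layout (units: mm).
square = [(0, 0, 0), (100, 0, 0), (100, 0, 100), (0, 0, 100)]
irregular = [(0, 0, 0), (100, 0, 0), (130, 0, 70), (20, 0, 150)]
shifted_square = [(x + 500, y, z) for x, y, z in square]

square_repeats = repeated_distance_count(square)
irregular_repeats = repeated_distance_count(irregular)
```

The square produces several repeated distances while the irregular layout produces none, and a second square placed elsewhere in the volume is flagged as congruent with the first.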